NNadir

NNadir's Journal

During the French Revolution a priest, a drunk and an engineer are sent to the guillotine.

They ask the priest if he wants to face up or down when he meets his fate. The priest says he would like to face up so he will be looking towards heaven when he dies. They raise the blade of the guillotine and release it. It comes speeding down and suddenly stops just inches from his neck. The authorities take this as divine intervention and release the priest.

The drunkard comes to the guillotine next. He also decides to die face up, hoping that he will be as fortunate as the priest. They raise the blade of the guillotine and release it. It comes speeding down and suddenly stops just inches from his neck. Again, the authorities take this as a sign of divine intervention, and they release the drunkard as well.

Next is the engineer. He, too, decides to die facing up. As they slowly raise the blade of the guillotine, the engineer suddenly says, "Hey, I see what your problem is ..."

Werner Heisenberg, Kurt Gödel, and Noam Chomsky walk into a bar...

Heisenberg turns to the other two and says, "Clearly this is a joke, but how can we figure out if it's funny or not?"

Gödel replies, "We can't know that because we're inside the joke."

Chomsky says, "Of course it's funny. You're just telling it wrong."

Cerium Requirements to Split One Billion Tons of Carbon Dioxide, the Nuclear v Solar Thermal cases.

The paper I will discuss in this post is this one: Solar thermochemical splitting of CO2 into separate streams of CO and O2 with high selectivity, stability, conversion, and efficiency (Daniel Marxer , Philipp Furler , Michael Takacs and Aldo Steinfeld, Energy Environ. Sci., 2017, 10, 1142-1149)

The paper appears to be open access, and if you're interested you can read it yourself in its entirety, but I'll post a picture of the "solar reactor" from the paper anyway:

Here's the caption:

The device built and shown in the picture has operated on a lab scale, albeit, as we'll see below, not as a solar device.

In 2017, according to the 2018 Edition of the World Energy Outlook, released a few weeks back, carbon dioxide emissions amounted to 32.1 billion tons.

Most laboratory scale devices in the field of energy (and many other fields as well) never reach industrial scale, although many people in the general non-technical population reading about them show breathless enthusiasm for them, as if they represented a "problem solved" reality.

They do not.

The problem of climate change has not been solved, and what we are doing about climate change is, essentially, nothing at all.

Nevertheless, this is an interesting device, and as a thought experiment, I decided to do a quick "back of the envelope" calculation about how much cerium would be required to split one billion tons of carbon dioxide, only a fraction of what we apparently dumped in a single year, but nevertheless what would be a significant fraction (around 3%) of this dangerous fossil fuel waste that is literally killing the planet.

Although - probably for funding reasons - this device is purportedly described as "solar," it is actually agnostic for the source of primary energy. It would operate using electricity (which is the source utilized in the paper's description of the experimental operation), dangerous fossil fuels (although this might amount to a material perpetual motion machine), or nuclear energy.

In my calculations, I compared the nuclear and solar cases, because they're illustrative, and nominally carbon free, if one ignores the fact that the world’s largest solar thermal plant – there are actually very, very, very few of them as of 2018 - spends a significant portion of its operating time as a very, very, very expensive and unreliable gas plant.

Here, from the paper, are the chemical reactions by which this device splits carbon dioxide:

ΔH = 475 kJ per 1/2 mole of O2

ΔH = -192 kJ per mole of CO2

...and the net reaction for which cerium oxides are catalysts:

ΔH = 283 kJ per mole CO2
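As a sketch of the bookkeeping, assuming the idealized Ce(IV)/Ce(III) oxide couple used in the stoichiometric estimate later in this post (the paper itself may write the cycle in terms of partially reduced, nonstoichiometric ceria), the two steps and their sum can be written as follows. The quoted enthalpies close by Hess's law only if the oxidation step is exothermic, so its negative sign here is an inference from the other two values.

```latex
\begin{align*}
2\,\mathrm{CeO_2} &\longrightarrow \mathrm{Ce_2O_3} + \tfrac{1}{2}\,\mathrm{O_2}
  & \Delta H_1 &= +475\ \mathrm{kJ} \\
\mathrm{Ce_2O_3} + \mathrm{CO_2} &\longrightarrow 2\,\mathrm{CeO_2} + \mathrm{CO}
  & \Delta H_2 &= -192\ \mathrm{kJ} \\
\mathrm{CO_2} &\longrightarrow \mathrm{CO} + \tfrac{1}{2}\,\mathrm{O_2}
  & \Delta H_1 + \Delta H_2 &= +283\ \mathrm{kJ}
\end{align*}
```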

The first reaction, the reduction, takes place at roughly 1400 C.

The second reaction - the oxidation of the catalyst (and the reduction of carbon dioxide) - takes place between 700 C and 1000 C.

Thus alternate heating and cooling cycles are required.

Let’s compare the sources of primary energy, here solar thermal and nuclear:

The world’s largest solar thermal plant is the plant at Ivanpah in California. I have no idea about the maximum operating temperature of the economic and environmental disaster at Ivanpah. I try not to think too much about quixotic adventures that might have been avoided on inspection which waste enormous resources for no real use. In any case, since the plant operates for part of the time as a gas plant, an effort to split its carbon dioxide into carbon monoxide and oxygen is perpetual motion territory.

To give a feel for the plant, with the understanding of the source, I will rely, for convenience, on data from the Wikipedia page for the Ivanpah "Solar" thermal plant. (Anyone with a better reference is heartily invited to produce it.) The plant officially came on line in 2014, but the numbers I will utilize here will all refer to the years of full operation listed in the tables at the bottom, specifically 2015, 2016, and 2017.

Because waiting for the sun to heat its boilers would lead to degraded performance, each morning the boilers are brought up to temperature by burning dangerous natural gas before the sun can take over. The dangerous natural gas waste, chiefly carbon dioxide, is, as is the case nearly everywhere else, dumped into humanity's favorite waste dump, the planetary atmosphere. In the period from January 2015 to December 2017, the amount of carbon dioxide dumped by the plant was 199,236 tons. Overall, dangerous natural gas accounts for 3.96 petajoules of energy. The delivered energy over three years (as electricity) was 8.98 petajoules. Thus the heat content of the dangerous natural gas burned was equivalent to 44.1% of the energy delivered.

The $2.2 billion plant, which occupies 4000 acres, is rated at 392 MW of power, scaled back from the original 440 MW planned because people wanted to pretend to give a rat's ass about the habitat of the desert tortoise.

The actual average continuous power of the plant, for the three full years of operation can be calculated from the total energy, divided by the operating time in seconds. It is 94.86 MW. Thus the capacity utilization of the plant is 24.20%.
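As a quick check on these two numbers, here is a minimal sketch that recomputes them from the three-year electricity total quoted later in this post (2,494,644 MWh). The variable names are illustrative, and the three years are assumed to average 365.25 days each.

```python
# Figures quoted in this post for Ivanpah, 2015 through 2017.
ELECTRICITY_MWH = 2_494_644   # electricity delivered over the three years
RATED_MW = 392                # nameplate rating

# Assumption: three years averaging 365.25 days each.
HOURS_IN_PERIOD = 3 * 365.25 * 24

# Average continuous power is total energy divided by elapsed time.
average_mw = ELECTRICITY_MWH / HOURS_IN_PERIOD

# Capacity utilization is average power over rated power.
capacity_utilization = average_mw / RATED_MW

print(f"average continuous power: {average_mw:.2f} MW")
print(f"capacity utilization:     {capacity_utilization:.2%}")
```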

The boiler temperature (as opposed to a putative maximum operational temperature) of the plant is reportedly 550C, according to a White Paper published in 2014 by its design firm, BrightSource Energy (before the plant's actual operational record was available). This is considerably lower than the temperature at which cerium (IV) oxide is reduced to cerium (III) oxide releasing oxygen in the process, again, 1400C. It is high enough, however, to denature the keratin in bird wings (160C) according to a wonderful detailed analysis in a 2017 NREL study of birds burned up in flight - colloquially referred to by the dubiously amusing term "streamers" because of the trails of smoke they leave when they fall smoldering or in flames. According to a news item at "E&E News", "only" a bit over 6,100 birds caught fire in flight in the second year of operation.

Recently a few people out of the Argonne National Laboratory extrapolated the death toll of birds using data from operations of solar thermal plants: A preliminary assessment of avian mortality at utility-scale solar energy facilities in the United States. Their estimation was that in Southern California between 16,200 and 59,400 birds are killed each year by solar thermal energy production.

Don't worry. Be happy. It's not like desert habitat is "useful" for anything except to make "green" energy.

And the Argonne scientists make that point, "Don't worry be happy." They point to a reference showing that buildings kill hundreds of millions of birds in the United States each year, which I take to mean that if we replaced all of our buildings with solar thermal plants, we could save the lives of birds. I don’t know what the effect of tents on birds would be however. Then they go on to cite Benjamin Sovacool’s paper on the impact of dangerous fossil fuel plants and nuclear plants on birds.

I have a passing familiarity, by the way, with Benjamin Sovacool’s, um, “work,” which, to be straight up, I regard with absolute contempt on both technical and moral/ethical grounds. To my way of thinking, he is one of the horseshitters of the apocalypse, along with Mark Z. Jacobson of Stanford University and Ed Lyman of the Union of Concerned “Scientists,” the apocalypse in question being climate change, an ongoing disaster which no amount of work and effort and engineering can prevent, since it has already destroyed irreplaceable things, the only question remaining being how much more and how fast it will destroy many, many, many more irreplaceable things.

Sovacool, who has a Ph.D. in something called “Science Policy” to complement his Master’s degrees one of which is also in “Science Policy,” the other in “Rhetoric,” which in turn complement his double major Bachelor’s degree in Philosophy and um, something called “Communication Studies,” seems to get cited a fair number of times with this dubious calculation of the bird impact of nuclear plants, and dangerous fossil fuel plants although there is no evidence whatsoever that he is an expert in ornithology, or for that matter, engineering (including but not limited to nuclear engineering), epidemiology or atmospheric science.

For the record, in my long career, I’ve probably interacted with at least ten thousand holders of Ph.D’s. In a typical day, I might have a conversation with ten holders of this degree. Like most human beings, they’re a mixed bag: Some are absolutely brilliant, unbelievably impressive, but there is definitely a subset of Ph.D’s who have critical thinking skills as poor as, or even worse than, those demonstrated by Sovacool, and Sovacool represents a pretty low bar. Most recently he seems to be working in that so called “renewable energy” nirvana, Denmark, which is also an offshore oil and gas drilling hellhole.

The three horseshitters of the apocalypse love to carry on endlessly about how “dangerous” nuclear power is, and run around making statements about how wonderful so called “renewable energy” is in comparison, and how so called “renewable energy” should replace the world’s nuclear plants. I’ve been painfully familiar with aspects of their horseshit for about ten years. As I frequently point out, the current death toll for air pollution is about 7 million deaths per year, so that in the last ten years, the death toll for human beings from air pollution has been greater than the loss of life in World War II.

So we might ask ourselves if the advocacy of the horseshitters of the apocalypse - that we put all our hopes in so called "renewable energy" - is dangerous, given that half a century of their horseshit and similar horseshit has not even come close to slowing the rise in the use of dangerous fossil fuels: 2017 set a record for the amount of carbon dioxide dumped into the air, and the growth in the use of dangerous fossil fuels in that single year was roughly 4.9 times the growth of solar & wind combined, 5.95 exajoules for the former as opposed to 1.21 exajoules for the latter. (See WEO reference below.)

It is very, very, very, very clear with even a shred of critical thinking, which escapes the horseshitters of the apocalypse entirely, that so called "renewable energy" is extremely dangerous, not because of its environmental effects - although these are certainly worthy of consideration - but because it has not worked, and is not working and (I contend) will not work to stop the extremely destructive and deadly use of dangerous fossil fuels. And because it is not working and has not worked, despite the "investment" of trillions of dollars, it is allowing a death toll greater than all deaths in World War II to occur every decade.

Now let's consider the nuclear case in this discussion of thermally splitting carbon dioxide:

I will state quite plainly that the number of commercial nuclear reactors that have operated at temperatures sufficient to drive the first reaction, the oxygen generating reaction, at 1400C, is zero.

All of the high temperature nuclear reactors built over the last 60 years have been gas cooled reactors. The highest temperatures ever recorded in a commercial gas cooled nuclear reactor were recorded at the AVR, which was not strictly a commercial reactor but doubled as a research “pebble bed” reactor, a prototypical test reactor - albeit connected to the grid. It operated in Germany, a country now consumed by anti-nuclear ignorance, at a research center at Jülich from 1967 to 1988. The highest recorded temperature of the helium gas utilized to cool it was 950C.

The UHTREX reactor, an experimental helium cooled reactor which operated at Los Alamos from 1959 to 1971, operated at temperatures approximating this requirement. It had an outlet temperature of 1300 C, close to the reported temperature utilized in the device described in the paper. The reactor was designed to release fission products directly to the coolant stream. (Ultimately it was decided that this wasn’t a good idea although I am very much convinced that the opposite case is true, particularly in light of advances in materials science, although in my own vision, fission products are not released into a coolant, but function, at least in some possible iterative thought experiments, as the coolant themselves.)

Commercial gas cooled reactors have been most successful in the United Kingdom; the first commercial reactor in the world fit into this class: the Magnox Calder Hall reactor that operated from 1956 up to 2003. This had carbon dioxide as a working fluid, and similar reactors still operate in Britain, the AGRs, or “Advanced Gas Cooled Reactors.” In the United States, two examples of commercial gas cooled reactors have operated to my knowledge, the Fort St. Vrain reactor in Colorado and the Peach Bottom 1 reactor in Pennsylvania, the latter being considered a test reactor rather than a purely commercial reactor. Both reactors were helium cooled, but had short operational lifetimes, from 1966 to 1974 for Peach Bottom 1, and from 1979 to 1989 for Ft. St. Vrain. These operational lifetimes were even shorter than the unacceptably short mean lifetimes of Danish wind turbines (on the order of 17 years on average), and thus these two represented economic failures.

Fort St. Vrain’s turbines were converted to run on dangerous natural gas, the waste of which is dumped directly into the atmosphere, with nobody actually caring a millionth as much as many Americans care about the outcome of the next Superbowl.

In history, the Superbowl will not matter at all; the carbon dioxide will, more than anyone can even imagine, and hundreds of thousands of scientists are imagining the consequences. There is a subset of people – exceptionally ignorant people in my view – who are doing a kind of victory dance because nuclear plants are closing at a rate faster than they are being built. They are such poor thinkers, are so myopic, that they claim that nuclear plants are “not economic.” In their stupid and ill-informed imagination they believe that nuclear plants are being replaced by “cheap renewable energy.” These are the same sort of people who did a victory dance when the Fort Saint Vrain nuclear plant was converted to a gas plant. Let’s be clear on something, OK? The Ivanpah plant was as much an economic failure as a solar facility as Fort St. Vrain was as a nuclear plant; one difference between the two plants is that Ivanpah has always depended on dangerous natural gas to operate, and that it never met its design capacity for operation. So called “renewable energy” has very little to do with the closure of nuclear plants, since so called “renewable energy” is an expensive and trivial form of energy. The growth in the use of coal in the 21st century easily outstrips, by a factor of six, the entire output of the wind, solar, tidal and geothermal industry, this after more than half a century of cheering for them. What is causing nuclear plants to shut is increases in the use of dangerous fossil fuels, in particular the use of dangerous natural gas.

Of course, it would be perhaps "unfair" to represent the failure of the Ivanpah plant as representative of what could be the case for imaginary future solar thermal plants any more than it would be fair to represent Ft. St. Vrain's reactor as representative of the nuclear case. Nevertheless, one should remark that when it operated as a nuclear plant, Ft. St. Vrain did not represent the investment of 4000 acres of pristine habitat to produce a trivial amount of industrial energy - requiring an input of dangerous natural gas - to effectively operate as a less than 100 MW power plant. Four thousand acres is nearly 16.2 square kilometers.

World energy demand as of 2017 was 584.98 exajoules:

2018 Edition of the World Energy Outlook Table 1.1 Page 38 (I have converted MTOE in the original table to the SI unit exajoules in this text.)

World energy demand grew in 2017 by 8.88 exajoules. The fastest growing source of new energy on this planet was not so called “renewable energy.” What the IEA still calls “other renewable energy,” solar, wind, with a little geothermal and tidal thrown in, grew in 2017 by 1.21 exajoules. (The IEA excludes the only two significant forms of so called “renewable energy,” hydroelectricity and biomass, from this category; they grew by respectively 0.13 exajoules and 1.30 exajoules.) These figures compare to the growth in the energy produced by burning dangerous fossil fuel natural gas, which grew by 4.19 exajoules in 2017, followed by the growth in the energy produced by burning dangerous fossil fuel petroleum, which grew by 1.97 exajoules in 2017. Dangerous natural gas, followed by petroleum, not so called “renewable” energy, despite what you may hear or may be telling yourself, was the fastest growing source of energy on the planet. Combined with the insignificant decline in the use of the dangerous fossil fuel coal (-0.21 exajoules), dangerous fossil fuels combined produced 5.95 of the 8.88 exajoules by which world energy demand increased in 2017, or in the “percent talk” used by partisans of the absurd notion that so called “renewable energy” will save us, dangerous fossil fuels accounted for 66.98% of the growth in energy in 2017.
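The arithmetic in this paragraph can be laid out in a few lines. This is only a sketch built from the rounded exajoule figures quoted above; the dictionary keys are labels chosen for illustration, and because the inputs are rounded the share comes out at about 67.0% rather than exactly 66.98%.

```python
# Growth in world energy demand in 2017, in exajoules, as quoted above.
growth_ej = {
    "natural gas": 4.19,
    "petroleum": 1.97,
    "coal": -0.21,
    "solar, wind, geothermal, tidal": 1.21,
    "hydroelectricity": 0.13,
    "biomass": 1.30,
}
TOTAL_GROWTH_EJ = 8.88  # total growth in demand, all sources

# Net growth from the three dangerous fossil fuels combined.
fossil_ej = sum(growth_ej[k] for k in ("natural gas", "petroleum", "coal"))

# Share of total growth, and the ratio to solar/wind/geothermal/tidal growth.
fossil_share = fossil_ej / TOTAL_GROWTH_EJ
fossil_vs_other_renewables = fossil_ej / growth_ej["solar, wind, geothermal, tidal"]

print(f"fossil fuel growth: {fossil_ej:.2f} EJ")
print(f"share of all growth: {fossil_share:.1%}")
print(f"ratio to solar/wind growth: {fossil_vs_other_renewables:.2f}")
```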

The lie that natural gas is “cheap” is wholly a function of ignoring its external costs, the flow back water bleeding out of the permanently destroyed bedrock subject to “hydraulic fracturing” – “fracking” – the chemicals and toxic and radioactive materials that water contains, the largely ignored gas explosions around the world, all of these baleful things nonetheless being dwarfed by climate change to which dangerous natural gas is a party to be sure, all costs that will not be paid by the assholes who claim that natural gas is “cheap” but by all human beings, and all living things that live after us.

Ivanpah provides fifteen millionths of the world energy supply. Ignoring all the horseshit about energy storage - the second law of thermodynamics requires that the storage of energy wastes energy - to make the thus far useless and ineffective so called "renewable energy" industry able to provide continuous rather than sporadic energy, this means that to produce the world energy supply with "Ivanpahs" would require roughly 1,050,000 square kilometers. These square kilometers would need to be in the equivalent of US Southwestern deserts with respect to their total irradiance, a function largely of cloud cover, i.e. the weather. In addition note that these plants would be even more useless in any place where the ground is covered for a significant amount of time with snow, not that the continued existence of snow is likely given the complete technical inadequacy in addressing climate change by which humanity imagines – when it doesn’t ignore – the problem. The paper cited above, A preliminary assessment of avian mortality at utility-scale solar energy facilities in the United States, contains a map of the United States showing the irradiance of areas of the United States. The Ivanpah plant is located in one of the regions of highest irradiance shown, greater than or equal to 7.6 kWh/m^2/day. Of course the efficiency of the transformation is represented by the experimental results for the performance of the plant.

Nearly the entire State of Arizona is in the same category of irradiance as observed at Ivanpah, which is located near the southernmost Nevada border in California. The State of Arizona is about 295,000 square kilometers total, or roughly 1/3 of the roughly 1,050,000 square kilometers of Ivanpahs needed, again ignoring the huge thermodynamic penalty of energy storage, not to mention energy transport.
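The land estimate is a simple scaling, sketched below from figures quoted in this post: 16.2 square kilometers for the plant, 8.981 petajoules of electricity over three years, and 584.98 exajoules of annual world demand. One assumption should be flagged loudly: the "fifteen millionths" figure is recovered when the three-year electricity total is set against a single year of world demand. If the output is annualized instead, the fraction drops to about five millionths and the area triples, so the second line of output is the more conservative reading of the same data.

```python
PLANT_AREA_KM2 = 16.2          # 4000 acres
ELECTRICITY_3YR_J = 8.981e15   # electricity delivered, 2015 through 2017
WORLD_DEMAND_J = 584.98e18     # world energy demand in 2017
ARIZONA_KM2 = 295_000

def area_to_supply_world(fraction_of_world_supply):
    """Area needed if land scales linearly with energy delivered."""
    return PLANT_AREA_KM2 / fraction_of_world_supply

# Basis that reproduces "fifteen millionths": three-year output vs one year of demand.
fraction_quoted_basis = ELECTRICITY_3YR_J / WORLD_DEMAND_J
# Assumption: annualized output (one third of the three-year total).
fraction_annual_basis = fraction_quoted_basis / 3

for label, fraction in (("quoted basis", fraction_quoted_basis),
                        ("annual basis", fraction_annual_basis)):
    area = area_to_supply_world(fraction)
    print(f"{label}: {fraction * 1e6:.1f} millionths, "
          f"{area:,.0f} km^2, {area / ARIZONA_KM2:.1f} Arizonas")
```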

If your response, with the knee jerk pablum about how great so called "renewable energy" is and your fingers stuck in your ears, is "It's only desert," all I can say, as an environmentalist, is, excuse my language, "Fuck you!"

A proposal to cover three Arizonas – and this applies to the planet as a whole, not just the United States - with mirrors subject to blasting by sand and requiring water to wash them periodically is not environmentalism. It's foolishness, at best, rank stupidity at worst, to put even a shred of faith in this nonsensical unworkable approach.

According to the World Energy Outlook, in 2017 alone, the increase in the amount of the dangerous fossil fuel waste carbon dioxide was half a billion tons, or half the amount of carbon dioxide I propose to split in my thought experiment suggested at the outset of this post. It’s a thought experiment because with the exception of the experimental UHTREX nuclear reactor mentioned above, neither a solar thermal nor a nuclear plant has been built that has demonstrated sustained temperatures of around 1400C.

Indeed in the paper cited at the outset, which claims to be a paper about using “solar thermal energy,” the primary energy source used in the demonstration is not solar energy at all. It’s xenon arcs, presumably powered by reliable electricity, which the authors claim “simulate” solar thermal energy, should it ever actually be practically available.

To wit, from the experimental part of their paper:

The bold is mine. Note that much of the other equipment by which the system operated is electrically operated or controlled as well. Some of the equipment is for experimental determinations, for example the gas chromatograph, but other pieces such as valve controllers, temperature monitors and vacuum pumps (or, alternatively, compressors), would be required during any putative commercial operation of this device.

Irrespective of the authors’ reliance on a “solar simulator” using xenon arcs, they claim that the technology for concentrating what they call “DNI” for “Direct Normal solar Irradiation” to what they then call “3000 suns” already exists. Then, without much consideration of the surface area required to convert DNI to “3000 suns,” on a scale to represent a significant portion of the 584.98 exajoules humanity was consuming as of 2017 – half a century into all the worldwide wild cheering for solar energy even as dangerous fossil fuel use continues to rise rapidly, despite solar “investments” – they offer us an equation for the “ideal” maximal thermal efficiency of a solar thermal system, including both a Stefan-Boltzmann term and a Carnot term:

From my perspective this equation, while claiming to address the efficiency of “solar to fuel” seems not to account for the chemical thermodynamics, although perhaps they consider it “included” as they offer us the enthalpy term (but not the entropy term or ΔG, the Gibbs Free Energy) for the overall reaction of splitting carbon dioxide into carbon monoxide and oxygen, 234 kJ/mol, and refer also to the operating temperature at which the reaction theoretically takes place, 1773K (1500 C). Using this equation and plugging in some values they calculate that the “ideal” (maximal) efficiency of the solar to fuel conversion is 63%. This said, the thermodynamics of gas phase reactions in real life is very much dependent on conditions other than temperature, notably pressure and flow, but OK, I can live with this statement.

Now after all this rambling and commentary we are free to compare the cerium requirements to split one billion tons of carbon dioxide into carbon monoxide and oxygen. Let’s first consider the stoichiometric case wherein the reaction goes 100% to completion, something that will not happen for reasons I’ll discuss below, that is the case where all of the Ce2O3 is oxidized to CeO2 while reducing carbon dioxide to carbon monoxide, and all of the CeO2 so obtained is reduced back to Ce2O3 by quantitatively releasing oxygen under thermal conditions.

Exactly one billion tons of carbon dioxide represents 2.272 X 10^13 moles of carbon dioxide. The stoichiometry of the reduction of carbon dioxide is 1:1, so were the reaction not cyclic it would require the same number of moles of Ce2O3, which is 85.377% cerium by weight. The reaction is cyclic, however, and thus the amount of cerium required is wholly a function of the number of cycles that can be carried out in a year. Thus the pertinent point is the capacity utilization of either solar thermal or nuclear plants. It is important to point out that, the experimental results from the paper notwithstanding, I am making this calculation as a thought experiment, since neither nuclear plants nor solar thermal plants carrying out chemical reactions (other than to the extent that they combust dangerous natural gas) exist.
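The mole count and the cerium content of the two oxides follow directly from molar masses. The sketch below assumes metric tons and rounded standard atomic weights; the constant names are illustrative. It shows Ce2O3 at about 85.4% cerium by weight and CeO2 at about 81.4%.

```python
# Rounded standard atomic weights in g/mol (assumed values).
M_C, M_O, M_CE = 12.011, 15.999, 140.116

M_CO2 = M_C + 2 * M_O          # carbon dioxide
M_CE2O3 = 2 * M_CE + 3 * M_O   # cerium (III) oxide
M_CEO2 = M_CE + 2 * M_O        # cerium (IV) oxide

# One billion metric tons of CO2, converted to grams and then to moles.
co2_grams = 1e9 * 1e6
co2_moles = co2_grams / M_CO2

# Mass fraction of cerium in each oxide.
ce_fraction_ce2o3 = 2 * M_CE / M_CE2O3
ce_fraction_ceo2 = M_CE / M_CEO2

print(f"moles of CO2:      {co2_moles:.4e}")
print(f"Ce in Ce2O3 (w/w): {ce_fraction_ce2o3:.3%}")
print(f"Ce in CeO2 (w/w):  {ce_fraction_ceo2:.3%}")
```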

Above I stated that the capacity utilization of the Ivanpah plant was 24.20% and thus it might be reasonable to assume that a putative solar plant for conducting chemical reactions – in this case splitting carbon dioxide – might be available for 24 hours * .2420 = 5.81 hours a day. However the Ivanpah plant has burned 3,754,206 MMBTU of dangerous natural gas from 2015 to 2017. Seen purely in terms of heat content, this dangerous natural gas accounted for 3.96 petajoules of energy, while the plant produced 2,494,644 MWh of electrical energy which translates to 8.981 petajoules of electricity. Thus one could argue that 44.1% of the energy came from burning dangerous natural gas and indiscriminately dumping the dangerous fossil fuel waste carbon dioxide directly into the atmosphere. However, it might be possible to construct a solar thermal plant that stores some of its heat energy (with the caveat that storing energy wastes energy). For the sake of this thought experiment, I’m going to compromise and assume that the plant provides sufficient heat to split carbon dioxide for four hours a day, avoiding the necessity of imagining a carbon perpetual motion machine in which the carbon dioxide from natural gas is collected and split and hydrogenated for use the next day to heat the device.
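The unit conversions behind this paragraph are easy to check. This is a minimal sketch that assumes the International Table Btu (1 MMBtu = 1.055056 gigajoules) and uses illustrative variable names.

```python
MMBTU_TO_J = 1.055056e9   # one million Btu (International Table) in joules
MWH_TO_J = 3.6e9          # one megawatt-hour in joules

GAS_BURNED_MMBTU = 3_754_206   # natural gas burned, 2015 through 2017
ELECTRICITY_MWH = 2_494_644    # electricity delivered, 2015 through 2017

gas_pj = GAS_BURNED_MMBTU * MMBTU_TO_J / 1e15
electricity_pj = ELECTRICITY_MWH * MWH_TO_J / 1e15

# Heat content of the gas relative to the electricity delivered.
gas_to_electricity = gas_pj / electricity_pj

# Equivalent full-power hours per day at 24.20% capacity utilization.
full_power_hours_per_day = 24 * 0.2420

print(f"gas heat content:      {gas_pj:.2f} PJ")
print(f"electricity delivered: {electricity_pj:.3f} PJ")
print(f"gas / electricity:     {gas_to_electricity:.1%}")
print(f"full-power hours/day:  {full_power_hours_per_day:.2f}")
```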

The authors of the paper claim that each of the two reactions through which the cycle for splitting carbon dioxide is realized lasts for 15 minutes, meaning that the total cycle time is 30 minutes. If my estimate of the capacity factor of the solar thermal powered reactor is justified – and I believe it is – this means that it is possible to complete 8 cycles a day, or 2,922 cycles per year, assuming everything works properly and there are no protracted shutdowns. Under these conditions, in the stoichiometric case, where all of the cerium oxides are available to react with carbon dioxide and/or release oxygen, the requirement for cerium, which can be calculated using high school level chemistry, is 2,552,419 tons of Ce2O3, which represents 2,179,170 tons of pure cerium metal. Their graphics showing the course of the reaction suggest that the cycle time was slightly longer, but let’s go with 30 minutes in this thought experiment:

The caption:

…and…

The caption:
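The solar thermal figures above can be reproduced with a short sketch. It assumes four operating hours a day, a 30 minute cycle, a 365.25 day year, metric tons, and rounded atomic weights; the function names are hypothetical. With those inputs the results land within a few tens of tons of the values quoted.

```python
# Rounded standard atomic weights in g/mol (assumed values).
M_C, M_O, M_CE = 12.011, 15.999, 140.116
M_CO2 = M_C + 2 * M_O
M_CE2O3 = 2 * M_CE + 3 * M_O

def cycles_per_year(hours_per_day, cycle_minutes=30, days_per_year=365.25):
    """Number of complete redox cycles in a year of daily operation."""
    return hours_per_day * 60 / cycle_minutes * days_per_year

def ce2o3_inventory_tons(co2_tons_per_year, cycles):
    """Ce2O3 needed if every mole of Ce2O3 splits one mole of CO2 per cycle."""
    co2_moles_per_cycle = co2_tons_per_year * 1e6 / M_CO2 / cycles
    return co2_moles_per_cycle * M_CE2O3 / 1e6

solar_cycles = cycles_per_year(hours_per_day=4)
solar_ce2o3_tons = ce2o3_inventory_tons(1e9, solar_cycles)
solar_cerium_tons = solar_ce2o3_tons * 2 * M_CE / M_CE2O3

print(f"cycles per year: {solar_cycles:,.0f}")
print(f"Ce2O3 required:  {solar_ce2o3_tons:,.0f} tons")
print(f"cerium metal:    {solar_cerium_tons:,.0f} tons")
```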

Now let’s look at the cerium requirements associated with using nuclear energy as the primary source of energy to split carbon dioxide into oxygen and carbon monoxide.

The ignorant people who made a habit of attacking nuclear energy back in the 1970’s and early 1980’s – I was among them – included among their many specious objections the objection that nuclear energy was unreliable, that the systems were so complex that they could never be operated continuously. (The very same people, at least the large subset of them who never bothered to learn how to think – how laughable is this? – never bothered to make the same criticism for their magical views of solar energy, even though solar energy has the lowest capacity utilization of any system for generating electricity.) However these were ignorant people, not engineers who design many complex systems for reliable operation, for example, a Boeing 787 aircraft, or for that matter, an electrical grid. Once the bugs were worked out, as represented by devices like the Fort St. Vrain reactor, for the period beginning at the start of the 1990’s through the present day, nuclear reactors have a demonstrated record of being the devices with the highest capacity utilization of any power generating system, higher than coal, higher than gas, higher than petroleum, higher than hydroelectricity, higher than wind and incredibly higher than solar. Reactors routinely run at slightly more than 100% capacity utilization for periods of over a year. Most are shut down only for relatively brief periods of refueling or for routine maintenance.

A new class of nuclear reactors has been developed, or is under development, that are designed to never require refueling, to simply run until they are decommissioned, that is to run for a period of decades continuously with rare or no shutdowns. These types of reactors are known as “breed and burn” reactors. They are started using a small amount of fissionable material, as a practical matter either U-235 or plutonium with pretty much any isotopic vector, that is any distribution of isotopes. (One might also consider U-233 for this purpose, although it’s not currently readily available in significant quantities and personally, for a number of reasons, I regard plutonium as a superior fuel.) These reactors can run on U-238 as their fuel, so called “depleted uranium,” or even better (since it will generate significant neptunium) the uranium that makes up the bulk of used nuclear fuel. The supplies of depleted uranium already mined and isolated are sufficient to fuel all of humanity’s energy needs for centuries. An example of a “breed and burn” reactor is the Terrapower reactor, funded by Bill Gates: Terrapower Energy Systems and Terrapower Physics. There are several other companies involved in this type of "breed and burn" technology besides Terrapower.

Since "breed and burn" reactors cannot be thermal reactors they have no inherent temperature restrictions, the only limits being the choice of coolant and the materials science associated with containing the coolant and operating at high temperatures.

Regrettably in my view, most of the world’s fast reactors that have been built thus far rely on sodium coolants, which I regard as a bad habit among nuclear designers. Since this is a thought experiment, let me describe the type of reactor I would like to see built, or at least one permutation of such a reactor that I would design for a cerium based carbon dioxide splitting system. All of the reactor designs that have filled my imagination (or to denigrate my thinking a bit, my fantasies) in recent years utilize liquid plutonium metal as a fuel, since old literature dating from the 1960’s describing the operation of the LAMPRE (Los Alamos Molten Plutonium Reactor Experiment) suggests that molten plutonium has the highest breeding ratio known, in the range of 1.5-1.6. I certainly believe that with modern advances in materials science – with many of which I am familiar – this type of reactor can be designed to operate in a “breed and burn” setting. My preferred coolant for this case is liquid strontium metal, since the boiling point, 1377C, of strontium metal is conveniently near the temperature required for the generation of oxygen from CeO2 and can be raised simply by the application of modest pressures. (Other remarkable properties of strontium are that liquid strontium is immiscible with liquid plutonium, and that it is readily available, famously, as a fission product having a low neutron capture cross section. It is also straightforward to remove bulk quantities from water.)

Whatever…

The fact is that there is direct industrial experience with running nuclear reactors at 100% capacity utilization, with the only shutdowns being for the purpose of refueling. Therefore, since there is a clear path forward to eliminating the need for refueling over the lifetime of a reactor, it is reasonable to suggest that a cerium based carbon dioxide splitting device can also run around the clock, 365.24 days a year for many years. Under these conditions it is possible to have 48 cycles per day, or 17,532 cycles per year. In the stoichiometric case the amount of Ce2O3 required to split 1 billion tons of carbon dioxide per year is 425,403 metric tons, of which 363,195 tons represents cerium metal.

Thus the difference between the stoichiometric solar thermal case and the stoichiometric nuclear case is that the nuclear case requires 1/6 the amount of cerium when compared to the solar thermal case. Thus in terms of materials, that is mass efficiency, just as in terms of land use, nuclear energy is vastly superior to solar thermal energy in this case.
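The stoichiometric figures quoted above can be reproduced with a few lines of arithmetic. What follows is only a sketch: it assumes one CO2 split per Ce2O3 formula unit per cycle (CO2 + Ce2O3 → 2 CeO2 + CO), standard atomic masses that I have typed in myself, and the 17,532 cycles per year given above. The function name is my own, and the solar figure simply applies the factor of six stated in the text.

```python
# Stoichiometric cerium inventory for splitting CO2 with a Ce2O3/CeO2 cycle.
# Assumption: each Ce2O3 formula unit splits one CO2 per cycle.

M_CO2 = 44.0095                 # g/mol
M_CE = 140.116                  # g/mol, cerium
M_O = 15.9994                   # g/mol, oxygen
M_CE2O3 = 2 * M_CE + 3 * M_O    # g/mol

def ce2o3_inventory_tonnes(co2_tonnes_per_year, cycles_per_year):
    """Metric tons of Ce2O3 that must be on hand to split the given
    annual mass of CO2 when the oxide is cycled cycles_per_year times."""
    mol_co2_per_cycle = co2_tonnes_per_year * 1.0e6 / M_CO2 / cycles_per_year
    return mol_co2_per_cycle * M_CE2O3 / 1.0e6

nuclear_oxide = ce2o3_inventory_tonnes(1.0e9, 17_532)
nuclear_metal = nuclear_oxide * (2 * M_CE) / M_CE2O3
solar_metal = 6 * nuclear_metal   # factor of six taken from the text

print(f"Ce2O3, nuclear case:     {nuclear_oxide:,.0f} t")
print(f"Ce metal, nuclear case:  {nuclear_metal:,.0f} t")
print(f"Ce metal, solar thermal: {solar_metal:,.0f} t")
```

Small differences in the last digit or two can arise from the choice of atomic masses; the point is only that the quoted tonnages follow directly from the cycle count.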

The quantities of cerium described immediately above, the stoichiometric case, for splitting one billion tons of carbon dioxide per year using this process, just 3% of what we currently dump each year, represent more than 100% of the current annual production of cerium. A recent Russian report, Certain Tendencies in the Rare-Earth-Element World Market and Prospects of Russia (Gasanov et al Russian Journal of Non-Ferrous Metals, 2018, Vol. 59, No. 5, pp. 502–511) reports that the recorded world production for Lanthanides (aka "Rare Earth Elements") as oxides was on the order of 130,000 metric tons, with 30 - 40 tons of additional wildcat illegal mining in China possibly adding to the supply. This report adds that the consumption of these ores is rapidly rising, and may be well above 200,000 metric tons per year by the middle of the next decade. The rising demand for these elements is heavily tied to the quixotic and rapidly failing quest for so called "renewable energy," particularly wind energy, because of the importance of neodymium, and (to a lesser extent) dysprosium, for making magnets. Lanthanum is widely used in batteries for some types of cars having electric drives, particularly hybrid cars.

Cerium is widely used as a catalyst for the oxidation of soot and related substances such as biochar from the destructive distillation of biomass - which might be an important path to removing the dangerous fossil fuel waste carbon dioxide from the air should someone ever get serious about that - and the gasification of biological and dangerous fossil fuel asphaltenes and other tars. Another related use is in self-cleaning ovens. The uses described offer a consoling fact about the carbon dioxide splitting technology, since very often catalysts working on carbon monoxide are poisoned by carbon deposits on their surfaces – surfaces being the business end of catalysts as I’ll discuss below – via a common reaction known as the Boudouard reaction, which is the disproportionation of carbon monoxide into carbon dioxide and elemental carbon, graphite. The Boudouard reaction is an important reaction for many purposes, including the potential to strip carbon dioxide from the air, but in catalytic situations it is problematic. However, since cerium dioxide gasifies carbon back into carbon oxides, this is not likely to be a problem in this case.

In former times these ores were mined for their thorium content. Although thorium is not strictly a lanthanide, it is found with them in many lanthanide ores; its chief uses were as a nuclear fuel - a potential replacement for uranium for these purposes since terrestrial thorium is more common than terrestrial uranium - to make mantles for gas lamps, and to make refractory ceramic crucibles for high temperature applications such as molten metal processing. The tailings from these historic mine operations may contain significant lanthanides. Today the situation has reversed: thorium, a mildly radioactive element and potentially a valuable nuclear fuel, is dumped and the lanthanides are kept.

Besides thorium, there are 14 lanthanides as well as the valuable element yttrium - which is not formally a lanthanide but has nearly identical chemistry to most of them - in common lanthanide ores, which fall generally into three minerals, monazite, bastnaesite, and xenotime. The concentration of the various elements in these ores varies considerably from mineral to mineral and site to site. Bastnaesite and monazite can contain between 40-50% cerium, with lanthanum constituting another 20-30% and neodymium perhaps 15% to 20%. (cf Volker Zepf, Rare Earth Elements, A New Approach to the Nexus of Supply, Demand and Use, Springer, 2013, Table 2.3 page 23.) Xenotime is mined for the "heavy" lanthanides, notably dysprosium, which appear in trace amounts in most bastnaesite and monazite ores, as well as yttrium, which dominates this mineral.

If we generously take 50% as the amount of cerium in produced ores – probably not justified but let’s be optimistic, at least as optimistic (but frankly we are totally out of our minds on this score) as when we bet the planetary atmosphere on so called “renewable energy” – then the annual production of cerium oxide is on the order of 65,000 to 80,000 tons. This is less than 1/6 of the requirement in the stoichiometric nuclear case calculated above, 1/40th the amount required for the solar thermal case, again, for just one billion tons of carbon dioxide, 3% of what we actually dump each year.
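As a rough check on those fractions, here is a sketch that takes the production range just stated (65,000 to 80,000 tons of cerium oxide per year) and compares it with the stoichiometric Ce2O3 requirement of 425,403 tons for the nuclear case and six times that for the solar thermal case. The variable names are mine, and the comparison treats tons of produced oxide and tons of Ce2O3 as directly comparable, which is an approximation.

```python
# Compare annual cerium oxide production with the stoichiometric requirement.
# All inputs are the figures quoted in the text; nothing here is measured.

production_low, production_high = 65_000, 80_000   # t/yr cerium oxide
need_nuclear = 425_403                             # t Ce2O3, nuclear case
need_solar = 6 * need_nuclear                      # t Ce2O3, solar thermal case

def years_of_production(requirement, annual_production):
    """How many years of world output the requirement represents."""
    return requirement / annual_production

for label, need in (("nuclear", need_nuclear), ("solar thermal", need_solar)):
    worst = years_of_production(need, production_low)
    best = years_of_production(need, production_high)
    print(f"{label}: {best:.1f} to {worst:.1f} years of current production")
```

At the lower production figure the nuclear case comes to about six and a half years of output and the solar thermal case to roughly forty, which is where the "1/6" and "1/40th" above come from.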

Cerium is a fission product, so in the nuclear case a certain amount of it is available from the reprocessing of used nuclear fuels. Happily too, at least if the fuel is reprocessed quickly, said cerium is radioactive, owing to the presence, along with stable Ce-140 and Ce-142, of the wonderful Ce-144 isotope, which has a 284 day half-life and decays via Pr-144 to Nd-144. Nd-144 is also slightly radioactive, but is present in all neodymium ores and in fact, in all neodymium containing magnets, including those in our useless wind turbines, owing to its extremely long half-life, 2.29 quadrillion years, vastly longer than the age of the Earth. The radioactivity, and the resulting heat generated, means that this radioactive form might be very useful for isolated uses, particularly the case where the cerium is utilized to gasify asphaltenes and tars from the destructive distillation of biomass, particularly since radiation breaks chemical bonds. But the fact is that the very high energy to mass ratio that makes nuclear energy environmentally superior to all other forms of energy also means that there will never be very much of this material available, certainly not on an appreciable scale compared to the putative demand for carbon dioxide splitting.

However the real situation – not that reality is particularly popular when we discuss climate change – is actually far worse than I’ve described above, since in all of the above I’ve been utilizing the stoichiometric case. In the device pictured at the outset of this post, the actual yield is not stoichiometric.

From the paper:

Thus the molar ratio for the conversion of CO2 to CO and O2 is decidedly not stoichiometric, 1:1 with respect to Ce2O3, for CO and 1:0.5 with respect to CeO2 for oxygen. In the latter case it is 1:0.024 and for the former 1:0.048.

Wow.

This lab scale and thus hardly optimized result raises the cerium requirements by a huge amount. It means that for the nuclear case, the requirement for exactly one billion tons of carbon dioxide to be split would be 7,566,562 metric tons of cerium (as the metal) and that for the solar thermal case, it would be 45,399,372 metric tons also as the metal.
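The scaling from the stoichiometric case to the lab result is a single division. The sketch below assumes the observed yield of 0.048 CO per Ce2O3 formula unit quoted above, the 17,532 cycles per year of the nuclear case, and the factor of six between the solar thermal and nuclear cases. The atomic masses and function name are my own choices for illustration.

```python
# Cerium metal inventory when the per-cycle yield is below stoichiometric.
# Assumptions: 0.048 mol CO per mol Ce2O3 per cycle (lab result quoted in
# the text), 17,532 cycles/year (nuclear), solar thermal = 6 x nuclear.

M_CO2 = 44.0095   # g/mol
M_CE = 140.116    # g/mol

def cerium_metal_tonnes(co2_tonnes_per_year, cycles_per_year, co_per_ce2o3):
    """Metric tons of cerium (as metal) needed in the oxide inventory."""
    mol_co2_per_cycle = co2_tonnes_per_year * 1.0e6 / M_CO2 / cycles_per_year
    mol_ce2o3 = mol_co2_per_cycle / co_per_ce2o3
    return mol_ce2o3 * 2 * M_CE / 1.0e6

nuclear = cerium_metal_tonnes(1.0e9, 17_532, 0.048)
solar = 6 * nuclear

print(f"nuclear case:       {nuclear:,.0f} t Ce")
print(f"solar thermal case: {solar:,.0f} t Ce")
```

Setting `co_per_ce2o3` to 1.0 recovers the stoichiometric figure, so the same function shows how much any improvement in yield would reduce the inventory.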

Despite all of the rhetoric about so called "renewable energy" being "green," the isolation of the lanthanides is a dirty business; I've discussed this elsewhere in this space: Some life cycle graphics on so called "rare earth elements," i.e. the lanthanides and Yet another paper on the external cost of neodymium iron boride magnets. Even if it could be done, any attempt to scale production of cerium to these levels would represent an environmental catastrophe to be sure, since the current demand for lanthanides for so called "renewable energy" and other purposes is, at best, environmentally dubious. Under these conditions, the whole idea of cerium based carbon dioxide splitting is absurd.

It is possible, though, that significant improvements can be made. (It is also true that many other carbon dioxide splitting technologies are known; my favorite is actually a zinc based approach involving gaseous zinc, which involves higher temperatures, at least in the case where my private catalytic thought experiments on reducing these temperatures prove absurd.)

As noted above, heterogeneous catalysis is a surface phenomenon, and the rise of nanotechnology offers some hope of improving these yields if not to the stoichiometric case then much, much closer to it, by simply, albeit technologically challenging, being sure that all of the cerium is on the surface. One avenue that strikes me, perhaps naively, is utilizing a new class of materials known as “polymer derived ceramics,” or PDC’s, a topic of which I became aware owing to my son’s summer job with researchers working in this area. I’m not as familiar with the structural nature of cerium oxides as I am with the structure of plutonium oxides, but as cerium is often used as a surrogate for plutonium in laboratories, I note that one allotrope of PuO2 – which is highly insoluble – is a plutonium/oxygen polymer. Perhaps something similar can be developed for cerium oxides, I don’t know. To the extent that cerium, polymeric or otherwise, can be coated onto a support, only a few molecules deep, the yields can be improved. But however much they are improved, this particular technology will at best offer a minor, not a major, approach to addressing climate change, should we ever get serious about climate change, which we aren’t and probably won’t be, on the right because of denial, and on the left for focusing on technologies that haven’t worked and aren’t working and won’t work.

Let me make one final point, which was mentioned obliquely in the full paper. This device is an energy storage device. Above I wrote that energy storage wastes energy, which is generally true with one important caveat. If the energy stored is obtained from energy that would otherwise be waste itself, typically heat, then the stored energy is recovered, not wasted.

This system operates at very high temperatures, higher than the boiling point of metallic strontium for example. As it happens, metallic vapors have historically been evaluated as working fluids, chiefly the alkali metals potassium, rubidium and even cesium, the latter for a once slightly fashionable technology known as thermionic generators. (Most of what we know about the compatibility of materials with gaseous metals dates from this time.) There is no particular reason that this heat cannot be recovered, and thus store otherwise lost energy at high efficiency. Above I noted that early nuclear reactors operated on a Brayton cycle with carbon dioxide gas as a working fluid. Depending on the structural integrity of a cerium based catalyst operating at high temperatures – something not mentioned in this paper – in particular its integrity with respect to pressure gradients, there is no particular reason that the catalyst could not also double as a heating element, the cooling being generated by the expansion of supercritical (pressurized) carbon dioxide in a Brayton generator, with the reduction taking place as a side product of turning a turbine. To a first and widely taught approximation, the pressure of gases is independent of the nature of the gas, as described in the famous “ideal” gas law: PV = nRT. More elaborate refinements aside, Peng-Robinson, Soave-Redlich-Kwong, etc, etc, etc… it doesn’t matter if the working fluid is pure carbon dioxide or a mixture of carbon dioxide and carbon monoxide. Moreover a hot Brayton gas can be utilized to boil other working fluids, most typically water, but others are possible. This is an industrially utilized process for combined cycle plants that are used in the dangerous fossil fuel industry, making dangerous natural gas more efficiently used, and thus inspiring – the Jevons paradox applies – the rapid growth in this very dangerous technology.

The authors of the paper claim great credit for pointing out that their work has produced high purity (83%) carbon monoxide. They claim this as an advantage since it avoids the separation of the gases before use in synthesis gas, a mixture of hydrogen and carbon monoxide that, theoretically at least, can replace all the major products now obtained from the dangerous fossil fuel petroleum, as well as all major products obtained from the dangerous fossil fuel natural gas. In making the claim, the authors represent that carbon dioxide separations are industrially difficult, but the technology is well known on an industrial scale. Even Exxon, a company that for a long time worked to fund the murder of all future generations via climate change denial, has done considerable research into carbon dioxide separations. This is because mined quantities of dangerous natural gas often contain significant amounts of carbon dioxide which needs to be removed before the dangerous natural gas can be burned and its waste dumped without restriction in our favorite waste dump, the planetary atmosphere on which all living things depend, so Exxon knows very well how to separate carbon dioxide from gas streams. Thus there is no particular reason that the gas needs to be extremely pure; the entire process might well be more efficient if it isn’t. (Nobel Laureate George Olah showed that the synthesis of the world’s best chemical fuel, dimethyl ether, actually requires catalytic amounts of carbon dioxide in the mixture, in order to synthesize this fuel directly (without a methanol intermediate) from synthesis gas.)

By the way, it is worth noting that if one has carbon monoxide, one has access to hydrogen – if and only if – one also has access to water, a case that is problematic in deserts where monstrosities like Ivanpah are located. Almost all of the world’s industrial hydrogen, approximately 99% of it, is obtained in this way from the famous and industrialized “water gas shift reaction:”

H2O + CO <-> H2 + CO2.

While this reaction is not accessible at Ivanpah or similar places, for instance if we intend to cover three Arizona equivalents with this useless and unsustainable junk, it is very much accessible for nuclear plants located on shore lines. (Please don’t hand me any bullshit about Fukushima here; focus on Fukushima is garbage thinking, selective attention that is incredibly toxic.) Very high temperatures make supercritical water accessible, and salts are insoluble in supercritical water as opposed to liquid water, meaning that this is a potential technology for desalination, which from my perspective, given our failure to address climate change, may prove necessary. Seawater also contains concentrated carbon dioxide when compared to the atmosphere, and considerable amounts of biomass – especially when highly polluted as is the case with the Mississippi River Delta owing to eutrophication, the explosion of biomass. All of these represent potentially efficient opportunities for the capture of carbon dioxide from the air, a question that the authors of this paper completely gloss over, “whence the carbon dioxide for the reaction to take place?”

The carbon dioxide in our atmosphere represents dumped entropy - some of the efficiency of the use of dangerous fossil fuels is connected with the dilution of carbon from ordered to disordered states. The reversal of those centuries of dumped entropy represents another huge burden we’ve placed on future generations as we’ve been too lazy to think, too self-absorbed with our consumerist nightmares and horseshit about Tesla cars and the like, to care. The reversal of this accumulated entropy will require additional energy – vast amounts of it – and no, solar thermal plants won’t cut it.

This I realize is a long post, and probably almost no one will read it, but if nothing else, it clarified my thinking on the subject, as well as taught me things, in writing it, and thus was a useful enterprise for my week off for the holidays.

I trust your holidays have been thus far as happy and as rewarding and joyful as mine have been. I wish you a happy, safe, and productive New Year.

Single Molecule Magnets Exploiting the 5f Electrons of Plutonium.

The paper I'll discuss in this brief post is this one: Theoretical Investigation of Plutonium-Based Single-Molecule Magnets (Carlo Alberto Gaggioli and Laura Gagliardi,* Inorg. Chem., 2018, 57 (14), pp 8098–8105).

From a purely theoretical standpoint, plutonium is one of the most interesting elements in the periodic table. Its huge array of electrons and orbitals, traveling around a heavy nucleus and thus requiring the electrons to travel at relativistic speeds, a significant fraction of the speed of light, the shielding these electrons bring, the fact that it has a nearly half filled set of f orbitals and its myriad oxidation states and habit of disproportionation (oxidizing and reducing itself simultaneously), never mind its radiation effects, all combine to produce a real sense of fascination.

A surprising paper, published this year (the paper cited above), suggests that plutonium species are worthy of study because of a potential (but perhaps not practical) use in computer hardware. (Perhaps it can at least elucidate a path to other elements, in particular lanthanides, perhaps cerium.)

Or perhaps not, from the introductory text:

...The actinide elements instead, because of their large spin–orbit coupling and the radial extension of the 5f orbitals, are more promising for the design of both mononuclear and exchange coupled molecules. Indeed, new actinide SMMs have emerged and are already demonstrating encouraging properties.(18−25) The actinides present a non-negligible covalency of the metal–ligand interaction,(26,27) and while covalency offers an advantage for the generation of strong magnetic exchange, it also makes the rational design of mononuclear actinide complexes more challenging than in the lanthanide case.

However, after some very sophisticated computer modeling the authors note that such a mononuclear complex of plutonium has been synthesized. It is here:

The caption:

It is known to exhibit magnetic susceptibility, which the authors explore from a theoretical standpoint with a sophisticated computational analytical method:

The electronic structures were further characterized using multireference methods. The wave functions were optimized at the complete active space self-consistent field (CASSCF)(38) level of theory. All-electron basis sets of atomic natural orbital type with relativistic core corrections (ANO-RCC) were used,(63) employing a triple-ζ plus polarization basis set for Pu (VTZP) and double-ζ plus polarization basis set for the other atoms (VDZP). The resolution of identity Cholesky decomposition (RICD)(64) was employed to reduce the time for the computation of the two-electron integrals. Scalar relativistic effects were included by means of the Douglas–Kroll–Hess Hamiltonian.(65)

The smallest active space employed in this work is a CASSCF(5,7) active space, meaning five electrons and seven orbitals, which takes into account all the configuration state functions (CSFs) arising from the distributions of the five electrons of Pu(III) in the seven 5f orbitals. This active space gives rise to 21 sextet, 224 quartet, and 490 doublet roots. We further examined an enlarged active space [CASSCF(5,12)], in which we included the five unoccupied 6d orbitals of plutonium, to account for possible low-energy 5f to 6d excitations...

...We then performed a multistate CASPT2 calculation (MS-CASPT2)(66) using an IPEA shift of 0.25 and an imaginary shift of 0.2 atomic unit on a selected set of states (vide infra). Spin–orbit (SO) coupling effects were estimated a posteriori by using the RAS state interaction (SO-RASSI) method.(67)

Finally, we computed the magnetic susceptibilities with the SINGLE-ANISO code,(68−70) which requests as input energies (ε ) and magnetic moments (μ ) of the spin–orbit states obtained from the RASSI calculation and uses them in an equation based on the van-Vleck formalism (eq 1).

The magnetic susceptibility is a function of temperature and arises from the sum over the spin states (i,j) of the magnetic moments weighted by the Boltzmann population of each state. The magnetic moments are calculated by applying the magnetic moment operator in the basis of multiplet eigenstates.(71) The magnetic moment operator reads

where ge (=2.0023) is the free spin g factor, μB is the Bohr magneton, and Ŝ and L̂ are the operators of the total spin momentum and the total orbital momentum, respectively.

The summations run over all electrons of the complex.

For compound 1, the calculations described above were also performed at the experimental geometry to confirm that the two different geometries give similar results.

It might be lots of jargon, but it gives a feel for the task...
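One piece of that jargon can be checked by hand. The counts of 21 sextet, 224 quartet, and 490 doublet roots for five electrons in seven orbitals match the Weyl dimension formula for the number of spin-adapted configuration state functions. The authors do not say how they arrived at their counts, so the sketch below is only my own cross-check, and the function name is hypothetical.

```python
# Number of configuration state functions (CSFs) for N electrons in n
# orbitals with total spin S, from the Weyl dimension formula:
#   D = (2S+1)/(n+1) * C(n+1, N/2 - S) * C(n+1, N/2 + S + 1)
# Spin is passed as 2S so that all arguments stay integers.
from fractions import Fraction
from math import comb

def count_csfs(n_orbitals, n_electrons, two_s):
    """Return the number of CSFs with spin S = two_s / 2."""
    if two_s < 0 or two_s > n_electrons or (n_electrons - two_s) % 2:
        return 0
    lower = (n_electrons - two_s) // 2        # N/2 - S
    upper = (n_electrons + two_s) // 2 + 1    # N/2 + S + 1
    dim = (Fraction(two_s + 1, n_orbitals + 1)
           * comb(n_orbitals + 1, lower)
           * comb(n_orbitals + 1, upper))
    return int(dim)

# CASSCF(5,7): five 5f electrons of Pu(III) in the seven 5f orbitals.
for name, two_s in (("sextet", 5), ("quartet", 3), ("doublet", 1)):
    print(name, count_csfs(7, 5, two_s))
```

As a consistency check, weighting each count by its spin multiplicity (6, 4, and 2) and summing gives 2002, the number of ways to place five electrons in fourteen spin orbitals.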

The authors determine the energies of various electronic states, which is described in the following graphic:

The caption:

And the point of the calculation, the magnetic susceptibility:

The caption:

The success of a model really depends on how closely it matches experiment.

The authors comment:

But in their conclusion the authors note that they are on the path to success:

It will be interesting to see if someone is able to synthesize the improved complex they predict will be superior.

It is difficult to imagine that plutonium based computer hardware will ever be practical of course; the reason for doing this work, besides testing theory that might be useful in other areas with other elements, is that it extends pure knowledge; it causes us to stretch our scientific intellectual muscles and inspires wonder.

Of course, in the abstract, one notes that plutonium has at least two isotopes with very long half-lives, and thus lower radioactivity, that one might imagine might be sufficiently stable to build such a device, Pu-242 and Pu-244. The latter isotope has a half-life of 80 million years. However Pu-244 is very difficult to access. Other than atom-by-atom synthesis in an accelerator, the generation of this isotope is extremely complex, particularly if one is seeking high isotopic purity. It would involve the long term irradiation of pure americium, with the goal of displacing as much as possible of the lower readily available isotope, Am-241, with the higher isotope, Am-243. This would require periods of irradiation punctuated by separations to remove resultant Pu-238 (from the decay of Cm-242) and Pu-242 (from the electron capture branch of the decay of Am-242), until reasonably pure Am-243 resulted for a final irradiation. Pu-244 arises from one branch (the minor branch, about 1%) of the decay of Am-244. The separation would need to take place fairly early, since the other isotope produced from the decay of Am-244, Cm-244, decays with an 18 year half life to a contaminating isotope (and neutron emitter) Pu-240.

...Not worth it I think.

Nevertheless, the paper itself was worth a read, because it's fun, and interesting, and it expands one's mind.

I hope your New Year's plans are proceeding nicely. Have fun and be safe.

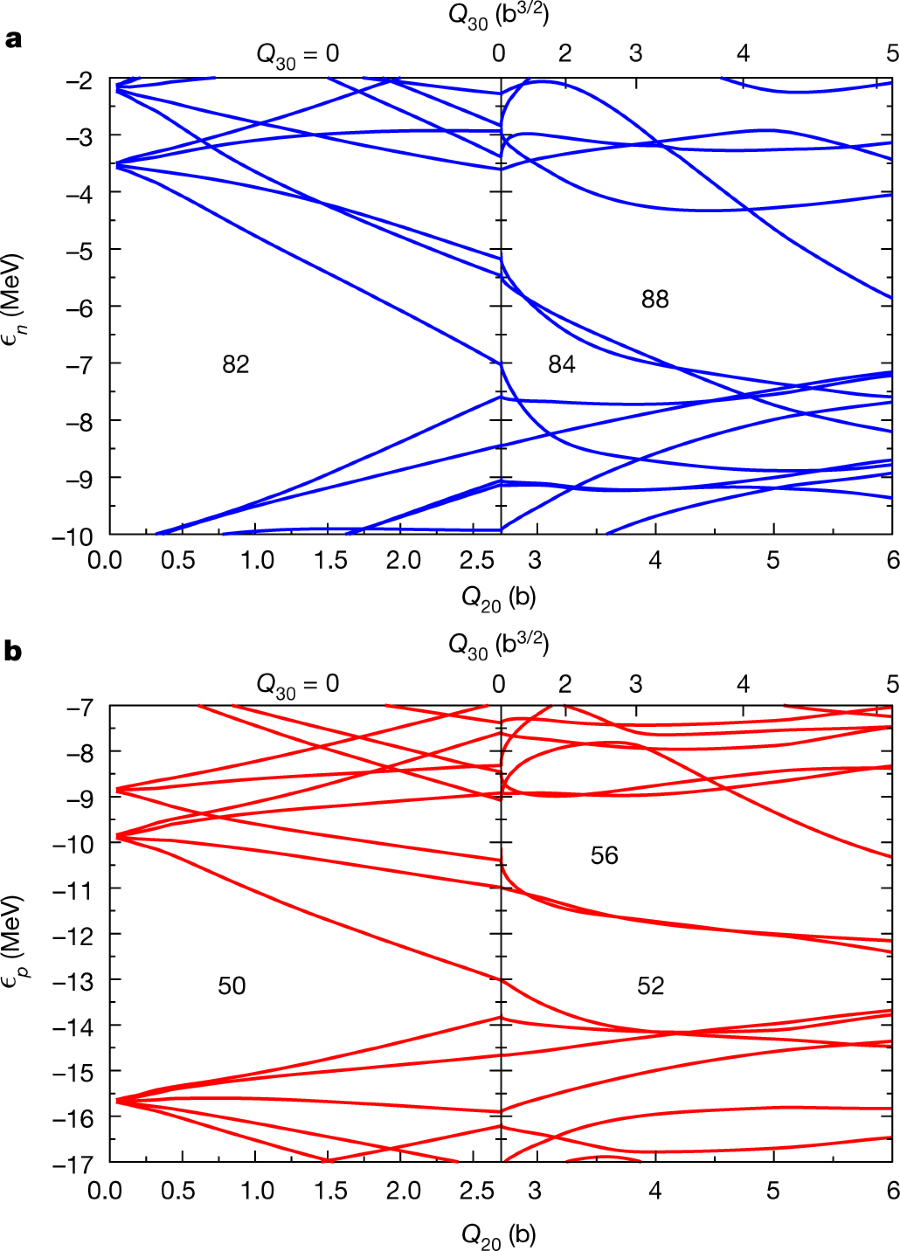

Theoretical Analysis of the Observed Asymmetry in the Fission of Actinides.

The paper I'll discuss in this post is this one: Impact of pear-shaped fission fragments on mass-asymmetric fission in actinides (Guillaume Scamps & Cédric Simenel, Nature 564, 382–385 (2018))

Used nuclear fuel is very interesting stuff purely from a chemist's perspective because of the wide distribution of elements in it. This, in my view, is a good thing, because it allows the recovery of lots of elements with potential for utilization in the solution of extremely important problems. For example, it has been argued - I've referred to this often in places I've written on the internet - that the world supply of the valuable and technologically important element rhodium available from used nuclear fuel will shortly exceed that available from ores. (cf. Electrochimica Acta 53, 6, 2008, Pages 2794-2801) The exactly symmetric fission of plutonium would give an isotope of silver with a mass number of 118 or 119 which would rapidly decay - owing to a large neutron excess - to a stable isotope of tin, 118Sn. Tin is a useful element, but nowhere near as valuable as rhodium.

The fission product distribution (with mass numbers for the abscissa) for plutonium-239 is shown here:


I've been thinking about used nuclear fuels - "nuclear waste" in the parlance of people with far too limited imaginations - for a very long time, but I never paused to think about why the actinide elements - at least up to element 100, Fermium which does almost perfectly undergo symmetric fission - fission in an asymmetric fashion.

The paper cited at the outset addresses this issue.

From the abstract:

From the paper's introduction:

Although progress has been made recently in describing fission-fragment mass distributions with stochastic approaches9,10, theoretical description of the first stage of fission, from the ground-state deformation to the fission barrier, remains a challenge11. However, the study of the dynamics along the fission valleys is now possible with the time-dependent energy-density functional approach12,13 including nuclear superfluidity7,14,15

Heavy nuclei have long been treated as fluids, beginning with the work of Niels Bohr and George Gamow who described heavy nuclei using the "liquid drop" model. This model was used by Lise Meitner and her nephew Otto Frisch to discover nuclear fission using the laboratory results of Otto Hahn. In this model, these drops deform, and sometimes they deform so much that the electrostatic repulsion of protons in their nuclei overcomes the very locally acting strong nuclear force.

From the paper:

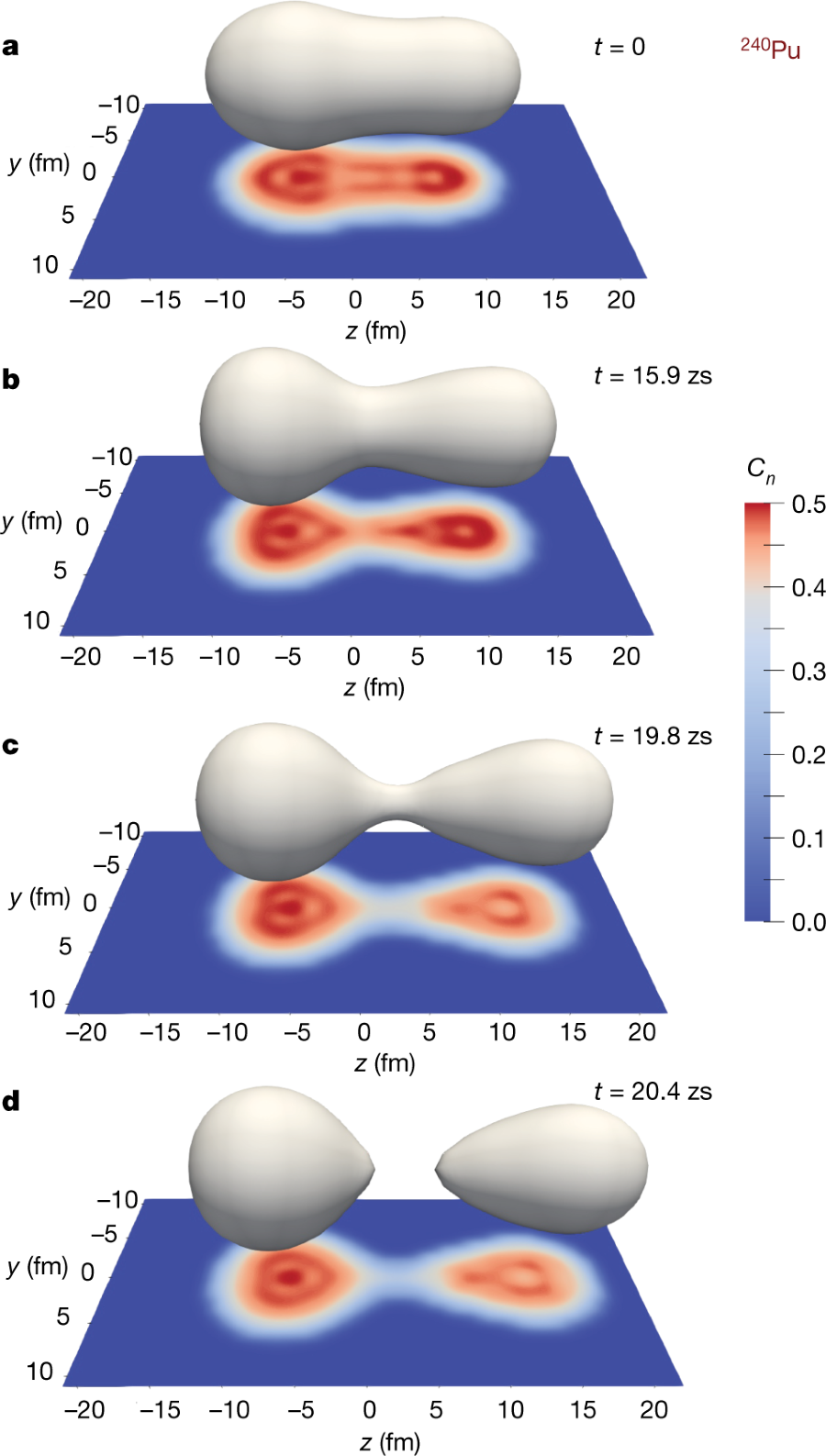

Figure 1:

The caption:

The authors use certain computational quantum mechanical calculation programs (Hartree-Fock and DFT type calculations often used in the study of fermion - electron - interactions), here applied to the alternate quantum mechanical system, Bosons, dictated by the Bose Einstein statistics applied to at least some nuclei.

To wit:

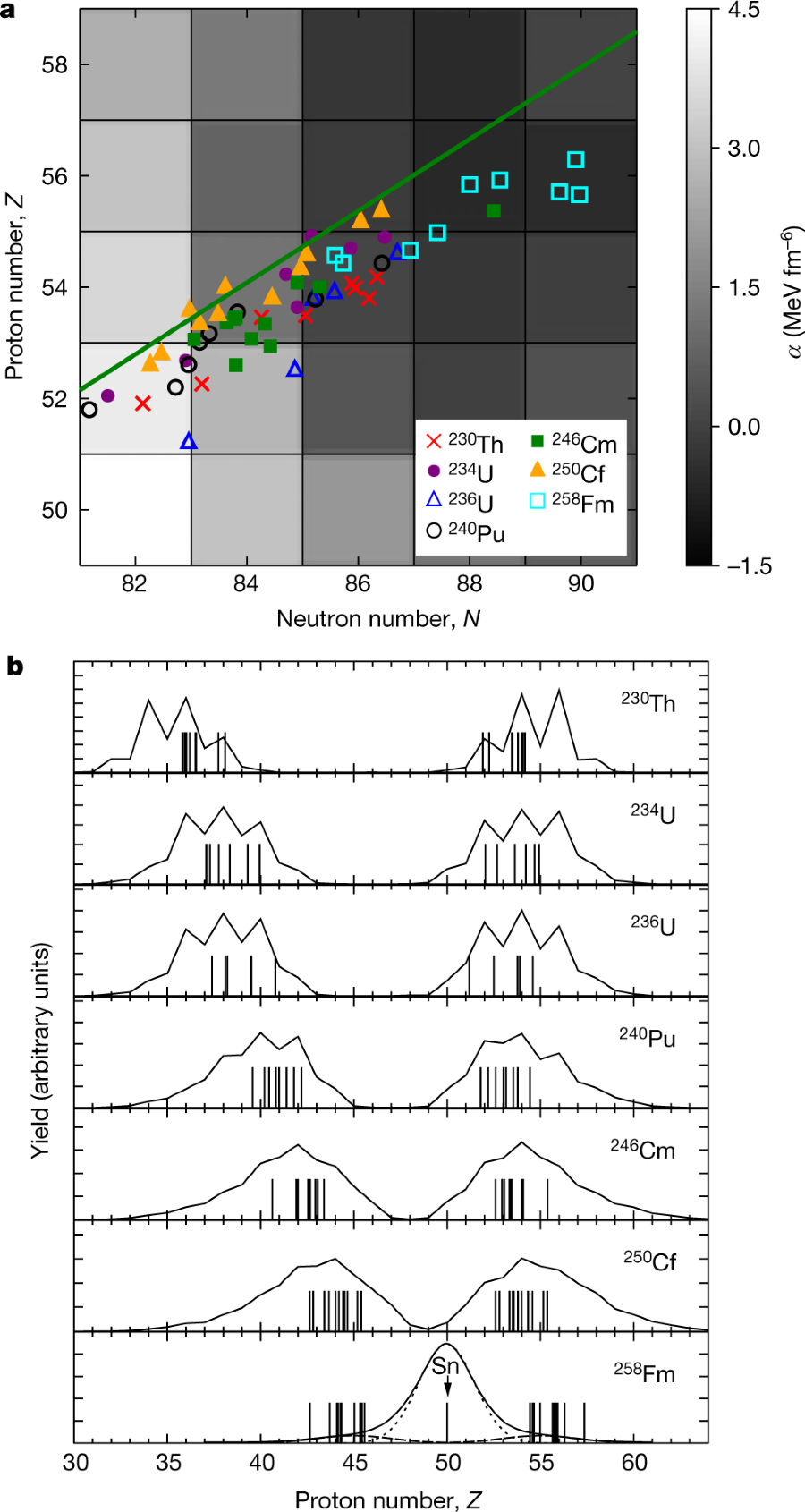

The calculated distribution of fission products in the heavy elements:

The caption:

The authors write about the interplay of the two fundamental forces at play in atomic nuclei:

The strong attractive nuclear force between the fragments is responsible for the neck (see Fig. 1b, c), inducing quadrupole (cigar-shaped) and octupole (pear-shaped) deformations of the fragments. Although quadrupole deformation is often taken into account in modelling fission17, octupole deformation is also important for describing scission configurations properly18. The neutron localization function19 Cn (see Methods), shown as projections in Fig. 1, also exhibits strong octupole shapes. The localization function is often used to characterize shell structures in quantum many-body systems such as nuclei20,21 and atoms21.

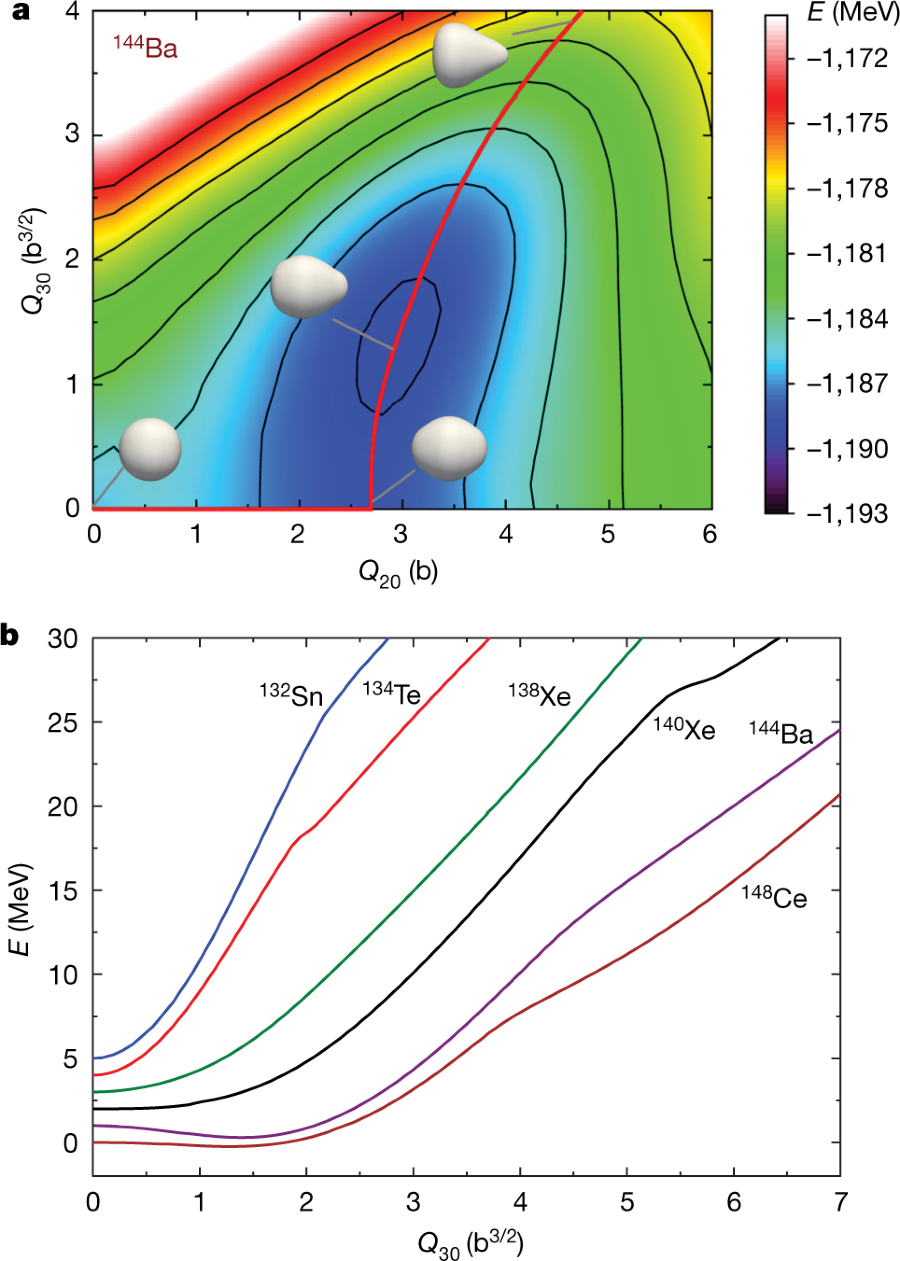

They discuss some properties of one of the prominent fission fragments, Ba-144.

144Ba is an important fission product, since it gives rise through rapid beta decays to the interesting and potentially very useful radioactive isotope 144Ce, which has a moderately long half life of 284 days, meaning that it is possible to isolate it for use before it decays into 144Nd, the quasi-stable naturally occurring isotope found in all samples of neodymium, an element that plays an important role in some types of magnets utilized for the somewhat absurd pretenses of giving a rat's ass about climate change represented by electric cars.

One of the graphics refers to computations involving 144Ba:

The caption:

Here's another graphic touching on the relative stability of fission products, including 144Ba, with respect to fission products.

The caption:

Some concluding remarks from the authors:

This paper was a wonderful Christmas gift to me resulting from my birthday present from my wife, a subscription to Nature (meaning I can read this journal any time without dragging my fat ass to an open library.)

Whether we figure it out or not in any kind of time to save what still might be saved, our understanding (and use) of nuclear fission is our last, best hope.

I hope your Christmas Eve and Christmas day will prove as wonderful as my holidays have been thus far.

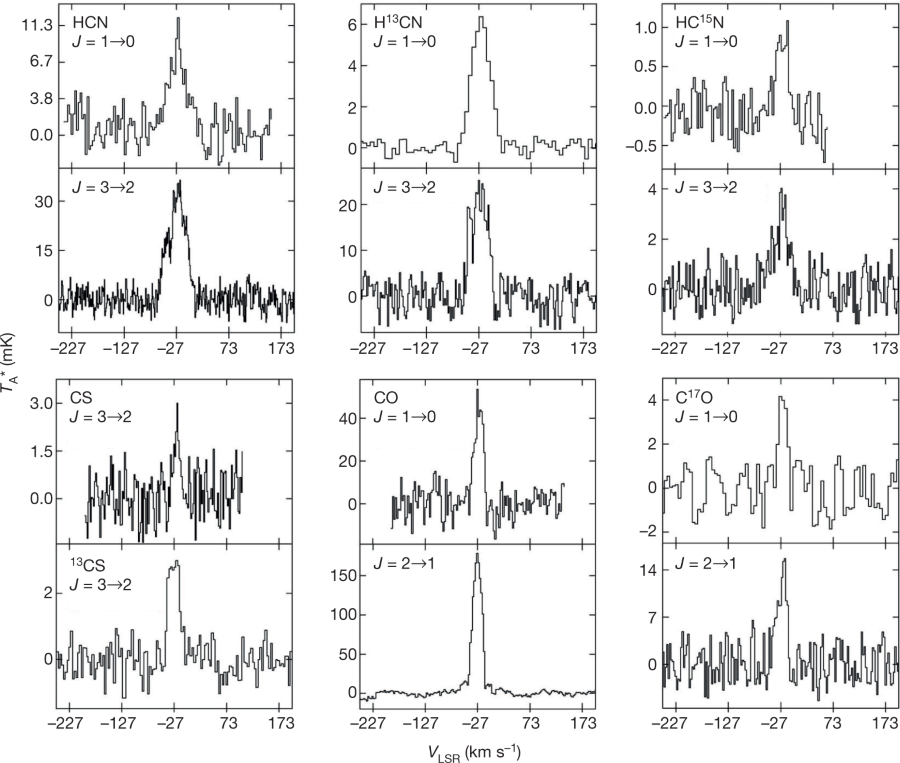

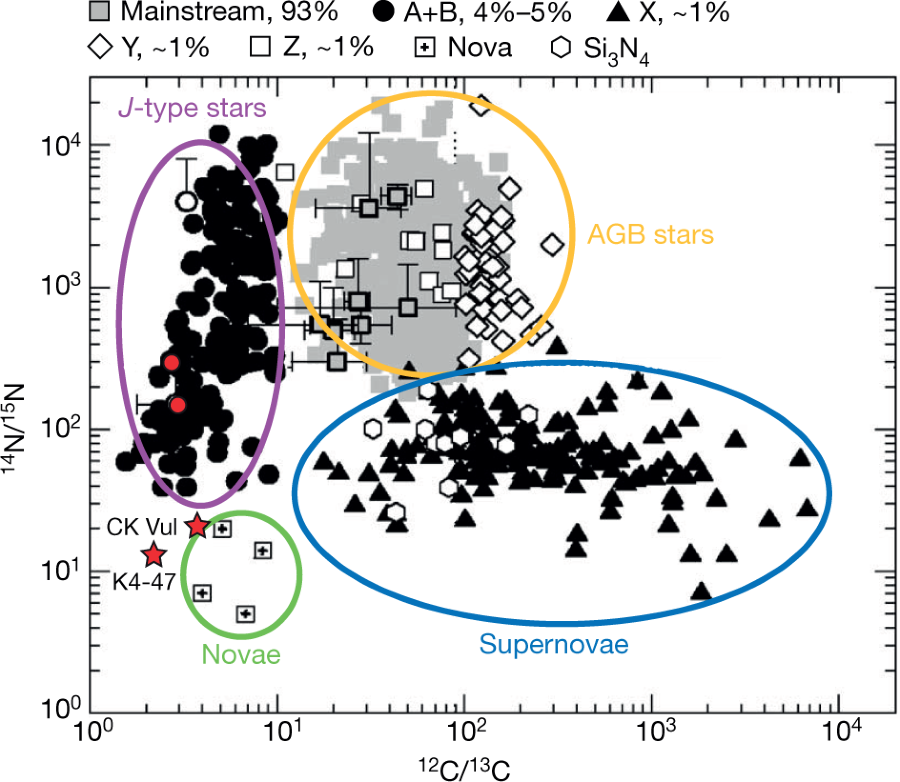

Rare Carbon, Nitrogen, and Oxygen Isotopes Appear Abundant in a Young Planetary Nebula.

The paper I'll discuss in this post is this one: Extreme 13C,15N and 17O isotopic enrichment in the young planetary nebula K4-47 (Ziurys et al Nature 564, 378–381 (2018))

Some background:

Carbon has two stable isotopes, 12C and 13C; Nitrogen has two as well, 14N and 15N; Oxygen has three, 16O, 17O, and 18O.

All of the atoms in the universe except for hydrogen, a portion of its helium and a fraction of just one of lithium’s isotopes, 7Li, have been created by nuclear reactions after the “big bang.” The majority of these reactions took place in stars, with some important exceptions: Lithium’s other isotope, 6Li, practically all of the beryllium in the universe, and all of its boron. (Lithium, beryllium, and boron are not stable in stars, and all three are rapidly consumed in them; they all exist because of nuclear spallation reactions driven by cosmic rays in gaseous interstellar clouds.)

For the uninitiated, writing a nuclear reaction in the format 14N(n,p)14C means that a nucleus, in this case nitrogen’s isotope with a mass number of 14, is struck by a neutron (n) and as a consequence ejects a proton (p) to give a new nucleus, carbon’s radioactive isotope having a mass number of 14.

In the case of carbon, the nuclear reaction just described has been taking place in Earth’s atmosphere ever since that atmosphere formed with large amounts of nitrogen gas in it. Thus a third, radioactive isotope of carbon occurs naturally from the 14N(n,p)14C reaction in the atmosphere as a result of the cosmic ray flux from deep space and protons flowing out of the sun. However, since carbon 14 is radioactive and since the number of radioactive decays depends proportionately on the number of atoms that exist, it eventually reaches a point at which it is decaying as fast as it is formed. We call this “secular equilibrium.” The long term secular equilibrium at which carbon 14 is formed in the atmosphere at the same rate at which it is decaying is the basis of carbon dating. (This secular equilibrium has been disturbed by the input of 14C as a result of nuclear weapons testing.) Even with the injection of 14C as a result of nuclear testing, 14C remains nonetheless extremely rare and for most purposes other than dating, can be ignored, except perhaps by radiation paranoids.

In the case of all three elements mentioned at the outset, the isotopic distribution in the immediate area of our solar system is dominated in each case by a single isotope: On Earth Carbon is 98.9% 12C; Nitrogen is 99.6% 14N; Oxygen is 99.8% 16O. The abundances vary only very slightly in the sun.

An interesting nuclear aside: 14N has a very unusual property: It is one of very few nuclides that are stable while having both an odd number of neutrons and an odd number of protons; the only others are 2H, 6Li, and 10B. (I originally wrote that it was the only one; note the correction by a clear thinking correspondent in the comments below.)

In recent years, I've been rather entranced by the fissionable actinide nitrides, in particular the mononitrides of uranium, neptunium and plutonium, and their interesting and likely very useful properties, and in this sense I've been sort of wistful over the low abundance of 15N in natural nitrogen. In an operating nuclear reactor, with a high flux of neutrons - in the type of reactors I think the world needs, fast neutrons - the same nuclear reaction that takes place in the upper atmosphere, the 14N(n,p)14C reaction, takes place. Thus the inclusion of the common isotope of nitrogen in nitride nuclear fuels will result in the accumulation of radioactive carbon-14. In this case, given 14C’s long half-life, around 5,700 years – much longer than the lifetime of a nuclear fuel – secular equilibrium will not occur while the fuel is being used.
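To put a rough number on how far from equilibrium the fuel stays, here is a minimal sketch. It assumes carbon-14 is produced at a constant rate and is lost only by decay, which is a simplification and not a fuel model. The function name and the sample durations are illustrative choices. The half-life is the approximate value quoted above.

```python
import math

C14_HALF_LIFE_YEARS = 5700.0  # approximate value quoted in the text


def fraction_of_equilibrium(years, half_life=C14_HALF_LIFE_YEARS):
    """Fraction of the equilibrium inventory reached after `years`.

    Assumes constant production and loss by decay only, so that
    N(t) / N_equilibrium = 1 - exp(-lambda * t).
    """
    decay_constant = math.log(2) / half_life
    return 1.0 - math.exp(-decay_constant * years)


# Illustrative durations: a few fuel lifetimes and a few half-lives.
for years in (10, 60, 5700, 57000):
    print(f"{years:>6} years: {fraction_of_equilibrium(years):.4f} of equilibrium")
```

Under these assumptions a fuel that runs for about sixty years reaches less than one percent of the equilibrium inventory, while one half-life gets exactly halfway there.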

Personally this doesn't bother me, since it avoids the unnecessary expense (in my view) of isolating nitrogen’s rare isotope, 15N, and because carbon-14 has many interesting and important uses already. Carbon-14’s nuclear properties are also excellent for use in carbide fuels, inasmuch as it has a trivial neutron capture cross section compared to carbon's two stable isotopes and, without reference to the crystal structure of actinide nitrides and mean free paths therein, and without reference to the scattering cross section of the nuclide (which I don't have readily available), it takes 15% more collisions for 14C to moderate (slow down) fast neutrons from 1 MeV (the order of magnitude at which neutrons emerge during fission) to thermal (0.0253 eV) energies than it takes for carbon’s common isotope, 12C. (Cf, Stacey, Nuclear Reactor Physics, Wiley, 2001, page 31.) Thus 14C is a less effective moderator, and thus has superior properties in the "breed and burn" type reactors I favor, reactors designed to run for more than half a century without being refueled, reactors designed to run on uranium’s most common isotope, 238U, rather than the rare isotope, 235U, utilized in most nuclear reactors today. Over the many centuries it would take to consume all of the 238U already mined and sometimes regarded as so-called “nuclear waste,” access to industrial amounts of carbon-14 might well prove very desirable for the purposes of neutron efficiency.
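The collision count can be checked with the standard textbook expression for the mean logarithmic energy loss per elastic collision. The sketch below assumes isotropic elastic scattering, uses the mass number as a stand-in for the nuclear mass ratio, and takes thermal energy as 0.0253 eV. The function names are mine, not Stacey's.

```python
import math


def mean_log_energy_decrement(mass_number):
    """Average loss in ln(E) per elastic collision (xi), valid for A > 1."""
    a = mass_number
    return 1.0 + ((a - 1) ** 2 / (2 * a)) * math.log((a - 1) / (a + 1))


def collisions_to_slow(mass_number, e_start_ev=1.0e6, e_end_ev=0.0253):
    """Average number of collisions to slow a neutron from e_start to e_end."""
    return math.log(e_start_ev / e_end_ev) / mean_log_energy_decrement(mass_number)


n12 = collisions_to_slow(12)
n14 = collisions_to_slow(14)
print(f"12C: about {n12:.0f} collisions")
print(f"14C: about {n14:.0f} collisions")
print(f"ratio 14C/12C: {n14 / n12:.3f}")
```

The ratio depends only on the two mass numbers, not on the start and end energies, and comes out at roughly 15 to 16 percent more collisions for 14C.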

Anyway...

The dominance of the major isotopes of carbon, nitrogen, and oxygen in our local solar system and in much of the universe is a function of stellar synthesis. Stars somewhat more massive than our sun run chiefly on the CNO cycle, in which the nuclear fusion of hydrogen into helium takes place catalytically rather than directly; in the sun itself the cycle operates, but supplies only a small share of the energy output.

Here's a picture showing the CNO cycle pathways:

Six of the nuclei in this diagram are stable: the aforementioned 12C, 13C, 14N, 15N, 16O, and 17O. However, only three of them occur in other pathways: 12C, 14N, and 16O. When a main sequence star is very old and has consumed nearly all of its hydrogen, the only nucleus left to "burn" is 4He. The problem is that 4He has much higher binding energy than its nearest neighbors, including putative beryllium isotopes. Here is the binding energy curve for atomic nuclei, the higher points being the more stable with respect to the lower points:

Helium-4's anomalous stability prevents the formation of the putative isotope beryllium-8. Observation of this isotope of beryllium is almost impossible since its half-life is on the order of a hundred attoseconds, and it cannot actually form in stars. This is why 12C is a critical element in the pathway to the existence of all heavier elements. It forms from the simultaneous fusion of three helium nuclei, and exists because it is more stable than helium-4. This is exactly what happens in dense stars when they have run low or out of hydrogen and only have helium left to burn. Carbon-12 can fuse with helium-4 to form oxygen-16. In addition, it can fuse with residual deuterium (2H) under these circumstances to form nitrogen-14. (However, in the helium burning phase deuterium, which forms from the p(p,γ)d reaction, where d is a deuterium nucleus, is also relatively depleted.) Thus the formation of these isotopes is independent of the CNO cycle. As a result, it turns out that after hydrogen and helium, which together account for 98% of the universe’s elemental mass, oxygen and carbon are respectively the third and fourth most common elements. These four elements comprise 99.5% of the elemental mass of the universe. All other elements, with nitrogen included, turn out to be minor impurities in the universe as a whole.
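The energetics behind this can be sketched from mass differences alone. The atomic masses below are rounded values from standard tables, which I am assuming here, and the helper name is illustrative. A negative result means the product is heavier than its ingredients and so is unbound with respect to them.

```python
U_TO_MEV = 931.494  # energy equivalent of one atomic mass unit, in MeV

# Rounded atomic masses in unified atomic mass units (assumed table values).
MASS_HE4 = 4.002603
MASS_BE8 = 8.005305
MASS_C12 = 12.000000


def energy_released_mev(reactant_masses, product_mass):
    """Mass difference between reactants and product, expressed in MeV."""
    return (sum(reactant_masses) - product_mass) * U_TO_MEV


q_be8 = energy_released_mev([MASS_HE4, MASS_HE4], MASS_BE8)
q_c12 = energy_released_mev([MASS_HE4] * 3, MASS_C12)

print(f"2 x 4He -> 8Be : {q_be8:+.3f} MeV")
print(f"3 x 4He -> 12C : {q_c12:+.3f} MeV")
```

With these inputs, two helium-4 nuclei are slightly lighter than beryllium-8, so beryllium-8 falls apart, while three helium-4 nuclei are heavier than carbon-12 by several MeV, so carbon-12 is bound.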

The minor isotopes in the CNO cycle are actually consumed in stars in this model, and to the extent that they exist, they simply raise the catalytic rate of hydrogen consumption.

All of this is the “understanding,” at least.

According to the authors of the paper cited at the outset, however, there seem to be other things going on in the universe, places where these reactions and their effects do not dominate. The authors are studying, at microwave and other frequencies, a planetary nebula that is estimated to be only 400-900 years old.

From the introductory text in the paper:

From the abstract of the paper, touching on the unusual nature of what the authors are seeing:

... These results suggest that nucleosynthesis of carbon, nitrogen and oxygen is not well understood and that the classification of certain stardust grains must be reconsidered.

They describe in the body of the paper the molecules they find that allow them to identify the isotopes from the frequencies of their rotational transitions, which are affected by the masses of the atoms of which the molecules are constructed. (In the paper the techniques for the sensitive detection of these frequency variations are described.)
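To illustrate how atomic mass shifts a rotational line, here is a rough sketch using carbon monoxide. CO is my choice of example and is not taken from the text above. The sketch treats the molecule as a rigid rotor, so the line frequency scales inversely with the reduced mass. The 115.271 GHz reference frequency for the lowest rotational line of 12C16O and the rounded atomic masses are assumed standard laboratory values.

```python
# Rounded atomic masses in unified atomic mass units (assumed table values).
MASS_C12 = 12.000000
MASS_C13 = 13.003355
MASS_O16 = 15.994915

# Assumed laboratory frequency of the J = 1 -> 0 line of 12C16O, in GHz.
FREQ_12C16O_GHZ = 115.271


def reduced_mass(m1, m2):
    """Reduced mass of a diatomic molecule."""
    return m1 * m2 / (m1 + m2)


def scaled_line_frequency(ref_freq_ghz, ref_masses, new_masses):
    """Rigid-rotor estimate: frequency is proportional to 1 / reduced mass."""
    return ref_freq_ghz * reduced_mass(*ref_masses) / reduced_mass(*new_masses)


freq_13c16o = scaled_line_frequency(
    FREQ_12C16O_GHZ, (MASS_C12, MASS_O16), (MASS_C13, MASS_O16)
)
print(f"estimated 13C16O J = 1 -> 0 line: {freq_13c16o:.2f} GHz")
```

In this approximation, swapping 12C for 13C moves the line by several GHz, far more than the width of the line, which is why the isotopologues can be told apart.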

Figure 2:

The caption:

Some further remarks:

A note on the rarity of this finding:

Aside from K4-47 and CK Vul, similarly low 12C/13C, 14N/15N and 16O/17O ratios have been found in presolar grains—small, 0.1–20-μm-sized particles extracted from meteorites28. These grains are known to predate the Solar System and originate in the circumstellar envelopes of stars that have long since died.

There is some discussion of the current theories of the origins of presolar grains, comprised largely of silicon carbide, thought to originate from "AGB" (Asymptotic giant branch) stars.

A graphic on this topic from the paper:

The caption:

Some final comments from the authors before technical discussions of methods:

50 years after the "Earth-rise" picture from Apollo 8 gave us a sense of our planetary fragility coupled with its magnificence, the rise of intellectually deficient, self absorbed fools, of which the asinine criminal Donald Trump is just one example, has threatened all that lives on that jewel planet first photographed from the orbit of the moon.

One feels the tragedy.

But the universe goes on, and for me, in this holiday season, it is good to feel its eternity, the beautiful facts that have no reason to be found other than that they are beautiful. In the grand scheme, I'm not sure we matter.

I wish you the best holiday season, and the peace that I found in this little paper, and that I wish that you, in your own place and own way, will similarly find.

If the 25th amendment is not activated under these circumstances, it may as well not have been...

...written.

It reads:

Section 1.

In case of the removal of the President from office or of his death or resignation, the Vice President shall become President.

Section 2.

Whenever there is a vacancy in the office of the Vice President, the President shall nominate a Vice President who shall take office upon confirmation by a majority vote of both Houses of Congress.

Section 3.

Whenever the President transmits to the President pro tempore of the Senate and the Speaker of the House of Representatives his written declaration that he is unable to discharge the powers and duties of his office, and until he transmits to them a written declaration to the contrary, such powers and duties shall be discharged by the Vice President as Acting President.

Section 4.

Whenever the Vice President and a majority of either the principal officers of the executive departments or of such other body as Congress may by law provide, transmit to the President pro tempore of the Senate and the Speaker of the House of Representatives their written declaration that the President is unable to discharge the powers and duties of his office, the Vice President shall immediately assume the powers and duties of the office as Acting President.

Thereafter, when the President transmits to the President pro tempore of the Senate and the Speaker of the House of Representatives his written declaration that no inability exists, he shall resume the powers and duties of his office unless the Vice President and a majority of either the principal officers of the executive department or of such other body as Congress may by law provide, transmit within four days to the President pro tempore of the Senate and the Speaker of the House of Representatives their written declaration that the President is unable to discharge the powers and duties of his office. Thereupon Congress shall decide the issue, assembling within forty-eight hours for that purpose if not in session. If the Congress, within twenty-one days after receipt of the latter written declaration, or, if Congress is not in session, within twenty-one days after Congress is required to assemble, determines by two-thirds vote of both Houses that the President is unable to discharge the powers and duties of his office, the Vice President shall continue to discharge the same as Acting President; otherwise, the President shall resume the powers and duties of his office.

This "President" has never been able to discharge his duties as President.

He is also clearly guilty of "high crimes and misdemeanors," as he is clearly in the employ of a foreign autocrat.

Is there one, or two, or three patriots left in the Republican party?

Anyone?

None?

A Bunch of Artists Working with Uranium Based Media Have All Died.

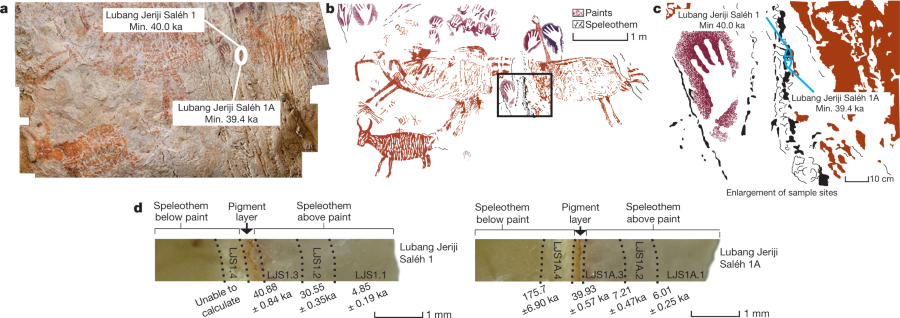

The paper I'll discuss in this post is this one: Palaeolithic cave art in Borneo

(Aubert et al, Nature 564, 254–257 (2018) ).

From the abstract:

Apparently, this is the oldest known art on Earth. Let's cut to the chase and take a look at the art from a figure in the paper:

The caption:

This art was discovered in a remote area featuring very challenging access:

Don't worry about those "densely forested" mountain chains, by the way. We'll kill the forests soon enough, if not by logging, then by climate driven fire. Once it's cleared you'll be able to drive your Tesla SUV right up to them.

If, by the way, you actually want to review some of this oldest art on Earth, you should click on the link to the paper. The paper itself is behind a paywall, but I believe the supplementary Extended Data and Supplemental files are open sourced and you can take a look.

One may also learn about the chemical composition of these pigments in these files. In particular, one can learn the isotopic signature of the uranium, natural thorium, and thorium-230, the decay product of uranium-238. What matters is the ratio of thorium-230 to uranium and its distance from the secular equilibrium point, the point at which thorium-230 in a particular sample is decaying at the same rate as it is being formed. The distance from this equilibrium point gives the age of the sample. The concentrations of uranium are on the order of parts per million, and the thorium-230 on the order of parts per trillion. The lower decay products are probably not useful to look at, since the caves surely have been leaching radon gas since they formed.
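A stripped-down version of this age calculation can be sketched as follows. It assumes a closed system that started with no thorium-230 and with uranium-234 in equilibrium with uranium-238. These are simplifications of mine, and a published age determination would apply further corrections. The half-life is an approximate value and the function name is illustrative.

```python
import math

TH230_HALF_LIFE_YEARS = 75_600.0  # approximate value, assumed for this sketch


def uth_age_years(activity_ratio, half_life=TH230_HALF_LIFE_YEARS):
    """Age from the 230Th/238U activity ratio under the stated assumptions.

    The ratio grows as 1 - exp(-lambda * t), so the age is
    t = -ln(1 - ratio) / lambda. A ratio of 1 is secular equilibrium.
    """
    if not 0.0 <= activity_ratio < 1.0:
        raise ValueError("activity ratio must be in [0, 1) to give a finite age")
    decay_constant = math.log(2) / half_life
    return -math.log(1.0 - activity_ratio) / decay_constant


# Hypothetical ratios, chosen only to show how the age scales.
for ratio in (0.1, 0.3, 0.5):
    print(f"activity ratio {ratio:.1f}: about {uth_age_years(ratio):,.0f} years")
```

A ratio of 0.5 corresponds to exactly one thorium-230 half-life. As the ratio approaches 1 the sample is too close to equilibrium for the method to resolve its age.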

Other common dating techniques using natural radionuclides are, of course, carbon-14 (which has moved from its previous equilibrium point owing to nuclear testing in the 20th century) and rubidium/strontium 87 for very old rocks. Certain lanthanides can also be utilized in this way, since many of the natural lanthanides contain significant amounts of radioactive isotopes.

Here, from the full paper's text, is a description of these determinations:

Beautiful analytical chemistry...

The pigments themselves are actually not uranium based, by the way, except to the extent that the carbonates therein carried leaching uranium. The pigments, most of which are said to have a "mulberry" color, were largely iron based.

The title of this post, by the way, represents an abuse of language. This abuse is deliberate.

Uranium is a ubiquitous element, as common as tin. There are between 4.5 and 5 billion tons of it in the oceans alone. I offered significant detail on planetary flows of uranium - giving the references - elsewhere on the internet: Sustaining the Wind, Part 3, Is Uranium Exhaustible?

A very large source of human exposure to uranium is fertilizer, since uranium has a high affinity for phosphate. (Phosphate ores in Florida were once considered as uranium ores, until richer sources of uranium were found. These same ores are now dug to make fertilizer and the uranium in them is not removed; it would be too expensive to do so.)

The existence of uranium is the last best hope of the human race as the planetary atmosphere collapses owing to the ongoing catastrophe of climate change. Regrettably, fear of this element in the periodic table - it is a chemically toxic element, as is the natural arsenic found in many worldwide water supplies, most notably in Bangladesh - prevents its use to save what still might be saved. It is worth noting that over the long term, especially in continuous recycling schemes, nuclear power can reduce the radioactivity of the planet.