NNadir

NNadir's Journal
Some Reactor Physics for the Production of Anti-Proliferation Plutonium.

All of humanity's puny efforts to address climate change have failed. I keep a spreadsheet of data from the Mauna Loa Carbon Dioxide Observatory that compares each weekly measurement with the measurement for the same week one year earlier. This week, the level of carbon dioxide was 2.07 ppm higher than it was a year ago, which, compared with most of the data over the last 5 years, is relatively mild, but on the broader scale is highly disturbing. In the 20th century, the average of all such data (collected beginning in 1958) was 1.54 ppm per year. In the 21st century, this same figure is 2.12 ppm. Of the 30 highest such data points, 19 occurred in the last 5 years, 21 in the last 10 years, and 23 in the 21st century. The highest such data point ever recorded was on July 31, 2016: 5.04 ppm over the value for the same week in 2015.
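The year-over-year comparison described above is simple to automate. What follows is a minimal Python sketch, not the author's actual spreadsheet: it assumes the weekly Mauna Loa readings have already been loaded into a dictionary keyed by (year, week number), which is a hypothetical layout rather than the format of NOAA's published files, and the readings in the usage lines are made up for illustration.

```python
from statistics import mean

# Hypothetical layout: {(year, week_number): weekly mean CO2 in ppm}
Readings = dict[tuple[int, int], float]


def yoy_increments(weekly: Readings) -> Readings:
    """Difference between each weekly reading and the same week one year earlier.

    Weeks with no matching reading in the prior year are skipped.
    """
    return {
        (year, week): ppm - weekly[(year - 1, week)]
        for (year, week), ppm in weekly.items()
        if (year - 1, week) in weekly
    }


def mean_increment(increments: Readings, first_year: int, last_year: int) -> float:
    """Average year-over-year increment for years first_year..last_year inclusive.

    Raises statistics.StatisticsError if no increments fall in that range.
    """
    values = [d for (year, _), d in increments.items() if first_year <= year <= last_year]
    return mean(values)


def top_increments(increments: Readings, n: int = 30) -> list[tuple[tuple[int, int], float]]:
    """The n largest year-over-year increments, largest first."""
    return sorted(increments.items(), key=lambda kv: kv[1], reverse=True)[:n]


if __name__ == "__main__":
    # Made-up readings, for illustration only.
    weekly = {(2015, 31): 400.00, (2015, 32): 401.00, (2016, 31): 405.04, (2016, 32): 404.00}
    inc = yoy_increments(weekly)
    print(mean_increment(inc, 2016, 2016))
    print(top_increments(inc, n=1))
```

Calling mean_increment once over the 20th-century years and once over the 21st-century years gives the kind of century comparison quoted above, and top_increments produces the ranked list from which a "30 highest" tally can be made.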

We are now approaching the late September/early October annual minimum for concentrations of the dangerous fossil fuel waste carbon dioxide in the planetary atmosphere. It will be well above 400 ppm, 22 or 23 ppm more than it was just ten years ago. No one now living will ever see carbon dioxide concentrations below 400 ppm in their lifetime.

The popular response to climate change these days consists almost entirely of hyping so called "renewable energy." Since so called "renewable energy" has not worked, is not working, and will not work, this approach is extremely dangerous to humanity, and indeed to all living things, particularly when one considers the trillions of dollars squandered on it in just the last ten years. Combined, all the solar and wind energy produced by all the expensive and useless facilities ever built in half a century of wild cheering cannot produce as much energy in a year as is produced by the annual increase in the use of the dangerous fossil fuel natural gas, that use being secured by the popular imagination about so called "renewable energy." ("Renewable" is, by the way, a fraudulent term, since wind and solar plants depend on access to either exotic or extremely dangerous materials.)

In more than 3 decades of study, I have convinced myself that the only option that might work to mitigate climate change, even to arrest it (although that's very unlikely), is nuclear energy.

Of course, nuclear energy suffers from a negative public perception owing to selective attention paid to its risks - and like all energy systems, nuclear energy has risks - to the exclusion of the risks of all other forms of energy. For example, half of the 7 million air pollution deaths that take place each year result from dangerous fossil fuel waste, the other half from dangerous "renewable" biomass combustion waste, and yet very little concern is expressed about this point compared to so called "nuclear waste," which I will argue below is not even "waste" at all.

Another fun comparison is the risk of nuclear war. Since the early 20th century, the overwhelming majority of people killed by weapons of mass destruction have been killed by fossil fuel weapons. The number of people killed by petroleum based weapons of mass destruction dwarfs the number killed by nuclear weapons of mass destruction; and yet no one calls for shutting petroleum refineries because crude oil can be, and is, diverted to make napalm and jet fuel.

We cannot un-invent nuclear weapons, nor can we ever make them impossible, since the supply of uranium on this planet is inexhaustible. I showed this by appeal to the scientific literature elsewhere on the internet:

Is Uranium Exhaustible?

I offered my views on the implications of this fact in yet another place on the internet: On Plutonium, Nuclear War, and Nuclear Peace

We have now accumulated enough used nuclear fuel, which people who can't think clearly incorrectly call "nuclear waste," to do some of the remarkable things that scientists in the 1950s and 1960s envisioned for radioactive materials, back when most of the world's nuclear reactors were designed not to generate energy but to make weapons grade plutonium. Back then there simply wasn't enough cesium-137, for example, to destroy organohalides contaminating water supplies worldwide. Regrettably, fear and ignorance of all things radioactive have prevented the application of this superior approach to such serious environmental issues.

Used nuclear fuel also contains considerable amounts of the elements neptunium and americium, which, as I argued in one of the links above, are excellent tools for making plutonium (the key to any serious effort to address climate change) that is simply unusable in nuclear weapons.

These ideas certainly don't originate with me; I merely report them. (As shorthand, I refer to them as the "Kessler solution," since Kessler is one of the nuclear scientists who have worked to advance this idea, although he is surely not the only one.)

Despite catcalls from the peanut gallery of folks who know nothing at all about nuclear energy but hate it anyway, highly educated and highly trained nuclear engineers around the world have been working on these ideas, one hopes with a growing sense of urgency, since nuclear weapons are now being controlled by petulant brats who grew up isolated from the real world: the puerile so called "President of the United States" and the disgusting little twerp who rules North Korea.

In my files this morning, as I stumbled through some collected literature that I had not yet reviewed, I came across this paper:

Long-life fast breeder reactor with highly protected Pu breeding by introducing axial inner blanket and minor actinides (Hamase et al Annals of Nuclear Energy 44 (2012) 87–102)

From the introductory text of the paper (with some artifacts of its translation from Japanese), we can grasp the basic idea:

In the wake of an interest in nuclear electricity production due to exhaustion of fossil fuels and issue of global warming, today the requirement of uranium (U) is increasing in the world. On the other hand, the prospect of U supply in the world has been reported to be about 100 years (OECD/NEA-IAEA, 2008) and the exhaustion of U resources is concerned with the expansion of nuclear power use. To meet the energy demand, a FBR has been focused on as a Pu producer. However, in the conventional FBR, generated Pu in axial/radial outer blankets consists of more than 93% of 239Pu. This kind of Pu is categorized as a ‘‘weapon-grade Pu’’ (Pellaud, 2002) and is concerned for nuclear proliferation. Recently, the concept of Protected Plutonium Production (P3) to increase the proliferation resistance of Pu by transmutation of MA has been proposed by Saito (2002, 2004, 2005). In this concept, MA can be utilized as an origin of 238Pu since dominant nuclides of MA such as 237Np and 241Am are mainly well transmuted to that isotope. The features of 238Pu, high decay heat (567 W/kg) and high spontaneous fission neutron rate (2660 n/g/s) (Matsunobu et al., 1991) are well known to hinder the assembling Pu in a nuclear explosive device (NED) and reduce the nominal explosive yield. Furthermore, it has been reported that 240Pu and 242Pu also play an important role for denaturing of Pu (Sagara et al., 2005), since 240Pu and 242Pu transmuted from MA has relatively large BCM and high spontaneous fission neutron rate (1030 n/g/s and 1720 n/g/s) (Matsunobu et al., 1991). Also, based on the P3 proposal, Meiliza et al. (2008) has reported that the proliferation resistance of Pu produced in axial/radial blankets of conventional FBR was increased by doping a small amount of MA into axial/radial outer blankets. MA is, therefore, effective to mitigate the nuclear proliferation concern.

"BCM" is "bare critical mass."

The paper contains a great deal of technical information about the reactor design and properties, and various cases are shown.
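As a rough illustration of how these isotopes "denature" plutonium, the decay heat and spontaneous fission neutron emission of an isotopic mixture can be estimated as mass-weighted sums. The Python sketch below uses only the per-isotope figures quoted above (567 W/kg of decay heat for 238Pu; 2660, 1030, and 1720 n/g/s for 238Pu, 240Pu, and 242Pu). It assumes every other isotope contributes nothing, which understates both totals. The isotopic compositions in the usage lines are hypothetical, not results from the paper, and the sketch encodes no proliferation-resistance criterion such as Pellaud's or Kessler's.

```python
# Per-isotope figures as quoted in the excerpt above (Matsunobu et al., 1991).
# Isotopes not listed are treated as contributing zero: a simplifying assumption.
DECAY_HEAT_W_PER_KG = {"Pu238": 567.0}
SF_NEUTRONS_PER_G_S = {"Pu238": 2660.0, "Pu240": 1030.0, "Pu242": 1720.0}


def mixture_properties(mass_fractions: dict[str, float]) -> tuple[float, float]:
    """Return (decay heat in W/kg, spontaneous fission neutrons in n/g/s) for a Pu mixture.

    mass_fractions maps isotope names to mass fractions that must sum to 1.
    """
    total = sum(mass_fractions.values())
    if abs(total - 1.0) > 1e-6:
        raise ValueError(f"mass fractions sum to {total}, not 1")
    heat = sum(f * DECAY_HEAT_W_PER_KG.get(iso, 0.0) for iso, f in mass_fractions.items())
    neutrons = sum(f * SF_NEUTRONS_PER_G_S.get(iso, 0.0) for iso, f in mass_fractions.items())
    return heat, neutrons


if __name__ == "__main__":
    # Hypothetical compositions, for illustration only.
    weapons_like = {"Pu239": 0.93, "Pu240": 0.07}
    hypothetical_denatured = {"Pu238": 0.10, "Pu239": 0.60, "Pu240": 0.25, "Pu242": 0.05}
    for name, mix in [("weapons-like", weapons_like), ("denatured", hypothetical_denatured)]:
        heat, n = mixture_properties(mix)
        print(f"{name}: {heat:.1f} W/kg, {n:.1f} n/g/s")
```

Because the sums are linear, every percent of 238Pu in this model adds about 5.7 W/kg of heat and about 27 n/g/s, which is why transmuting minor actinides into 238Pu is such an effective denaturant.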

Depending on the type of fuel used (oxide or metal), the reactor can be designed to operate for as long as roughly 6000 effective full power days (5900 in the paper's metallic fuel case), about 16 years. Plutonium that is undergoing fission is hardly available for making nuclear weapons, and in any case, the usefulness for nuclear weapons of any plutonium in the reactor is greatly reduced by the presence of denaturing isotopes, in particular the heat generating isotope 238Pu (the same isotope that powered the Cassini mission).
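Converting effective full power days (EFPD) to years is simple division, but calendar life also depends on how much of the time the reactor actually runs at full power. The sketch below assumes a 365.25-day year and treats the capacity factor as a free, hypothetical parameter; the EFPD values are the ones quoted from the paper's conclusion.

```python
DAYS_PER_YEAR = 365.25


def efpd_to_years(efpd: float, capacity_factor: float = 1.0) -> float:
    """Calendar years needed to accumulate `efpd` effective full power days.

    capacity_factor is the assumed average fraction of full power (0 < cf <= 1);
    the default of 1.0 gives full-power years.
    """
    if not 0.0 < capacity_factor <= 1.0:
        raise ValueError("capacity_factor must be in (0, 1]")
    return efpd / (DAYS_PER_YEAR * capacity_factor)


if __name__ == "__main__":
    # EFPD figures quoted in the paper's conclusion.
    cases = {
        "conventional MOX": 1700,
        "MOX + inner blanket + MA": 2900,
        "metal + inner blanket + MA": 5900,
    }
    for label, efpd in cases.items():
        print(f"{label}: {efpd_to_years(efpd):.1f} full-power years, "
              f"{efpd_to_years(efpd, capacity_factor=0.9):.1f} calendar years "
              f"at an assumed 90% capacity factor")
```

At an assumed 90% capacity factor, the metallic fuel case stretches from about 16 to about 18 calendar years between refuelings.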

These types of reactors are essentially fueled by depleted uranium. One can show that the uranium already mined, along with the waste thorium generated by the failed and useless wind and electric car industry, can easily fuel all of humanity's energy needs for several centuries to come without any mining of any energy related material of any type: no petroleum, no coal, no natural gas, and indeed, no lanthanides, cadmium, etc., etc., for useless wind and solar junk.

From the paper's conclusion:

The feasibility study on simultaneous approaches to the extension of core life-time and the high protected Pu breeding by introducing the axial inner blanket and doping MAs in a large-scale sodium-cooling FBR has been performed for mix-oxide MOX and metallic fuel. Firstly, as the extension of core life-time, the analytical results showed that if MA was doped into the axial inner blanket, the main fission reaction zones were shifted from the active core to the axial inner blanket, and the core life-time was extended remaining reactivity swing small because 238Pu transmuted from MA was the fissionable nuclide in the fast neutron region. The maximum available EFPDs in MOX-fueled FBR with introducing the axial inner blanket and MA was extended from 1700 to 2900 compared with the conventional MOX-fueled FBR. The maximum available EFPDs in the case of metallic-fueled FBR with introducing the axial inner blanket and MA was extended to 5900.

Secondly, as the proliferation resistance of Pu, it has been reported that Pu produced in axial/radial outer blankets of conventional FBR was increased by doping a small amount of MA into them, and ATTR, an evaluation function of proliferation resistance of Pu based on isotopic material barriers such as DH and SN, has been suggested to categorize produced Pu. In the present paper, conventional ATTR was modified by taking into account BCM as ATTRmod, which was applied to evaluate the proliferation resistance of Pu generated in the axial inner blanket and axial/radial outer blankets. It was found that if 40 wt.% and 28.5 wt.% of MA were doped into the axial inner blanket in MOX and metallic fuel, respectively, the proliferation resistance of Pu generated in the axial inner blanket was significantly increased to satisfy the criteria of ‘‘practically unusable for an explosive device’’ proposed by Pellaud and ‘‘technically unfeasible for a high-technology HNEDs’’ proposed by Kessler and Kimura. Assumed that Pu generated in the axial inner blanket and also axial/radial outer blankets were collected and reprocessed together, the proliferation resistance of Pu generated in all blankets was also increased. Furthermore, in order to increase the proliferation resistance of Pu generated in axial/radial outer blankets, only 4 wt.% of MA was required in MOX and metallic fuel. For not purpose of extension of core life-time, only 5 wt.% of MA doping into the axial inner blanket was needed to increase the proliferation resistance of Pu in MOX and metallic fuel.

I am not necessarily, by the way, endorsing this particular reactor; it's sodium cooled, and I personally don't like sodium coolants. But the basic ideas of plutonium management are very important, since plutonium is the last best hope of Earth.

Have a nice Sunday afternoon.

Working with one of the most refractory and hardest materials known: Tantalum Hafnium Pentacarbide.

Generally, most people are aware that spacecraft and supersonic aircraft require refractory (high melting) materials to avoid being burned up by air friction.

Since the end of the "space race" and the "cold war," there has been less interest in refractory materials than there was some years back, something I know from having attended a bunch of presentations by materials science departments while my son was selecting a school.

Be that as it may, whether or not it is generally known, future generations, owing to our inattention, our fixation on dumb ideas that don't work, and our general irresponsibility, will require refractory materials to reverse whatever can be reversed of our willingness to screw them over by dumping trillion-ton quantities of dangerous fossil fuel waste (at a rate of over 30 billion tons per year) while we all wait insipidly, as if for Godot, for the grand solar and wind Nirvana that never comes (and never will come).

I could discuss that for hours, but rather than do so, I'd rather simply focus on a paper I collected some time ago on a rather remarkable material that fits the bill, Ta4HfC5, tetratantalum hafnium pentacarbide.

The paper I'll discuss is this one: Reduced-temperature processing and consolidation of ultra-refractory Ta4HfC5 (Int. Journal of Refractory Metals and Hard Materials 41 (2013) 293–299)

The introduction gives a nice description of the remarkable properties of this material:

Carbides, nitrides, and borides are of interest for many applications because of their high melting temperatures, high elastic moduli, and high hardness. Among all refractory compounds, 4TaC-HfC ranks among the highest, with an estimated melting temperature of 3942 °C and hardness of approximately 20 GPa at 100 g-f [1–3]. TaC is the most metallic of the IV and V transition metal monocarbides. It has the NaCl-type structure (B1, space group Fm-3m) and an exceptionally high melting point of 3880 °C [4–7]. TaC's relatively good oxidation resistance and resistance to chemical attack have been attributed to strong covalent-metallic bonding [8]. Other relevant properties of TaC include high strength, high hardness (11 to 26 GPa), wear resistance, fracture toughness (K_IC ≈ 12.7 MPa·m^1/2), low electrical resistivity (42.1 μΩ-cm at 25 °C), and high elastic modulus (up to 550 GPa). TaC is also reported to exhibit a ductile-to-brittle transition in the temperature range 1750–2000 °C that allows it to be shaped above the DBTT, and it also exhibits ductility of 33% at 2160 °C [9–12]. Similarly, HfC also crystallizes in the NaCl-type structure (B1, space group Fm-3m, close packed), and exhibits a high melting point (3890 °C, the highest among the binary metallic compounds) [13–15]. HfC also has good chemical stability, high oxidation resistance, high hardness (up to 33 GPa [16]), high electrical and thermal conductivity, and a high Young's modulus (up to 434 GPa) [17–24]. HfC has found applications in coatings for ultrahigh-temperature environments due to its high hardness, excellent wear resistance, good resistance to corrosion, and low thermal conductivity. HfC is also found in high-temperature shielding, field emitter tips, and arrays (HfC has the lowest work function of all transition metal carbides). In addition, HfC can be used as a reinforcing phase in tool steels [25–27].

However, if you reflect on it for even a moment, you realize the difficulty of working with such a material. It cannot be worked easily, and since its melting point is higher than that of almost any container in which it might be processed, it certainly can't be cast. Its melting point is even higher than those of remarkable materials like uranium nitride, thorium nitride, and thorium carbide. (Thorium oxide, which is mildly radioactive, has been widely used for ceramic refractory crucibles for handling molten metals.)

Such materials can only be handled by sintering, which involves heating them to roughly two thirds of their melting temperature (on the absolute, kelvin scale; pretty extreme in any case) and applying extreme pressure, conditions under which the elements can diffuse to a smaller or larger extent.
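Because the two-thirds rule of thumb applies to absolute temperature, the arithmetic is easiest in kelvin. The short Python sketch below assumes the 3942 °C melting temperature estimate for 4TaC-HfC quoted above and converts a fraction of the melting point into a processing temperature and back. It is only a rule-of-thumb calculation, not a model of sintering.

```python
KELVIN_OFFSET = 273.15
TA4HFC5_MELT_C = 3942.0  # estimated melting temperature quoted in the paper's introduction


def temperature_at_fraction(fraction: float, melt_c: float = TA4HFC5_MELT_C) -> float:
    """Temperature in degrees C equal to `fraction` of the melting point on the kelvin scale."""
    return fraction * (melt_c + KELVIN_OFFSET) - KELVIN_OFFSET


def homologous_temperature(t_c: float, melt_c: float = TA4HFC5_MELT_C) -> float:
    """Ratio T/Tm with both temperatures converted to kelvin."""
    return (t_c + KELVIN_OFFSET) / (melt_c + KELVIN_OFFSET)


if __name__ == "__main__":
    print(f"2/3 of Tm: {temperature_at_fraction(2 / 3):.0f} C")
    print(f"1500 C is {homologous_temperature(1500.0):.2f} of Tm")
```

By this measure, two thirds of the melting point is roughly 2540 °C, whereas the 1500 °C processing temperature used in the paper is only about 0.42 of the melting point on the kelvin scale, which is what makes the authors' "reduced-temperature" route notable.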

In this case, the authors milled hafnium carbide and tantalum carbide powders for a long time (18 hours), placed them in a graphite press under a pressure roughly 1000 times atmospheric pressure, heated them at 1500 °C (much lower than the melting point), and got pretty decent tetratantalum hafnium pentacarbide. (Machining this stuff is yet another problem, not addressed here.)
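Because the target phase is 4TaC-HfC, the starting powders must be combined in a 4:1 molar ratio. The Python sketch below works out the corresponding masses, assuming perfectly stoichiometric TaC and HfC powders and standard atomic weights. Real carbide powders are often slightly substoichiometric or contain oxygen, and the 100 g batch size is arbitrary, so this is an illustration, not the authors' recipe.

```python
# Standard atomic weights, g/mol.
ATOMIC_WEIGHT = {"Ta": 180.948, "Hf": 178.49, "C": 12.011}


def molar_mass(formula: dict[str, int]) -> float:
    """Molar mass in g/mol of a formula given as {element: count}."""
    return sum(ATOMIC_WEIGHT[el] * n for el, n in formula.items())


def batch_masses(total_g: float, tac_per_hfc: int = 4) -> tuple[float, float]:
    """Masses (g) of TaC and HfC giving `tac_per_hfc` mol TaC per mol HfC in a `total_g` batch.

    Assumes ideal stoichiometric monocarbides.
    """
    m_tac = molar_mass({"Ta": 1, "C": 1})
    m_hfc = molar_mass({"Hf": 1, "C": 1})
    mol_hfc = total_g / (tac_per_hfc * m_tac + m_hfc)
    return tac_per_hfc * mol_hfc * m_tac, mol_hfc * m_hfc


if __name__ == "__main__":
    print(f"Ta4HfC5 molar mass: {molar_mass({'Ta': 4, 'Hf': 1, 'C': 5}):.2f} g/mol")
    tac, hfc = batch_masses(100.0)
    print(f"100 g batch: {tac:.2f} g TaC + {hfc:.2f} g HfC")
```

Since TaC and HfC have nearly the same molar mass, the mass ratio ends up close to the molar ratio: about 80 g of TaC to 20 g of HfC per 100 g of mix.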

This material is not ready for prime time, nor will it ever be.

Tantalum is mostly utilized in cell phones, where it is a constituent of the capacitors on which those devices depend. The mining of tantalum is a great human tragedy, the "coltan" issue. (Tantalum is always found in ores that also contain niobium, which was formerly known as columbium, hence the name "coltan" for the ore.) Tantalum is one of the "conflict" elements, and mining it is simply a horror.

This disturbing documentary, "Blood Coltan," is available online:

Hafnium is a side product of the nuclear industry. It is always found in ores of its congener zirconium, which is widely used in nuclear reactors. Typically the amount of hafnium in zirconium ores is on the order of 1-3%. However, since hafnium has a very large neutron capture cross section (and is sometimes used in control rods, particularly in small reactors like those on nuclear powered ships), it must be removed from zirconium before the zirconium can be used in nuclear reactors.

It is possible, however, to obtain hafnium-free zirconium from used nuclear fuel, where zirconium is a major fission product. The chemical separation of hafnium from zirconium is nontrivial, as is the separation of niobium from tantalum, owing to the "lanthanide contraction." It is possible to obtain monoisotopic zirconium-90 (which is lighter than "natural" zirconium) from the decay, via yttrium-90, of the fission product Sr-90, itself a useful heat source. Thus at some point it may be cheaper to utilize fission product zirconium instead of natural zirconium; at least it would be so in a sensible world run by intelligent and responsible people, a nuclear powered world.

But we don't live in such a world. (One may hope that future generations will be smarter than ours.)
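The Sr-90 route to zirconium-90 mentioned above is governed by ordinary exponential decay. Because the intermediate yttrium-90 has a half-life of only about 64 hours, essentially every Sr-90 atom that decays ends up as Zr-90 within days, so the fraction converted after t years is 1 - 2^(-t/T½). The sketch below assumes a Sr-90 half-life of 28.8 years (an approximate literature value) and ignores the tiny Y-90 inventory.

```python
SR90_HALF_LIFE_Y = 28.8  # approximate literature value, in years


def fraction_converted_to_zr90(years: float, half_life_y: float = SR90_HALF_LIFE_Y) -> float:
    """Fraction of an initial Sr-90 inventory that has decayed (via Y-90) to stable Zr-90.

    The short-lived Y-90 intermediate is neglected.
    """
    if years < 0:
        raise ValueError("years must be non-negative")
    return 1.0 - 2.0 ** (-years / half_life_y)


if __name__ == "__main__":
    for t in (10, 28.8, 50, 100, 200):
        print(f"after {t:>5} years: {fraction_converted_to_zr90(t):.1%} of the Sr-90 is now Zr-90")
```

After a century, roughly nine tenths of the original Sr-90 has become monoisotopic Zr-90, having released its decay heat along the way.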

This said, this information might be useful under many imaginable exotic conditions, and I found it interesting.

Have a nice Sunday.

Biodegradable Hydrogels for Glucose Sensitive Insulin Delivery to Treat Diabetics.

Insulin is not a cure for Type I diabetes; it is a treatment. The distinction is important for this disease, which, unlike Type II diabetes (which typically strikes older people), tends to strike people in their childhood.

A diagnosis of Type I insulin dependent diabetes means, at best, a lifetime of carefully monitoring one's own blood glucose concentrations and repeatedly, as appropriate, injecting oneself with insulin. A miscalculation can lead to hypoglycemia and sometimes serious health effects.

Many alternative ways of delivering insulin have been explored, and a few commercialized, but needles remain the main way of addressing the disease; moreover, it is not always easy to judge the times at which one needs, or needs to avoid, such an injection.

It would be nice if there were a method for delivering insulin in a way that was responsive to sugar concentrations. Thus it was encouraging to come across a paper published in the scientific journal ACS Applied Materials and Interfaces, this one:

Supersensitive Oxidation-Responsive Biodegradable PEG Hydrogels for Glucose-Triggered Insulin Delivery (Li et al ACS Appl. Mater. Interfaces, 2017, 9 (31), pp 25905–25914)

The authors write thusly:

Hydrogels have been investigated extensively and utilized widely in the fields of biotechnology, regenerative medicine, pharmaceutics, and personal hygiene.1−4 In the past two decades, responsive hydrogels have attracted particular attention owing to their great promise for delivery of drugs and bioactive macromolecules,5−7 sensing,8,9 diagnosis,10 bioanalysis and bioseparation,11,12 cell culture or tissue engineering,13,14 and so forth. Although various physical or (bio)chemical stimuli, including temperature, pH, light, enzymes or other biomolecules, reduction or electrochemical redox potential, mechanical force, and magnetic field, have been applied as triggers to induce the formation, change in shape or morphology, and degradation or dissociation of hydrogels,15−17 there are only a few papers on oxidation-responsive polymeric hydrogels that suffer from the weaknesses of low sensitivity or low response rate toward reactive oxygen species (ROS) or low mechanical strength...

... Although a number of papers report polymeric or supramolecular hydrogels that contain phenylboronic acid/ester moieties, a majority of them are associated with the glucose- or pH-sensitivities of these hydrogels.43−50 Recently, Hamachi and co-workers have reported H2O2-responsive supramolecular hydrogels formed by self-assembly of the hydrophobic p-boronophenyl methoxycarbonyl-capped di- or tripeptides. By incorporating various oxidative enzymes, such as glucose oxidase (GOx), into these hydrogels, they demonstrated a simple strategy for constructing, without tedious synthesis, soft materials responsive to various biochemical stimuli, such as glucose.51,52 Their works greatly expanded the applications of oxidation-responsive hydrogels. In this article, we describe a novel kind of oxidation-responsive hydrogels that were fabricated by the redox-initiated radical polymerization of a 4-arm-poly(ethylene glycol) (PEG) macromonomer having H2O2-cleavable acrylic bonds at the chain ends (Scheme 1). The hydrogels can be degraded by H2O2 via the sequential oxidation and 1,6-/1,4-elimination of the phenylboronic acid linker.53 These hydrogels are designed to be biocompatible and extremely sensitive to H2O2 because they were composed of hydrophilic PEG network and a small fraction (<10 wt %) of hydrophobic but highly sensitive linkers.54

The authors have so far tested their hydrogel only in vitro: its degradation in response to glucose, as well as its cytotoxicity against cultured cells.

I would imagine that the toxicology work required will be significant, both in China and in the US if they bring the product here. Thus any commercial application is at least a decade off. It's interesting work, though, and one hopes that if not this product, similar products will become available to serve as quasi-synthetic pancreases.

Their work was supported by the National Science Foundation of China, China being a government that unlike the current US government, does not hate science and scientists.

Enjoy the weekend.

A Beautiful Review Article on the Total Synthesis of Vancomycin.

Recently in this space, I mused on the biosynthetic origins of very complex natural products from the natural (by which I mean "genetically coded") amino acids.

A wonderful place to consider the interface of RNA catalysis and enzyme catalysis.

My post by the way, contains a statement that is wrong, this one:

I certainly knew better about both taxanes and vancomycins, but somehow wrote this statement anyway. Perhaps I meant to say "industrially synthetically accessible." I can't say. I wrote that post apparently late at night, several weeks ago.

Whatever.

The lab scale synthesis of these kinds of molecules, including Vancomycin, is a part of a very beautiful scientific discipline, "natural product synthesis," for which many Nobel Prizes have been awarded.

I have just alluded to one reason for doing these kinds of syntheses, which is that they are beautiful, works of art, works of high art. The other reason is more practical. By understanding how to manipulate the features of molecules of this complexity, one can make analogues which may be better suited for reasons of pharmacokinetics, toxicology, and (very importantly) bioavailability, bioavailability being the property of delivering a drug to the cellular or biochemical pathologically active regions.

The total synthesis of Vancomycin has been reviewed in a very nice article in the current issue of Chemical Reviews, this one:

Total Syntheses of Vancomycin-Related Glycopeptide Antibiotics and Key Analogues

One of the authors is Dale Boger, who received his Ph.D. under one of the great synthetic chemists of all time, E.J. Corey. (I have had two friends who worked in Corey's lab; both reported that he was personally a bit of an ass, but irrespective of that, he is one of the greatest American scientists of all time.)

Boger, one of the world's great synthetic chemists (now at the Scripps Research Institute), worked on vancomycin syntheses himself, and the review also covers the great work of other labs.

Boger's text reiterates the practical raison d’être for syntheses of this type in the introductory paragraphs of the review:

An important development in the field of glycopeptide antibiotics occurred in the late 1990s when three groups independently achieved the total synthesis of vancomycin. Given the sheer structural complexity of the natural product, this series of synthetic accomplishments was remarkable and at the frontier of the field of organic synthesis at that time. With reports of the rapid increase in resistant bacterial strains by health officials, this effort was driven not only by the challenge of developing an effective route to the complex natural product but also to pave the way for biological interrogation of previously inaccessible synthetic analogues. Herein, we review only work completing total syntheses of members of the vancomycin-related glycopeptide antibiotics, their aglycons, and synthetic analogues. Work on their semisynthetic modifications(1, 2) and methodological studies are not reviewed as they have been covered elsewhere.

The glycopeptide antibiotics are currently among the leading members of the clinically important natural products discovered through the isolation of bacterial metabolites. They possess a broad spectrum of antibacterial activity against Gram-positive pathogens with manageable side effects. Since their clinical introduction, the glycopeptide antibiotics vancomycin (1) and teicoplanin (6) have become the drugs of “last resort” when resistant bacterial infections are encountered (Figure 1). With the emergence of methicillin-resistant Staphylococcus aureus (MRSA), vancomycin (1) has been widely used in the clinic as the “go to” treatment.(3, 4) Originally restricted to hospitals, today more than 60% of both ICU (intensive care unit) and community acquired S. aureus infections are MRSA(5, 6) and were responsible for nearly 12 000 deaths in the United States in 2011 alone.(7) Moreover, infectious diseases (e.g., influenza and pneumonia), complicated by additional bacterial infections often requiring treatment with vancomycin, are ranked among the leading causes of death in the United States. The glycopeptide antibiotics are also recommended for use with patients allergic to β-lactam antibiotics and those undergoing cancer chemotherapy or ongoing dialysis therapy.(8) Consequently, the importance and clinical use of vancomycin continues to steadily increase since its introduction 60 years ago.(9) As vancomycin-resistant bacteria have been observed in the clinic in both enterococci (VRE, 1987)(10) and S. aureus (VRSA, 2002)(11-17) and as the prevalence of antibiotic-resistant pathogens has increased, discovery of the next-generation durable antibiotics capable of addressing such bacterial infections has become an increasingly urgent problem.(18)

Since the establishment of the structures of glycopeptide antibiotics, extensive synthetic efforts have been made through both semisynthetic and total synthesis means. These studies have laid the foundation for ongoing structure–function studies of the antibiotics, aiding in the definition of their mechanism(s) of action. They have also elucidated unanticipated new roles for added non-naturally occurring functionality that have led to the discovery of improved or rationally designed glycopeptide antibiotics.

Here's a figure from the text which particularly appealed to me:

Figure 4. Evans retrosynthetic analysis of vancomycin aglycon.

Organic chemists will recognize that molecule 28, an oxazolidone derived (probably by phosgenation) from (3-chloro-5-nitro-4-fluorophenyl)-β-hydroxyalanine and an early stage precursor in the Evans synthesis, is itself a nontrivial synthetic target, owing to its substitution pattern. Early in my career, I had the pleasure of working on BOC and FMOC N-protected oxazolidones, albeit diones.

Final stages of the Evans synthesis:

Esoteric, but interesting, I think.

A Minor Problem For Sound Science of the Effect of Offshore Windfarms on Seabirds: There Isn't Any.

I've just been leafing through a wonderful book that one wishes didn't have to be written.

It's um, this one: Why Birds Matter

One of my scientific interests is material flows, in which I focus on particular elements in the periodic table, and I've collected and read a great deal of literature on that subject. One very important element is phosphorus, on which the real "green revolution" - that would be the fertilizer revolution in the 1950s and not the absurd scheme to lace the world with wind turbines and solar cells - depended.

Why Birds Matter is written for our times, inasmuch as the argument consists largely of comment on the economic importance of birds, and on what it would cost the human economy if they ceased to exist. And they may cease to exist; many species already have, and more are sure to follow.

The only thing we really, really, really care about is, um, money, right and left.

And one of the big economic drivers on this planet is, um, food.

We absolutely must have phosphorus to feed humanity, and for that matter all living things, whether or not we decide that the only people who should eat are those who can pay for it.

It turns out that one of the most important sources of phosphorus on this planet is, um - well, there's no nice way to put it - bird shit. And this is the topic of chapter 9 in "Why Birds Matter."

The island nation of Nauru once had the highest per capita income in the world because it was the chief exporter of bird shit, or what bird shit had become after a few million years, phosphate rock. After Nauru, a small country, dug up all the bird shit on the island and exported it to Australia and New Zealand and elsewhere, the government decided to "invest" all the money, and all the investments went bad, and now Nauru is a very poor country with no resources, very little remaining phosphate, a population with a diabetes problem and an economy based on warehousing Islamic refugees who tried to make it to Australia but who were intercepted by the Australian Navy.

So Nauru needs seabirds to shit on it again. But seabirds are in trouble.

And one of the big troubles for seabirds is, um, wind farms, which are often, to my personal regret, described by people on our end of the political spectrum as "green" and good for the environment.

They are no such thing. I was recently challenged here to produce some, um, "peer reviewed" literature (which isn't, by the way, magical) on the negative impact of wind farms on the environment, so I pulled some stuff out of my files and poked around the recent literature on the topic. Here is my response to the challenge: Sure, I'd be pleased to...

After poking around a bit for something more up to date than what's in my files, I came across this nice piece: Lack of sound science in assessing wind farm impacts on seabirds (Green et al, Journal of Applied Ecology 2016, 53, 1635–1641). I believe it may be open access, so you can read it yourself if you're not in a library. (I'm in a library as I write, so I can't tell for sure whether it's open access, but I think it is.)

Some text from the paper:

And...

Collision risk models (CRMs) are used to predict the number of fatal collisions of flying birds with wind turbines and per capita additional mortality rates. In the UK, the most widely used CRM is that of Band (2012) (see review by Masden & Cook (2016)). The model requires estimates or assumptions about bird numbers and ages at the wind farm, attribution of birds at the wind farm to source populations, sizes and age structure of source populations, flight behaviour and avoidance rates. Data specific to the project and species being assessed are usually collected on seabird numbers and flight heights, judged by eye, but these estimates are subject to substantial uncertainties, variability and potential biases (Johnston et al. 2014), including:

1. accuracy of input variables is rarely quantified, is often poor, and the CRM outputs are highly sensitive to the values used, including flight speed (Masden 2015), and avoidance rate estimates;

2. in many cases, birds at risk are not attributed to source populations because recently developed tracking technologies are either not deployed at all or not on a sufficient scale for robust estimation;

3. count and flight height data are usually insufficient in quantity and quality for precise estimation of seasonal variation, age structure and age differences (Band 2012).

Total avoidance rates used for CRM calculations for seabirds, including within-wind farm avoidance of individual turbines and macro-avoidance by movement of birds around the turbine array, are most often based upon judgement or extrapolation from other contexts rather than pertinent data. Empirical values are only available from a few species (mostly gulls and terns) and usually extrapolated from studies of onshore wind farms, where different circumstances prevail (Cook et al. 2014). Robust direct estimates of within-wind farm avoidance rates are lacking for seabird species frequently present in and near planned and consented offshore wind farms in the UK, such as northern gannet Morus bassanus and black-legged kittiwake Rissa tridactyla (Cook et al. 2014). Macro-avoidance and displacement rates have been estimated using radar, visual surveys and imaging, but robust quantitative estimates...
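The sensitivity to avoidance rates described in this passage can be illustrated with a toy calculation. This is a minimal sketch of my own, not Band's (2012) model: it assumes only that expected collisions scale as (bird transits through the rotors) × (chance a transit ends in a collision if the bird takes no evasive action) × (1 − avoidance rate), and every number in it is hypothetical.

```python
def expected_collisions(transits_per_year: float,
                        p_collision_no_avoidance: float,
                        avoidance_rate: float) -> float:
    """Toy estimate: transits x collision probability x (1 - avoidance).

    Hypothetical simplification for illustration only; not Band's (2012) CRM.
    """
    if not 0.0 <= avoidance_rate <= 1.0:
        raise ValueError("avoidance_rate must lie in [0, 1]")
    return transits_per_year * p_collision_no_avoidance * (1.0 - avoidance_rate)


# Same hypothetical bird traffic, three plausible-looking avoidance rates.
for avoidance in (0.98, 0.99, 0.995):
    n = expected_collisions(10_000, 0.05, avoidance)
    print(f"avoidance {avoidance:.3f}: {n:.1f} collisions per year")
```

Moving the assumed avoidance rate from 0.98 to 0.99, a change that is small compared with the uncertainty in values based on judgement or extrapolation, halves the predicted toll.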

By the way, birds and bats are only part of the reason that the wind industry sucks, but it is, I think, an important part.

The wind and solar industries are nothing more than fig leaves for the dangerous gas industry, and the dangerous natural gas industry is killing us as surely as the dangerous coal industry is.

This post won't get the more than 60 recs a post on this website got for a poorly reported blurb about how wonderful the wind industry is for, um, mussels, but I think if we cannot question our own assumptions, cannot rethink our biases, we will not serve humanity.

Don't be rote. Think.

Have a nice day tomorrow.

Quorum-Sensing Peptide-Modified Polymeric Micelles for Brain Tumor-Targeted Drug Delivery

The title of this post comes directly from the paper I'll briefly discuss here, this one: d-Retroenantiomer of Quorum-Sensing Peptide-Modified Polymeric Micelles for Brain Tumor-Targeted Drug Delivery (Lu et al ACS Appl. Mater. Interfaces, 2017, 9 (31), pp 25672–25682)

I don't spend as much time with this journal as I'd like, and I'd never have the time to follow it entirely; it's a rich journal published weekly, and it's full of wonderful stuff.

This paper caught my eye though: My mother died from a brain tumor as a relatively young woman. It wasn't pretty, and the memory of that event has never gone away; it never will, at least until I die. I can touch any one of those horrible associated events as if it were taking place now; it was that awful.

But this is a very cool paper from a purely scientific stance, since it begins with a discussion of the lives of bacteria (which generally have nothing to do with brain tumors) and moves to the behavior of cells in our brains. (That's beautiful.)

The text from introduction to the paper says pretty much what it's about:

The authors synthesize some "retro-inverso" peptides and put a paclitaxel payload on them. Paclitaxel is a very famous anti-cancer drug in the taxane family (Taxol is its best known brand name); it slows the replication of wildly dividing cells like cancer cells by interfering with spindle formation in mitosis. This drug was originally discovered in the bark of the Pacific yew, a relatively rare tree in the Pacific Northwest, causing some people to worry about the extinction of the tree to make cancer drugs. The world chemical community was, however, able to make the drug from precursors found in yew needles, which can be harvested without killing the trees, saving the trees and saving lives.

The payload approach, attaching a cancer drug to a protein (or in this case a peptide) that will recognize a target is very popular these days, particularly with antibodies. Antibodies having cancer (or other) drugs attached to them are known as "ADC's" or "Antibody Drug Conjugates." Peptides (which are short stretches of coupled amino acids not long enough to be considered a protein) also have recognition ability, although their conjugated use for payload delivery is somewhat more rare.

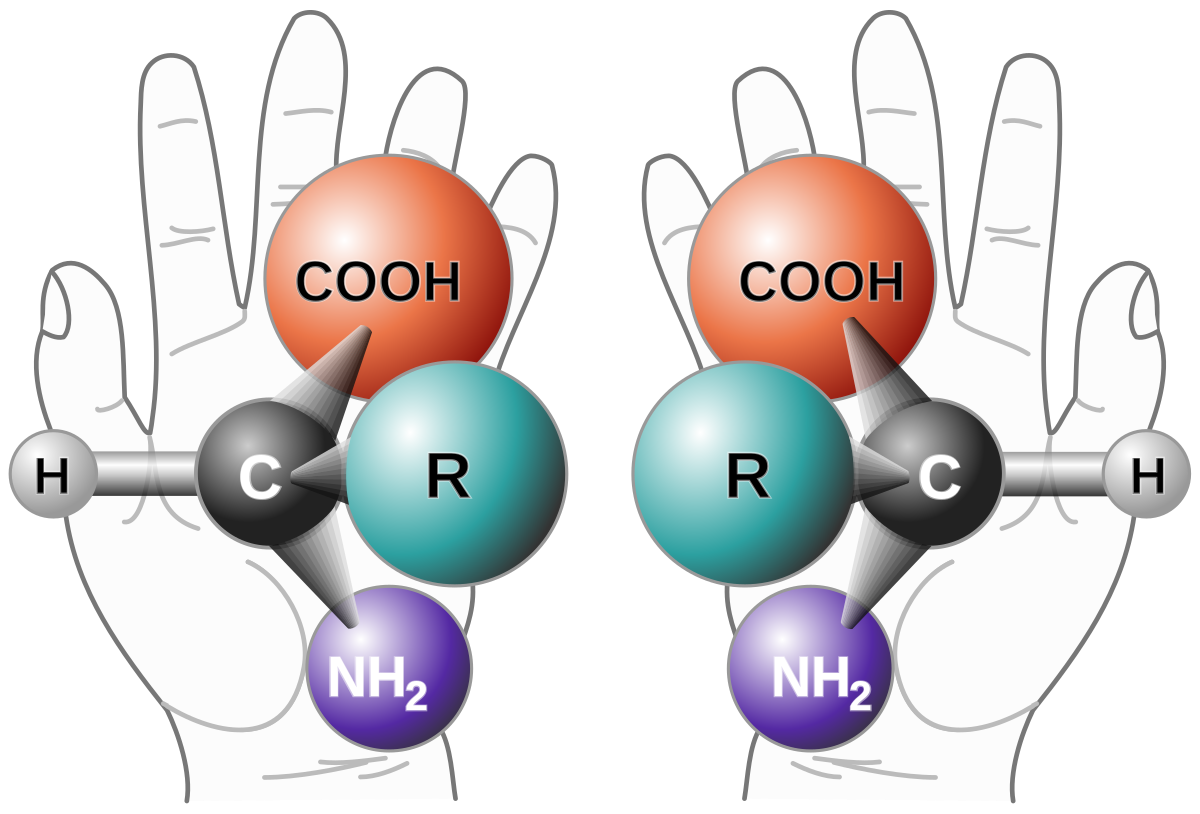

"Retro-inverso" peptides are peptides where the sterochemistry, the three dimensional arrangement of the constituent amino acids, is inverted from the stereochemistry of the natural amino acids, all of which (except cystine for reasons of nomenclature) have an "S" configuration as opposed to the unnatural "R" configuration. R and S in the case of amino acids refers to D and L configurations; all natural amino acids are L; their mirror images are D.

(If you are unfamiliar with this concept, this nice internet picture may help:

These are different molecules because like your hands, which are also mirror images, they cannot be superimposed upon one another.)
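For anyone who wants the R/S versus D/L bookkeeping spelled out, here is a small sketch that returns the R/S (Cahn-Ingold-Prelog) descriptor of the alpha carbon for each of the 20 standard amino acids, given by one-letter code, in either its L or D form. It encodes the two exceptions to the "L means S" rule, glycine (no stereocenter) and cysteine (L is R); the function name is my own, for illustration.

```python
from typing import Optional

STANDARD_CODES = set("ACDEFGHIKLMNPQRSTVWY")
ACHIRAL = {"G"}  # glycine: two hydrogens on the alpha carbon, so no stereocenter
L_IS_R = {"C"}   # cysteine: CH2SH outranks COOH under CIP rules, so L-Cys is R


def alpha_carbon_cip(code: str, series: str) -> Optional[str]:
    """Return 'R' or 'S' for the alpha carbon, or None for glycine."""
    code = code.upper()
    if code not in STANDARD_CODES:
        raise ValueError(f"unknown one-letter code: {code!r}")
    if series not in ("L", "D"):
        raise ValueError("series must be 'L' or 'D'")
    if code in ACHIRAL:
        return None
    l_form = "R" if code in L_IS_R else "S"
    # The mirror image (D form) inverts the descriptor at the stereocenter.
    if series == "L":
        return l_form
    return "S" if l_form == "R" else "R"


for aa in "AGC":
    print(aa, alpha_carbon_cip(aa, "L"), alpha_carbon_cip(aa, "D"))
```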

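A "retro-inverso" peptide is one in which all the amino acids are D rather than L, and in which the sequence is also reversed, so that the side chains end up arranged much as they are in the parent L peptide. Because the enzymes that degrade peptides (proteases) recognize L amino acids, this gives such peptides a much longer biological half-life than they would have if they were L, giving them more time to have their biological effect.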
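The sequence bookkeeping for a retro-inverso analogue is simple enough to sketch. Assuming the common (but not universal) convention that upper-case one-letter codes denote L residues and lower-case codes denote D residues, the analogue is the parent sequence written backwards with every chiral residue switched to D; glycine, having no stereocenter, stays as it is. The example sequence is arbitrary, not the peptide from the paper.

```python
STANDARD_CODES = set("ACDEFGHIKLMNPQRSTVWY")


def retro_inverso(l_sequence: str) -> str:
    """Reverse an all-L peptide (upper-case one-letter codes) and switch it to D.

    D residues are written in lower case; glycine is achiral, so it stays 'G'.
    """
    if not l_sequence or any(c not in STANDARD_CODES for c in l_sequence):
        raise ValueError("expected an all-L sequence in upper-case one-letter code")
    # "Retro": reverse the order, which reverses the direction of the amide bonds.
    # "Inverso": invert each stereocenter by switching from L to D.
    return "".join(c if c == "G" else c.lower() for c in reversed(l_sequence))


print(retro_inverso("ACDEFG"))  # arbitrary example sequence
```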

(The "retro-inverso" approach was pioneered by the late Dr. Murray Goodman at UCSD. I knew him well enough personally to be on a first name basis with him; he once took me to lunch at the faculty club where he wanted to know how it was that I spoke French. He was a very nice man, and a very impressive scientist.)

This approach seems to have worked quite well on mice having gliomas (brain cancer tumors).

From the text:

Here's a nice picture of what's going on in this work from the paper itself:

This work by the way comes out of the Department of Pharmaceutics, School of Pharmacy, Fudan University & Key Laboratory of Smart Drug Delivery (Fudan University), Ministry of Education, Shanghai 201203, China.

This science was supported by the Chinese government.

At one time we had a government that supported and encouraged science and funded it.

We now have a government composed entirely of a class of people who hold science and scientists in total contempt; who hate science and scientists. They're called "Republicans."

We allow these awful people to rule us at terrible risk to ourselves and all future generations of Americans.

I wish you a happy and productive work week.

Sure. I'd be very pleased to do so. A look at the Danish data for wind turbines is also...

...instructive though.

One needs to do a little Excel manipulation to do it, and be able to compare and utilize numbers, to see that this useless crap becomes landfill, on average, in about 15 years, meaning that, as with the case of the atmosphere, future generations will be screwed by our environmental wishful thinking.

The Danish Excel spreadsheets are here: Master Table of the Performance of Danish Wind Turbines
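The spreadsheet manipulation amounts to subtracting each turbine's commissioning year from its decommissioning year and averaging. Here is a minimal sketch of that arithmetic; the record layout is my assumption (the master table's actual columns are not reproduced here), and the toy records are invented for illustration, not taken from the Danish data.

```python
from statistics import mean
from typing import Iterable, Optional, Tuple

# Assumed record layout: (year commissioned, year decommissioned or None if running).
Record = Tuple[int, Optional[int]]


def mean_service_life(records: Iterable[Record]) -> float:
    """Average years in service over turbines that have been decommissioned."""
    lifetimes = [end - start for start, end in records if end is not None]
    if not lifetimes:
        raise ValueError("no decommissioned turbines in the input")
    return mean(lifetimes)


# Toy records for illustration only; NOT taken from the Danish master table.
toy = [(1990, 2005), (1995, 2012), (2000, None), (1988, 2001)]
print(mean_service_life(toy))
```

This averages only turbines that have already been retired; turbines still running are skipped rather than counted.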

The much ballyhooed Danish wind program which inspired a lot of stupidity around the world, after strewing thousands upon thousands of leaky crap whirligigs that depend on the disastrous lanthanide mines of Baotou, China, doesn't produce as much energy as a single nuclear plant built 30 years ago can produce.

Analysis of this data convinced me that my lack of hostility to the wind industry - which I may have held ten years ago - was inappropriate. The other thing that convinced me was the fact that we squandered a trillion bucks on this garbage in the last ten years alone (found here): Global "Investment" in so called "Renewable Energy".

A glance at the Mauna Loa carbon dioxide observatory data might show what this massive squandering has done for climate change: Carbon Dioxide Trends at Mauna Loa

If one were to spend as much time with this data as I have over the last 20-30 years, one could see that the rate of 2.3 ppm per year observed over the last ten years is the highest rate of decomposition of the atmosphere ever observed, meaning that all the money squandered on so called "renewable energy" hasn't done shit even to slow the second derivative of the atmosphere's collapse.
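The "first derivative" here is the year-over-year rise, and the "second derivative" is the trend in that rise. A minimal sketch of both, assuming a list of annual mean concentrations for consecutive years (transcribed by hand from the Mauna Loa record; no download interface is assumed), fits an ordinary least-squares slope to the annual increments. The synthetic series in the example is made up so that the answer is known in advance.

```python
def growth_and_acceleration(annual_means):
    """annual_means: list of (year, ppm) pairs for consecutive years.

    Returns (annual increments in ppm/yr, least-squares slope of those
    increments in ppm/yr^2). A positive slope means the rise is accelerating.
    """
    if len(annual_means) < 3:
        raise ValueError("need at least three years of data")
    years = [y for y, _ in annual_means]
    if any(b - a != 1 for a, b in zip(years, years[1:])):
        raise ValueError("years must be consecutive")
    ppm = [c for _, c in annual_means]
    increments = [later - earlier for earlier, later in zip(ppm, ppm[1:])]
    xs = years[1:]  # attribute each increment to the later year
    x_bar = sum(xs) / len(xs)
    y_bar = sum(increments) / len(increments)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, increments))
    den = sum((x - x_bar) ** 2 for x in xs)
    return increments, num / den


# Synthetic quadratic series: c(t) = 300 + 1.0*t + 0.01*t^2, t = years since 1960.
synthetic = [(1960 + t, 300 + 1.0 * t + 0.01 * t * t) for t in range(50)]
_, accel = growth_and_acceleration(synthetic)
print(f"acceleration: {accel:.4f} ppm/yr^2")
```

For a quadratic series the increments grow linearly, so the fitted slope recovers twice the quadratic coefficient (0.02 ppm/yr² here).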

Now, none of this is "peer reviewed;" it merely requires independent critical thinking to utilize.

This is also true of "peer reviewed" papers on energy and the environment, which I've spent the last 30 years reading; they require critical thinking as well.

This said, I'm happy to supply some links, some of which say that the environmental impact of the wind industry is inadequately evaluated, others suggesting what that impact is; and no, it's not zero, even ignoring the fact that the wind industry's main achievement is to increase rather than decrease our unsustainable and frankly criminal dependence on dangerous natural gas.

Here are some nice current papers from the primary scientific literature, the full text of which I have collected in my files.

From a very recent Chinese analysis of the wind portion of so called "renewable energy" (China faces the most health effects from the so called "renewable energy" scam):

Approach to Evaluate the Reliability of Offshore Wind Power Plants Considering Environmental Impact

Life cycle assessment and net energy analysis of offshore wind power systems (This one includes analysis of steel and concrete impacts, but is very weak on biological impacts.)

Bird Killer, Industrial Intruder or Clean Energy? Perceiving Risks to Ecosystem Services Due to an Offshore Wind Farm

Here's a whole book focusing on the biological implications of this stupid enthusiasm for this absurd and stupid scheme to fill the ocean and land with giant greasy turbines:

Wind Energy and Wildlife Interactions It was published just this year and it contains lots and lots and lots of "peer reviewed" references for anyone that's interested in the point. The text on the impact of German wind farms on the endangered red kites is illustrative.

Fuck the Kites; Wind Power is sexxxxxxxxxxyyyyyyyy.

One of the most moving references therein is this one, a scientific paper written as a "plea." The catchment area of wind farms for European bats: A plea for international regulations

Fuck the bats; Wind Power is sexxxxxxxxxxyyyyyyyy.

This paper is open access:

Wind Farm Facilities in Germany Kill Noctule Bats from Near and Far

Turning to the marine area, on which tens of thousands of papers have been written, many in recent years, these scientists, mostly marine biologists, complain that nobody has a clue about what effect all this offshore development will have on the benthos, specifically those creatures that live on the sea floor, you know, like mussels: Turning off the DRIP (‘Data-rich, information-poor’) – rationalising monitoring with a focus on marine renewable energy developments and the benthos

Even though we have no idea at all about the effect of the useless and ineffective wind industry (at least with respect to climate change), there's lots of cheering here for a pop news article about mussels.

It's a disgrace.

By the way, I've spent the last 30 years using much of my free time reading the primary scientific literature about energy and the environment. When Carbonite reports on the number of files on my computer it has backed up, the number is usually over 600,000 files. I would guess that at least 50-60% of the papers in my files relate to energy and the environment, with a large portion of that devoted to climate change. This includes an extensive number of papers related to the world's safest and most sustainable form of energy, nuclear energy, which neither the wind industry nor the solar industry can match for low environmental and human impact.

When I started this kind of time-intensive obsession, right after Chernobyl blew up, I was a fan of the wind industry and the solar industry, and I remained one until as recently as ten years ago. I was, in 1986, much to my personal disgrace, a critic and opponent of the nuclear industry.

I've changed my mind.

It's not like I did so with inattention. Quite to the contrary, I've invested lots and lots and lots of time, and if nothing else, my opinions, my strong opinions are informed.

If you want "peer reviewed" stuff, you need not ask for it from a blogger on the internet. You can get it yourself. Google Scholar is your friend.

My conclusion after all this work is this: The wind industry and the solar industry are useless, and they are dangerous. I am embarrassed by the rote enthusiasm for these schemes on my end of the political spectrum, the left, the environmental left. The number of recs this vague, and frankly misleading news item generated here is troubling to say the least.

The solar industry in particular, which after half a century of wild cheering can't even produce 2 of the 570 exajoules of energy generated and consumed by humanity is nothing more than a modern day asbestos, asbestos having been a "wonder material" generating wide enthusiasm in the mid 20th century, only to be a bane for our generation to clean up - if we dare to clean it up.

The solar industry, which has already participated in causing 10% of the rice crop in Southern China to exceed generally acceptable levels of cadmium, will prove far more baleful for future generations, this after we also dumped trillions of tons of carbon dioxide into the atmosphere in which they must live. We assume, rather criminally, that they will do what we ourselves were incompetent to do; this is the real meaning of all this "by 2050, or by 2080, or by 2100" crap put out by assholes like the poorly educated bourgeois brats at say, Greenpeace. It's not going to happen; they are likely to live in a long running disaster movie and will lack the resources to help themselves. The planet will be a giant Puerto Rico, circa 2017.

Future generations may not forgive us; personally, I don't think they should. The lies we told ourselves will bear no expiation of our place in history, which may be recorded as the "worst generation. Ever."

Have a nice Sunday evening.

Trispecific Antibody to HIV Developed; May Represent A Real Cure for AIDS.

AIDS, as many people know, is caused by HIV, a "retrovirus," that is, a virus that contains not DNA but RNA, which is "reverse transcribed" into DNA in infected cells, whereupon the DNA is integrated into the cell's genome and directs the cell's machinery to construct new viral particles; these are released from the cells, which ultimately die, and go on to infect more cells.

The HIV virus thus offers two opportunities for transcription error: During transcription to DNA, and during formation of RNA for new viral particles. Moreover the HIV viral machinery contains no transcription error correcting mechanism. Mutations in HIV thus arise 10X faster than with DNA viruses.

It was my privilege to work, albeit in a peripheral sense, on the first several of the second class of anti-HIV drugs, the protease inhibitors in the mid 1990's; the first class was reverse transcriptase inhibitors like AZT. This class of drugs had a real impact on the disease; the survival of people like, for one case, Magic Johnson, is a testimony to their success.

The HIV protease cleaves viral zymogens, zymogens being proteins that are inactive until a part of each molecule is cleaved off. HIV protease is an "aspartyl" protease, so called because aspartic acid residues in its active site carry out the cleavage; by cutting the viral precursor proteins, it activates the viral enzymes reverse transcriptase, integrase, and more of itself, the protease. Without this cleavage the viral proteins are inactive and the virus cannot function as a virus; it is inactivated, but not destroyed.

However, because mutations arise so rapidly in the virus, and because over ten billion viral particles are produced each day in an infected person with active AIDS, with a new generation of viruses being produced every 2.4 days, at a rate of 140 generations per year, resistant strains of the virus can and do arise rapidly.
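A rough order-of-magnitude calculation shows why resistance appears so quickly. Assume, as a simplification, that each new virion represents one replication cycle and that each base mutates with probability μ per cycle; a specific single-base substitution (one of the three possible alternative bases) then arises with probability of about μ/3 per virion. The figure of ten billion virions per day is the one quoted above; μ = 3×10⁻⁵ per base per cycle is an assumed value, of the order usually cited for HIV reverse transcriptase, not a number from this post.

```python
def daily_copies_of_each_point_mutant(virions_per_day: float,
                                      mutation_rate_per_base: float) -> float:
    """Expected number of times per day that any one specific single-base
    substitution arises, under the one-virion-one-cycle simplification."""
    # A specific substitution is one of three possible alternative bases.
    return virions_per_day * mutation_rate_per_base / 3.0


copies = daily_copies_of_each_point_mutant(1e10, 3e-5)  # 3e-5 is an assumption
print(f"each possible point mutant arises roughly {copies:.0e} times per day")
```

On these assumptions every possible single-base mutation, including single-substitution resistance mutations, is generated on the order of a hundred thousand times a day in such a patient.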

For the first generation of protease inhibitors developed in the 1990s, resistant strains had appeared for all of them by the year 2000.

The amino acid substitutions in the strains resistant to these drugs are listed here, where the letters refer to the one-letter codes for specific amino acids (the first letter is the original residue, the second its replacement), and the numbers refer to the position in the HIV protease:

D30N: Nelfinavir (Agouron/Pfizer).

M46I/I47V/I50V: Amprenavir (Vertex/Glaxo Wellcome).

L10R/M46I/L63P/V82T/I84V: Indinavir (Merck).

M46I/L63P/A71V/V82F/I84V: Ritonavir (Abbott).

G48V/L90M: Saquinavir (Roche).

The companies in the parentheses are the companies that developed these drugs.

(cf: Protein Science (2000) 9: 1898-1904)
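The notation is regular enough to parse mechanically: original residue, position, replacement residue, with multiple substitutions joined by slashes. Here is a small sketch that expands it into three-letter amino acid names; the function names are my own, for illustration.

```python
import re

THREE_LETTER = {
    "A": "Ala", "R": "Arg", "N": "Asn", "D": "Asp", "C": "Cys",
    "Q": "Gln", "E": "Glu", "G": "Gly", "H": "His", "I": "Ile",
    "L": "Leu", "K": "Lys", "M": "Met", "F": "Phe", "P": "Pro",
    "S": "Ser", "T": "Thr", "W": "Trp", "Y": "Tyr", "V": "Val",
}
SUBSTITUTION = re.compile(r"^([A-Z])(\d+)([A-Z])$")


def parse_substitutions(text: str):
    """Split e.g. 'M46I/I47V' into [('M', 46, 'I'), ('I', 47, 'V')]."""
    result = []
    for token in text.split("/"):
        m = SUBSTITUTION.match(token.strip())
        if m is None or m.group(1) not in THREE_LETTER or m.group(3) not in THREE_LETTER:
            raise ValueError(f"not a substitution: {token!r}")
        result.append((m.group(1), int(m.group(2)), m.group(3)))
    return result


def describe(text: str) -> str:
    """Rewrite one-letter substitutions with three-letter residue names."""
    return ", ".join(f"{THREE_LETTER[w]}{pos}{THREE_LETTER[m]}"
                     for w, pos, m in parse_substitutions(text))


print(describe("G48V/L90M"))
```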

Modern treatment for AIDS is not really curative; it is palliative and relies on a drug cocktail: a reverse transcriptase inhibitor (the class containing AZT), a protease inhibitor, and a third class, for example a CCR5 inhibitor, which blocks the virus from entering cells. It is hoped (and happily often observed) that the combination is effective, if expensive; failure to adhere to the regimen actually promotes the generation of resistant strains. (This is also true of other anti-infectives, such as antibiotics; however, with antibiotics, resistant strains evolve more slowly.)

These drugs do not kill the virus; they inactivate it or in some (problematic) cases, slow its replication without actually halting it.

It is thus with some excitement that I came across this paper in the most recent issue of Science: Trispecific broadly neutralizing HIV antibodies mediate potent SHIV protection in macaques

(Xu, Pegu et al, Science 10.1126/science.aan8630: Final Page Numbers Not Yet Assigned) The paper was published by a consortium of scientists from the pharmaceutical company Sanofi, and a team of academic and government institutions, the latter type of institutions being under attack by the orange ignoramus in the White House and his fellow science hating enablers in Congress and his cabinet.

"Trispecific" means that the antibody has multiple "CDR's" or "Complementarity Determining Regions" designed to bind to different areas on cells. Antibodies are, of course, Y shaped proteins that mediate immune responses, and the CDR's are small sequences of amino acids in these proteins that recognize foreign or diseased cells and attach themselves to them result in their destruction or inactivation. Most antibodies are monospecific, designed to attack a single region of display on the foreign body. This protein, by contrast has been designed to simultaneous attack any of three different regions involved in HIV pathology; by doing so it reduces the avenues by which this wily virus can escape destruction and thrive.

From the introductory text of the paper:

Although individual anti-HIV-1 bnAbs can neutralize naturally occurring viral isolates with high potency, the percentage of strains inhibited by these mAbs varies (21, 22). In addition, resistant viruses can be found in the same patients from whom bnAbs were isolated, suggesting that immune pressure against a single epitope may not optimally protect or treat HIV-1 infection. We hypothesized that the breadth and potency of HIV-1 neutralization by a single antibody could be increased by combining the specificities against different epitopes into a single molecule.

Glycans are sugar molecules bound to proteins, where they often act as signals. (They are very challenging molecules with which to work, although spectacular advances in their characterization are under way.) An "epitope" is the region of the target molecule, here the HIV envelope protein, that is recognized and bound by an antibody's CDRs.

Some technical text relating to the design of the antibodies:

We then evaluated different combinations of single arm and double arm specificities from PGDM1400, CD4bs, and 10E8v4 Abs for their expression levels and activity against a small panel of viruses (fig. S3), leading ultimately to the identification of trispecific antibodies VRC01/PGDM1400-10E8v4 and N6/PGDM1400-10E8v4 as lead candidates. When analyzed against a panel of 208 viruses (18) and compared to the parental antibodies alone, the highest neutralization potency and breadth was observed with N6/PGDM1400-10E8v4, with only 1 of the 208 viruses showing neutralization resistance...

The molecule was able to protect a model animal, the macaque, from infection by SHIV, a hybrid simian-human immunodeficiency virus. This said, a molecule of this design has not been tested in humans, although human volunteers tolerated a bispecific analogue quite well. It is not known whether the antibodies will generate ADAs, or "antidrug antibodies," which are antibodies against antibodies. This risk is always associated with protein drugs, despite their broad success in treating disease and saving lives.

The authors comment thusly:

The experimental details of the project are described in the supplementary information, which is apparently open access and is here: Supplementary Information

Here one may learn that the technology making this work possible is genetic engineering.

To wit:

All protein drugs are, in fact, GMO, and if you have a politically motivated hatred of genetic engineering and all things GMO because you get your "science" from reading Greenpeace pamphlets, Greenpeace being an organization that hates science with the same intensity as say, Republicans, these kinds of drugs are not for you.

Nevertheless, this is exciting and encouraging work.

Enjoy the weekend.

"Smart Bricks" for Measuring the State of Gasifier Walls.

It is becoming increasingly clear that all efforts to address climate change have failed, and it will fall to future generations - assuming that we have not permanently impoverished them and destroyed their futures with our bad thinking on energy and the environment, left and right - to clean up the mess with which we've left them.

In desultory reading on a night of reflection on my life, when I feel the guilt of my generation, the history of bad ideas in energy flows before me like a suddenly honest sinner's nightmare; but even as I recognize our crime against the future, I am forced to confess that not every bad idea is totally without merit, if at least something valuable can be extracted from it.

One of the worst ideas in energy, one that has actually seen industrial application, happily only in a limited number of places, was a pet project of Jimmy Carter, he of the bad ideas in energy. Briefly, at least while he was a Presidential primary candidate, though thankfully not when he actually became President, Barack Obama also endorsed this bad idea, in theory at least. The bad idea to which I refer is, of course, coal gasification, technically a form of "reformation," followed by conversion of the resulting gas into synthetic petroleum, known as Fischer-Tropsch fuels. (I opposed Obama in the 2008 primaries based on this position; happily he proved me wrong, since his policies were much superior to his rhetoric in this case.)

In coal gasification, the idea is to heat coal to very high temperatures under pressure in the presence of steam - actually not steam but supercritical water - to make a mixture of hydrogen and carbon oxides known colloquially as "syngas." If the heat for this process is generated by burning coal and dumping the waste indiscriminately into the air, this technology would at best double, at worst more than triple, the climate change impact of petroleum, said climate impact already being entirely unacceptable.
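The heat requirement follows from Hess's law. Idealizing coal as pure carbon (real coal also contains hydrogen, oxygen, sulfur and ash), the core gasification step, the water-gas reaction C + H₂O → CO + H₂, is strongly endothermic, while the water-gas shift CO + H₂O → CO₂ + H₂ gives back only part of that heat. The sketch below uses textbook standard enthalpies of formation at 298 K and ignores the temperature dependence of reaction enthalpies.

```python
# Standard enthalpies of formation at 298.15 K, kJ/mol (textbook values).
DHF = {"C(s)": 0.0, "H2(g)": 0.0, "H2O(g)": -241.8, "CO(g)": -110.5, "CO2(g)": -393.5}


def reaction_enthalpy(reactants: dict, products: dict) -> float:
    """Hess's law: sum(n * dHf) over products minus the same sum over reactants."""
    return (sum(n * DHF[s] for s, n in products.items())
            - sum(n * DHF[s] for s, n in reactants.items()))


water_gas = reaction_enthalpy({"C(s)": 1, "H2O(g)": 1}, {"CO(g)": 1, "H2(g)": 1})
shift = reaction_enthalpy({"CO(g)": 1, "H2O(g)": 1}, {"CO2(g)": 1, "H2(g)": 1})
print(f"C + H2O -> CO + H2:    {water_gas:+.1f} kJ/mol")
print(f"CO + H2O -> CO2 + H2:  {shift:+.1f} kJ/mol")
print(f"net C + 2 H2O -> CO2 + 2 H2: {water_gas + shift:+.1f} kJ/mol")
```

About 131 kJ must be supplied per mole of carbon gasified and the shift returns about 41, so the net reaction still needs roughly 90 kJ/mol; in conventional gasifiers that heat comes from burning part of the coal.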

Because we are not smart enough, or honest enough, even to stop using fossil fuels, choosing instead to address them with worthless pablum about a grand "renewable energy" future that did not come, is not here, and will not come, the engineering challenge for future generations will dwarf ours. They will need not only to ban fossil fuels, but also to remove the hundreds of billions of tons of dangerous fossil fuel waste, carbon dioxide, from the atmosphere, into which we are dumping it at a rate of over 30 billion tons per year, a rate which is rising, not falling, mostly because of the runaway popularity of the dangerous fossil fuel natural gas, for which so called “renewable energy” is nothing more than a fig leaf. (Dangerous natural gas is not clean; it is not safe, and in spite of tiresome and obviously untrue nonsense put out by purveyors of the grotesquely failed and ridiculously expensive so called “renewable energy” scam, it is not “transitional.”)
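Converting between the Mauna Loa concentrations in ppm and tonnages like these is a one-line calculation. This sketch assumes a dry-atmosphere mass of about 5.13×10¹⁸ kg and a mean molar mass of dry air of 28.97 g/mol (standard approximate values); with them, one ppm of carbon dioxide comes to roughly 7.8 billion tonnes.

```python
DRY_ATMOSPHERE_MASS_KG = 5.13e18  # approximate mass of the dry atmosphere
MOLAR_MASS_AIR = 28.97            # g/mol, mean for dry air
MOLAR_MASS_CO2 = 44.01            # g/mol


def gigatonnes_co2_per_ppm() -> float:
    """Mass of CO2 (Gt) corresponding to 1 ppm (mole fraction in dry air)."""
    moles_of_air = DRY_ATMOSPHERE_MASS_KG * 1000.0 / MOLAR_MASS_AIR
    grams_co2 = moles_of_air * 1e-6 * MOLAR_MASS_CO2
    return grams_co2 / 1e15  # 1 Gt = 1e15 g


per_ppm = gigatonnes_co2_per_ppm()
print(f"1 ppm CO2 = about {per_ppm:.1f} Gt; "
      f"a 2.3 ppm/yr rise = about {2.3 * per_ppm:.0f} Gt/yr staying in the air")
```

Here ppm is a mole fraction in dry air, which is how the Mauna Loa record reports it.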

Sophisticated arguments have been made that the thermodynamic and thus the related economic engineering challenges of removing carbon dioxide from the atmosphere make it next to impossible. A widely discussed paper on this topic is here:

Economic and energetic analysis of capturing CO2 from ambient air (House et al., PNAS 108 (51), 20428–20433 (2011))
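The thermodynamic floor behind such arguments can be estimated from the ideal work of separation. In the limit of removing only a small fraction of the CO₂ from a large volume of air and delivering it pure at the same pressure, the minimum work per mole is about RT ln(1/x), where x is the CO₂ mole fraction. This is the textbook idealization, not a reproduction of House et al.'s analysis; real processes require substantially more.

```python
import math

R = 8.314  # gas constant, J/(mol K)


def min_separation_work_kj_per_mol(mole_fraction: float,
                                   temperature_k: float = 298.15) -> float:
    """Ideal minimum work (kJ/mol CO2) to extract pure CO2 from a very large
    reservoir in which CO2 is present at the given mole fraction."""
    if not 0.0 < mole_fraction < 1.0:
        raise ValueError("mole_fraction must lie strictly between 0 and 1")
    return R * temperature_k * math.log(1.0 / mole_fraction) / 1000.0


w = min_separation_work_kj_per_mol(400e-6)  # about 400 ppm
print(f"{w:.1f} kJ/mol CO2, about {w / 44.01 * 1000:.0f} kJ per kg CO2")
```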

A number of arguments questioning this assumption have been advanced and, in the very same journal where the House paper was published, a team of scientists at Columbia has argued in an overview paper that House's paper better not be the last word because removing the dangerous fossil fuel waste carbon dioxide is an urgent matter: The urgency of the development of CO2 capture from ambient air (Lackner et al, PNAS 109 13156–13162 (2012)) Of course, this paper was published, as of this writing, almost 5 years ago, so whatever “urgency” there is about climate change, it’s been totally ignored, which is not to say it's really not urgent, only that it's becoming more urgent.

For a nice review of chemical air capture strategies see: Direct Capture of CO2 from Ambient Air (Jones et al Chem. Rev., 2016, 116 (19), pp 11840–11876) (I've attended lectures by the primary author of this review, Chris Jones, of Georgia Tech, at scientific meetings; I'm impressed by his work.)

From my perspective, air capture should be an achievable goal for human beings in a generation less stupid and selfish than ours. I say this only because plants clearly do it (albeit surprisingly inefficiently in energy to mass ratio terms), and therefore it must be possible, just as generations of human beings living before the Wright brothers recognized that heavier than air flight had to be possible, since birds and insects existed. House's paper explicitly states that biological strategies for removing carbon dioxide are not covered.

There is, of course, an option that simultaneously exploits both biological and physicochemical options for removing carbon dioxide from the atmosphere, and utilizes chemistry that I evoked at the outset of this post: reformation, not of coal, but of biomass. The combustion of biomass has been, of course, practiced for millennia, and it is still practiced widely today; but as practiced it is very dangerous: the waste from biomass combustion is responsible for about half of the seven million air pollution deaths that take place each year as of 2017, even as awful poorly educated dullards carry on about so called “nuclear waste,” which has killed no one in more than half a century of accumulation, and which is in actuality a valuable resource that future generations may appreciate more than most people in this entirely easily distracted generation are competent to understand.

No matter.

My hostility to so called “renewable energy” should be familiar to anyone familiar with my writings here and elsewhere, and biomass is often defined as “renewable energy” but, this said, I believe, as I do for so called “nuclear waste,” that biomass waste has great potential as a resource, most notably for the removal of carbon dioxide from the atmosphere, but also for the recovery of other critical materials, the most important of which is phosphorus. (World supplies of mineable phosphorus – on which the world’s food supply currently depends – are very much subject to depletion.)

While “renewable” biofuels like ethanol have represented a tremendous environmental tragedy in the United States, (you know, the road to hell…) resulting in the destruction of the Mississippi Delta ecosystem, for example, it happens that there is another approach to biomass utilization that is likely to prove far more benign than fermentation and distillation, and to the extent it is one of the few options capable of actually removing carbon dioxide from the air, deserves consideration. This is the thermal reformation of biomass, where biomass substitutes for the coal based scheme that Jimmy Carter proposed, and which frankly, we should all be grateful, never made it to the big time in the United States, the world’s most egregious consumer nation.

If the heat for driving this largely endothermic reaction is nuclear heat, the process is almost certain to be unambiguously carbon negative, particularly in the case where the carbon collected is utilized in products like polymers, carbon fibers, carbon nanotubes, refractory metal carbides (which would be necessary for nuclear heat at high enough temperatures to drive reformation reactions and thermochemical water splitting reactions), silicon carbides and extremely useful and exciting graphene, modified graphene and carbon nitrides. All of these products sequester carbon, and do so in an economically viable way, a “waste to products” way.

But there’s a problem. Biomass is not pure carbon, hydrogen, oxygen and nitrogen, of course: It also contains a considerable fraction of metals. The most problematic of these are the alkali metals, in particular potassium and sodium, and to a far lesser extent, lithium and rubidium.

Consider potassium.

A nice paper, the residue of Chinese grammar in the translation aside, which was recently released as a corrected proof, discusses the case quite well: Transformation and release of potassium during fixed-bed pyrolysis of biomass (Lei Deng, Jiaming Ye, Xi Jin, Defu Che, Journal of the Energy Institute, Corrected Proof, Accessed 9/19/17)

An excerpt:

The occurrences of ash deposition and high-temperature corrosion on superheaters have experienced three processes. First, parts of K, Cl and S go into the gas phase to form HCl, Cl2, SO2, SO3, KOH, KCl or K2SO4 during combustion of biomass [7,17–19]. Second, gaseous potassium salts condense in the gas phase and on the surface of superheater to form sticky particles and condensed layer, respectively. Then the ash deposition occurs when fly ash particles are trapped by the sticky condensed layer [9–11,16]. Finally, the ash deposit (mainly composed of KCl and K2SO4) and metallic matrix react with HCl, Cl2, SO2 or SO3, which would cause the growth of ash deposit and high-temperature corrosion [9,12,20–23]. Obviously, potassium is involved in all three processes and plays a crucial role. Compared with coal, biomass generally has much higher potassium content [5,24]. Although pyrolysis is different from combustion with regard to the surrounding environment and temperature fields, it is still meaningful to investigate the transformation and release of potassium during biomass pyrolysis, because pyrolysis happens at the primary stage of combustion. The investigation will be significantly helpful to understand the origin of ash deposition and high-temperature corrosion occurred on superheaters and find methods to solve these problems. It can also be useful to the design of biomass-fired boilers or other thermal conversion equipment.

A form of energy technology which requires constant replacement of infrastructure is neither sustainable nor environmentally benign, simply from a materials utilization standpoint, since the preparation of materials is generally energy intensive. (This is a big problem with another example of the failed expensive so called "renewable energy" industry, the wind industry, where the Danish database of turbines shows that the piece of crap turbines last, on average, less than 16 years before needing replacement.)

I personally believe that the materials science issues involved in high pressure reformers can be solved; however, the fact stands unequivocally before us that we are out of time, that anything we may or may not do to address climate change is already too late. It may be desirable therefore to build less than optimized biomass reformers, at least as a stopgap measure, until engineers and scientists can optimize materials to be more sustainable. We must have technologies that not only prevent the dumping of dangerous fossil fuel waste into our favorite waste dump, the planetary atmosphere, but also remediate the waste dump itself: Our atmosphere is a "superfund" site, and we must find a way to clean it.

It is therefore with interest that I read a recently published paper that purports to have developed a technology that can at least measure the performance of materials in high temperature reformers continuously, during operation.

The paper is here: Estimations of Gasifier Wall Temperature and Extent of Slag Penetration Using a Refractory Brick with Embedded Sensors (Debangsu Bhattacharyya, et al., Ind. Eng. Chem. Res., 2017, 56 (35), pp 9858–9867)

Some text from the paper:

"Almost two years..." That's even worse than wind turbines, and wind turbines suck. Moreover, the liner they're describing is chromia. Chromium is not an environmentally benign element with which to work. (To be fair, there are many other refractory oxides, carbides and nitrides with which one can envision accomplishing the same task, one of the most important of these being zirconia, ZrO2, and of course nitrides, like, say, thorium nitride.)

The authors go on in the paper to describe a type of brick with an embedded sensor. The brick is alumina and in it is embedded a thermoresistor made of tungsten carbide in an alumina matrix.

The sensor is arranged as an interdigital capacitor, a set of capacitors in series, which responds to temperature in two ways: through changes in the dielectric constant of the system, and through thermal expansion, which changes the distance between the capacitor electrodes. It also responds to changes in the dielectric constant (presumably measured against a baseline) caused by the intrusion of slag elements.
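To get a feel for why such a brick can sense both heat and slag, here is a minimal sketch in Python. It is not the authors' model: it swaps the interdigital geometry for the much simpler parallel-plate formula C = ε0·εr·A/d, assumes the brick expands uniformly in all directions, assumes the host permittivity drifts linearly with temperature, and treats slag penetration with a crude linear volume-fraction mixing rule. Every parameter value (the 9.8 host permittivity, the slag permittivity of 15, the expansion coefficient, the electrode dimensions) is hypothetical and chosen only for illustration.

```python
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity, F/m


def capacitance(eps_r, area_m2, gap_m):
    """Parallel-plate capacitance C = eps0 * eps_r * A / d, in farads."""
    return EPSILON_0 * eps_r * area_m2 / gap_m


def sensor_capacitance(temp_c, slag_fraction, *, t_ref_c=25.0, eps_host=9.8,
                       eps_slag=15.0, temp_coeff=1e-4, alpha=8e-6,
                       area0_m2=1e-4, gap0_m=1e-3):
    """Hypothetical capacitance of a sensor embedded in a ceramic brick.

    temp_c: brick temperature in degrees C.
    slag_fraction: assumed volume fraction (0..1) of the gap filled by slag.
    All keyword defaults are illustrative assumptions, not measured values.
    """
    dt = temp_c - t_ref_c
    # Uniform thermal expansion: every linear dimension grows by (1 + alpha*dT),
    # so the electrode area grows as stretch**2 and the gap as stretch.
    stretch = 1.0 + alpha * dt
    area = area0_m2 * stretch ** 2
    gap = gap0_m * stretch
    # Host permittivity drifts linearly with temperature (assumed coefficient).
    eps_host_t = eps_host * (1.0 + temp_coeff * dt)
    # Crude linear mixing: slag displaces part of the host ceramic in the gap.
    eps_eff = (1.0 - slag_fraction) * eps_host_t + slag_fraction * eps_slag
    return capacitance(eps_eff, area, gap)


baseline = sensor_capacitance(25.0, 0.0)   # cold, clean brick
hot = sensor_capacitance(1300.0, 0.0)      # hot, no slag
hot_slag = sensor_capacitance(1300.0, 0.2) # hot, 20% slag in the gap

for label, c in [("baseline", baseline), ("hot", hot), ("hot + slag", hot_slag)]:
    print(f"{label:>10}: {c * 1e12:.3f} pF")
```

The point of the exercise is that temperature and slag both shift the same measured quantity, which is presumably why the real sensor needs a baseline and some way of separating the two effects.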

Some remarks from the conclusion:

...For commercial application of the smart refractory brick in industrial gasifiers, many aspects need to be investigated. First, the brick needs to be tested under actual operating conditions for prolonged time. Second, impacts of the startup/shutdown and off-design operating conditions on the brick stability need to be evaluated. Third, response of the embedded sensors may be affected by unknown inputs. Fourth, because a wireless transmission system is being considered, there may be issues due to communication constraints, packet dropouts, and synchronization errors. The authors look forward to investigating some of these aspects in the near future.

One wishes the authors luck in a country, this one, where the three branches of government are controlled by people who hate science because they're too stupid to know any.

I appreciate the work of Dr. Debangsu Bhattacharyya, as well as his courage to bear his very cool name right in the heart of Trump country, West Virginia.

Interesting work.

Enjoy the rest of the work week.

A Polly Arnold Review on the Organometallic Chemistry of Neptunium.

There's this great video of Norah Jones at a tribute to Gram Parsons in which, by way of preface to performing his song "She," she says that on hearing each song performed at the tribute she thought, "Oh, that's my favorite song..." and then declares, "But this is really my favorite song..." (It's a wonderful performance.)

My kid, who I am happy to report is visiting me this weekend (coming home from college to celebrate my birthday), always laughs at me because every time I see a poster on a wall in a university building about the chemistry of an element, any element, I say "That's my favorite element..."

"Dad," he says, "Every element is your favorite element." (That's not true. I don't care all that much about the chemistry of terbium, or for that matter lutetium.)

One of my favorite elements, really, is neptunium, since I regard it as a key to reducing the risk of nuclear war as close to zero as possible, via the "Kessler Solution." (We cannot uninvent nuclear weapons, nor can we ever eliminate the risk of nuclear war, since the supply of uranium is inexhaustible. I explored this point elsewhere: On Plutonium, Nuclear War, and Nuclear Peace.)

In another post on the same website I wrote about some interesting chemistry associated with the actinide elements. In it I pointed out that Fritz Haber, the Nobel Laureate who in many ways had the greatest effect on day to day life of any Nobel Laureate (since, despite its greatly problematic environmental consequences, the world's food supply depends on the Haber process), noted very early on that uranium was likely to be a wonderful catalyst for nitrogen fixation: Uranium Catalysts for the Reduction and/or Chemical Coupling of Carbon Dioxide, Carbon Monoxide, and Nitrogen. In that post, I discussed the work of Polly Arnold, a world leader in organoactinide chemistry.

Now Dr. Arnold has written a review article in one of my favorite journals, Chemical Reviews:

Organometallic Neptunium Chemistry (Chem. Rev., 2017, 117 (17), pp 11460–11475)

An excerpt from the text:

The density of neptunium is, by the way, 19.5 tons per cubic meter, meaning that all the neptunium produced each year would fit into a cube 130 centimeters on a side. (This compares favorably with dangerous fossil fuel waste which, at 30 billion tons per year, can never be contained under any circumstances.) However, no one could ever construct such a cube, since that much neptunium greatly exceeds its critical mass, and precisely because this is true, the element is a wonderful potential nuclear fuel.
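The cube arithmetic is simple enough to check: the edge of a cube is the cube root of mass divided by density. The short Python sketch below uses the 19.5 tons per cubic meter figure given above; the annual production figure itself is not reproduced in this post, so the helper also works backward from the 130 cm edge. By this arithmetic a 130 cm cube holds roughly 43 tons, while the 50 tons mentioned below would make a cube about 137 cm on a side. The function names are just illustrative.

```python
def cube_edge_cm(mass_tonnes, density_t_per_m3=19.5):
    """Edge length (cm) of a solid cube of the given mass and density."""
    volume_m3 = mass_tonnes / density_t_per_m3
    return 100.0 * volume_m3 ** (1.0 / 3.0)


def mass_for_edge_tonnes(edge_cm, density_t_per_m3=19.5):
    """Mass (tonnes) of a solid cube with the given edge length."""
    edge_m = edge_cm / 100.0
    return density_t_per_m3 * edge_m ** 3


print(f"130 cm cube: {mass_for_edge_tonnes(130):.1f} t")
print(f"50 t cube edge: {cube_edge_cm(50):.1f} cm")
```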

50 tons of neptunium is a very valuable resource, especially because metallic neptunium has the very interesting property of forming a low melting eutectic with metallic plutonium, which opens interesting possibilities for the LAMPRE type reactors explored in the mid twentieth century by a generation smarter than ours.

One hopes that a future generation, smarter than ours, will utilize this neptunium resource to clean up the intractable mess our irresponsibility and dumb-assed ideas and fantasies have left them.

They may not, and should not, I think, forgive us.

Chemical Reviews, as an aside, is a wonderful place for chemists to catch up in areas in which they are non-specialists. I love that journal.

Enjoy the rest of the weekend.

Profile Information

Gender: Male

Current location: New Jersey

Member since: 2002

Number of posts: 33,518