NNadir

NNadir's Journal

The Chernobyl Forest Fires and Levels of Radioactive Elements Spread Through Europe by the Smoke.

There are three papers I'll discuss in this post, one of which concerns the always popular subject of the Chernobyl accident, which took place 35 years ago. Chernobyl, and its associated mortality, is always more interesting to people than other industrial "accidents." Everyone wants to talk about Chernobyl; very few people in my experience are interested in discussing the deaths since 1986 of between 200,000,000 and 250,000,000 people from air pollution.

Of course, the air pollution deaths are not "accidents." They are the deliberate result of wishful thinking, selective attention, absolute contempt for future generations, and, I argue, of Chernobyl and Fukushima, since, for example, Germany will be burning coal - and killing both people and the future - this winter because of Chernobyl.

The three papers I'll discuss all appear in the same issue of the scientific journal Environmental Science and Technology, Vol 55, Issue 20 (2021), which is, as of this writing (10/29/21), the current issue.

The first paper, the eye catching one, is this one:

Europe-Wide Atmospheric Radionuclide Dispersion by Unprecedented Wildfires in the Chernobyl Exclusion Zone, April 2020. The reference is this: Environmental Science & Technology 2021 55 (20), 13834-13848. The authorship is rather broad: I count 44 scientists from 22 European countries, and a larger number of research institutions I didn't bother to count. The lead corresponding author is from France, a country which will be immune from dangerous natural gas shortages this winter whether the wind is blowing or not. He is Olivier Masson, Institut de Radioprotection et de Sûreté Nucléaire (IRSN), Fontenay-Aux-Roses 92260, France. His institution's name is full of English cognates; Institut de Radioprotection et de Sûreté Nucléaire translates as "The Institute of Radioprotection and Nuclear Safety."

Today - any "today" and not just the "today" I spend writing about the scientific paper covering the 2020 Chernobyl forest fires - between 16,000 and 19,000 people will die from air pollution. The most recent data from which this figure can be calculated can be found here: Global burden of 87 risk factors in 204 countries and territories, 1990–2019: a systematic analysis for the Global Burden of Disease Study 2019 (Lancet Volume 396, Issue 10258, 17–23 October 2020, Pages 1223-1249). I reference this paper frequently in this space when people want to offer me an insipid lecture on either Chernobyl or Fukushima.
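The daily figure is simple arithmetic on an annual toll. Here is a minimal sketch, assuming a constant annual toll of 5.9 to 6.9 million deaths. These are illustrative values chosen to reproduce the 16,000 to 19,000 per day range above, not numbers read from the Lancet tables. The same arithmetic also checks the cumulative 200,000,000 to 250,000,000 figure for the 35 years since 1986:

```python
DAYS_PER_YEAR = 365.25


def deaths_per_day(annual_deaths: float) -> float:
    """Average daily deaths implied by an annual death toll."""
    return annual_deaths / DAYS_PER_YEAR


def cumulative_deaths(annual_deaths: float, years: float) -> float:
    """Total deaths over `years`, assuming (simplistically) a constant annual toll."""
    return annual_deaths * years


if __name__ == "__main__":
    # Assumed annual tolls bracketing the daily range quoted in the text.
    for annual in (5.9e6, 6.9e6):
        print(f"{annual:,.0f}/yr -> {deaths_per_day(annual):,.0f}/day, "
              f"{cumulative_deaths(annual, 35):,.0f} over 35 years")
```

A constant toll is a simplification, because the real annual figure changed over those decades. The point is only that the daily and cumulative numbers in the post are consistent with each other.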

I can safely guarantee that 44 scientists from 22 European countries will not write about the air pollution deaths today - any "today" - although, in truth, a much broader list of scientists and countries wrote the Lancet paper. That paper listed all sorts of causes of mortality, the overwhelming number of which are more dangerous than nuclear power, even though people often say that nuclear energy is "too dangerous" while things that kill in far more vast numbers, for instance eating fatty foods, are not considered "too dangerous." The same people who tell me that nuclear power is "too dangerous" imply that climate change is not "too dangerous." They imply this even though their favored fantasies about wind and solar energy have done absolutely nothing to address climate change.

During the 21st century, a century thus far associated with vast, expensive and wild enthusiasm for solar and wind energy, the rate at which the dangerous fossil fuel waste carbon dioxide has been rising in the planetary atmosphere has itself risen, from 1.52 ppm per year (the end of October 2000) to 2.54 ppm per year (the end of October 2021). I get these figures from a spreadsheet of carbon dioxide records from the NOAA Mauna Loa observatory that I have maintained for many years. They express a 12-month running average of the weekly carbon dioxide concentrations compared with the figures recorded ten years previously, converted to an annual rate.

(The observatory makes this comparison when releasing the weekly average figures: Weekly average CO2 at Mauna Loa. The annual trough for these concentrations, which usually occurs in September, appears to have been reached in the week beginning September 12, 2021, when the reading was 413.09 ppm. The annual maximum, usually observed in April, was observed in the week beginning April 25, 2021, when the reading was 420.01 ppm, the first reading ever to exceed 420 ppm. The reading for the same week ten years earlier was 393.41 ppm. The first reading to exceed 400 ppm was observed for the week beginning March 16, 2014, less than ten years ago.)
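The rate calculation described above can be sketched as follows. This assumes that each weekly reading is compared with the same week ten years earlier, that the difference is divided by ten, and that the weekly rates are smoothed with a 52-week running mean. The function names are hypothetical. Only the two April readings used in the example come from the text:

```python
from collections import deque
from typing import Iterable, Iterator


def annualized_rate(current_ppm: float, ten_years_earlier_ppm: float) -> float:
    """Average CO2 growth in ppm per year over a ten-year comparison."""
    return (current_ppm - ten_years_earlier_ppm) / 10.0


def running_mean(values: Iterable[float], window: int = 52) -> Iterator[float]:
    """Yield the mean of each complete window of `window` consecutive values."""
    buf: deque = deque(maxlen=window)
    for v in values:
        buf.append(v)  # the deque drops the oldest value once full
        if len(buf) == window:
            yield sum(buf) / window


if __name__ == "__main__":
    # The single week quoted in the text: April 25, 2021 vs. the same week in 2011.
    print(f"{annualized_rate(420.01, 393.41):.2f} ppm/yr")
```

For that single week, the rate is about 2.66 ppm per year. Feeding a year of weekly `annualized_rate` values through `running_mean` with a 52-week window gives a 12-month figure comparable to the 2.54 ppm per year quoted above, which smooths out week-to-week noise.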

All of this enthusiasm for solar and wind didn't address climate change. It isn't addressing climate change. It won't address climate change. I would thus argue that this enthusiasm for solar and wind as tools to address climate change is "too dangerous." It hasn't worked. It isn't working. It won't work. The deluded enthusiasm has made things become worse faster than they were before the enthusiasm was translated into action, ineffective action.

Before discussing the exciting paper on forest fires in the Chernobyl Exclusion Zone, which has become a sort of nature preserve, albeit a radioactive one, because the radiation keeps it from being overly trammeled by human beings, let me introduce the two other papers I'd like to discuss, which are arguably related to the Chernobyl event. They are these:

Air Quality Data Approach for Defining Wildfire Influence: Impacts on PM2.5, NO2, CO, and O3 in Western Canadian Cities (Stephanie R. Schneider, Kristyn Lee, Guadalupe Santos, and Jonathan P. D. Abbatt Environmental Science & Technology 2021 55 (20), 13709-13717)

Hydroxyl Radical Production by Air Pollutants in Epithelial Lining Fluid Governed by Interconversion and Scavenging of Reactive Oxygen Species (Steven Lelieveld, Jake Wilson, Eleni Dovrou, Ashmi Mishra, Pascale S. J. Lakey, Manabu Shiraiwa, Ulrich Pöschl, and Thomas Berkemeier Environmental Science & Technology 2021 55 (20), 14069-14079)

Let's start with the last paper, which outlines the mechanism by which air pollutants kill people: the paper on reactive oxygen species. Radical chemistry, which by the way is often associated with radiation and is part of the mechanism by which sunbathing causes melanoma, is in the best case associated with cell death; in the worst case, as in melanoma, it is a cause of cancer.

This is one of the important mechanisms by which air pollution kills people, but it is not the only one. The other is associated with placing additional strain on hearts.

From the introduction to the text:

PM2.5 is a complex mixture that can encompass thousands of different chemical constituents, each having distinct properties. PM2.5 originates from both natural and anthropogenic sources, including mineral dust from deserts, gasoline and diesel motor exhausts, tire and brake wear, power generation, residential energy use, agriculture, biomass burning, cooking, and cigarette smoking. Because of the great heterogeneity in both PM2.5 composition and sources, targeted PM2.5 pollution control is challenging, and, to date, there is no clear connection between one particular PM2.5 constituent and mortality estimates. (9−12) In spite of fundamental challenges studying causal relationships between air pollutants and health outcomes, it has been generally accepted that the underlying pathology of air pollutant exposure includes oxidative stress and systemic inflammation. (7,13−15) Moreover, in recent years, the oxidative potential of PM2.5 has become a common metric for measuring PM2.5 toxicity. (13,16−19) The oxidative potential of PM2.5 has been shown to vary greatly among sampling sites and proximity to the emitting source. (16,20−22) Based on case-crossover studies, it has been suggested that the risk of respiratory illness and myocardial infarction was increased in exposure episodes with high PM2.5 oxidative potential. (13,23)...

Reference 3 is the Lancet paper I routinely use to describe how many people have died each year from dangerous fossil fuel use since the Chernobyl reactor blew up in 1986, an event apparently far more interesting than more than 200,000,000 deaths from air pollution, a death toll three to four times greater than that of World War II, which is also more interesting than air pollution.

This paper focuses on air pollution deaths from particulates.

A little more from the introductory text:

NO2 is an irritant gas that has been linked to mortality in epidemiological studies. (34,35) However, because NO2 is often co-emitted with PM2.5 and other pollutants in combustion processes, it remains unclear if it poses an independent health risk. (36,37) In the ELF, NO2 can consume antioxidants and form nitrite (NO2–) in the process. (38,39) The oxidized forms of antioxidants are typically nontoxic, but their reactive intermediates have been suggested to form ROS in small yields in the case of the glutathiyl radical. (39,40)

"ELF" is defined in the abstract of the paper as "Epithelial lining fluid," which occurs in the lungs and in other places.

I find these comments about NO2 interesting. To my knowledge, which is limited, one possible mechanism for carcinogenesis associated with NO2 is the formation of nitrous acid. The known epidemiological association between air pollution and lung cancer may, to my mind, be tied to this: the reaction of nitrous acid with lysine side chains, which terminate in an amino group, suggests the possibility of forming nitrosamines. Nitrosamines are well known in the mechanism by which cigarettes cause cancer, since they can decompose to give strong alkylating agents that alkylate DNA guanine residues, leading to cancer. Lysine is an important amino acid found in lung tissue, since the amino group is involved in carbon dioxide transport. Probably this has been discussed somewhere; I should look.

Sorry for the aside musing.

After discussing other pollutants like ozone, the authors rely on a model called "KM-SUB-ELF" to trace the carcinogenic reactive oxygen mechanism detailing one way dangerous fossil fuels kill people, not that this is as interesting as Chernobyl's explosion 35 years ago. "KM-SUB-ELF" refers to the kinetic multi-layer model of surface and bulk chemistry of epithelial lining fluid.

Well, let's not spend too much time on this; let's work our way to getting to those far more interesting fires in the Chernobyl Exclusion Zone, a nature preserve formed by the fact that humans are scared, generally, to go there, although there is a scientific/tourist element of humans who do visit the region.

Here are a few dull pictures from the ELF paper just discussed, before we go, not that the relation between air pollution and lung cancer is interesting:

[Four figures from the ELF paper, with their captions, not reproduced here.]

I'll refer very briefly to the paper on Canadian wildfires and the pollutants they produce. In this paper, the authors try to distinguish wildfire contributions from the "normal" urban air pollutants that kill people, and to determine whether wildfires affect the levels of the same pollutants discussed in the uninteresting paper on ELF and reactive oxygen species. It is, apparently, a mixed bag, depending on whether the wind blows, just as pollution associated with electricity in California and Germany depends on whether the wind blows, although it is well known that forest fires emit these pollutants.

The introductory text:

In addition to economic and environmental impacts, wildfires can also affect air quality, as occurred from the Horse River fire. (3) Primary pollutants emitted by wildfires include greenhouse gases, such as carbon dioxide (CO2), methane (CH4), and nitrous oxide (N2O). (6,7) Also widely studied are emissions of particulate matter, particularly PM2.5 (defined as particulate matter less than 2.5 μm in diameter), and ozone (O3) precursors such as carbon monoxide (CO), volatile organic compounds (VOCs), and nitrogen oxides (NOx, which include NO2 and NO). There is also evidence of wildfire influence on enhanced O3 production. (8)

Exposure to pollutants contained in wildfire smoke has negative impacts on human health, with adverse respiratory, cardiovascular, and perinatal health outcomes that can lead to premature death. (9) In Canada, this can range from 54 to 240 deaths annually as a result of short-term exposure and 570 to 2500 annual premature mortalities from long-term exposure. (10) A recent toxicological study has suggested that PM2.5 from wildfires is more toxic per unit mass than PM2.5 from other sources. (11) It has become apparent that there are serious health risks from PM2.5 and O3 exposure below the regulatory standards, making the wildfire contributions to PM2.5 and O3 important to understand. (12)

Governmental agencies set air quality guidelines by recommending thresholds for selected air pollutants. In Canada, the PM2.5 threshold as implemented by the Canadian Ambient Air Quality Standard (CAAQS) is set at an annual mean of 8.8 μg/m³ and a 24 h mean of 27 μg/m³. (13) The U.S. equivalent, the National Ambient Air Quality Standard (NAAQS), is set at an annual mean of 12.0 μg/m³ and a 24 h average of 35 μg/m³. These standards reflect the recommendations of air quality standards published by the World Health Organization. (14) Extreme wildfire events are considered “exceptional events” in the NAAQS and the CAAQS since they do not reflect changes in anthropogenic emissions and are removed from the data when calculating the 24 h averages and the annual mean of PM2.5. (15) In the United States and Canada, there is no reporting standard about the impact of wildfires on air quality.
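To see why removing "exceptional events" matters, here is a minimal sketch that compares a year of daily PM2.5 averages against the thresholds quoted above, with and without days flagged as wildfire-affected. This is a simplification. The real CAAQS and NAAQS metrics use multi-year averages and percentile statistics, while this compares plain means. The daily data in the example are hypothetical:

```python
from statistics import mean

# (annual mean, 24 h mean) thresholds in micrograms per cubic meter, as quoted above.
THRESHOLDS = {
    "CAAQS": (8.8, 27.0),
    "NAAQS": (12.0, 35.0),
}


def summarize(daily_pm25, wildfire_flags, exclude_wildfire=True):
    """Return (annual mean, per-standard report), optionally dropping wildfire days."""
    kept = [v for v, fire in zip(daily_pm25, wildfire_flags)
            if not (exclude_wildfire and fire)]
    annual = mean(kept)
    report = {}
    for name, (annual_limit, daily_limit) in THRESHOLDS.items():
        report[name] = {
            "annual_exceeded": annual > annual_limit,
            "days_over_24h": sum(v > daily_limit for v in kept),
        }
    return annual, report


if __name__ == "__main__":
    # Hypothetical year: 355 clean days plus 10 heavy smoke days.
    daily = [6.0] * 355 + [150.0] * 10
    flags = [False] * 355 + [True] * 10
    for exclude in (True, False):
        annual, report = summarize(daily, flags, exclude_wildfire=exclude)
        print(f"exclude wildfire days={exclude}: annual mean {annual:.2f}, {report}")
```

In this made-up year, dropping the ten smoke days gives an annual mean of 6.0 μg/m³ and no exceedances. Keeping them raises the mean to about 9.9 μg/m³, above the Canadian annual threshold, with ten days over both 24 h limits.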

A major challenge to determining the impacts of wildfires on local air quality, and the focus of this paper, is determining when ambient air has been affected by wildfires. Overall, there is no widely accepted method to evaluate the influence of wildfires on local air quality, especially in populated regions. A combination of ground-based measurements, models, and satellite measurements has been used to make that determination, as summarized recently by Diao et al. (16) Understanding the contribution of wildfires to PM2.5 and other pollutants has become increasingly important with the increasing frequency and severity of wildfires as the climate warms...

Who cares? Chernobyl!

There were a lot of fires in British Columbia this summer, soon to fall down the memory hole along with the Deepwater Horizon oil disaster, among other things. The British Columbia, Washington State, Oregon and California fires were caused by extreme heat, in many places heat so extreme and so record-breaking that people literally dropped dead from it.

However, according to the rhetoric I hear and the way I choose to interpret it, most people think that nuclear power is "too dangerous" implying that climate change is not "too dangerous."

We live in an age where insanity is celebrated and gleefully reported.

Nuclear power is "too dangerous" because...because...because...Chernobyl. Fukushima. ...and...of course...as a professor at Princeton University recently reminded me while talking about how solar energy is so great...Three Mile Island.

These three nuclear events outweigh everything...hundreds of millions of air pollution deaths at a continuing rate of around 18,000 deaths a day, the coasts of continents in flames, people dropping dead in the streets from heat...everything.

Why? Because Chernobyl is radioactive. This brings me to the first paper referenced in this post, the forest fires - probably induced by climate change, but who cares? - in the Chernobyl Exclusion Zone, where there are lots of living things that are not human beings.

So let's begin with the scary graphic introducing the paper on the Chernobyl Exclusion Zone forest fires:

Scary enough for you? Look at all those sinister looking radioactivity symbols flying up!

Now let's turn to the introduction to the text:

Herein, we investigate the devastating April 2020 wildfires, which lasted for about 4 weeks in the Ukrainian part of the contaminated areas around the CNPP. The detailed geographic analysis and timeline is provided in the SI. The fire situation in the CEZ and bordering areas was characterized by a combination of numerous ignitions and subsequent spread of fires. Their magnitude varied according to different parameters: (1) biomass type, vegetation density, and location accessibility (forest, meadow, peatland, and marshland); (2) meteorological parameters (wind speed, wind direction, precipitation frequency, and amount). These multiple factors hindered firefighting, despite the mobilization of nearly 400 firefighters and 90 specialized aerial and terrestrial vehicles (two AN-32P airplanes, one Mi-8 water-bombing helicopter, heavy engineering equipment, and seven additional road construction machines of the Armed Forces of Ukraine). The first 3 weeks of April saw the development of particularly large and numerous fires. Two main fire areas were identified during this period: in the Polisske district and in the Kopachi-Chistogalovka-CNPP cooling pond (less than 12 km from CNPP). Daily information about burned areas including vegetation cover, contamination density, and radionuclide emissions were then published by the Ukrainian Hydrometeorological Institute (UHMI). (21) According to the UHMI, 870 km² were burned in total, including 65 km² in proximity to the CNPP and 20 km² on the left bank of the Pripyat river...

Later on the authors turn to some background information, of which many people are aware because we talk far more about Chernobyl than we do about the more than 200,000,000 who died from air pollution since the reactor exploded:

It is unlikely that very much Ce-144 (cerium-144) remains after 35 years, and I have no idea why the authors mention it other than to discuss historical fires, such as the 1992 fire, where some may have survived. Its half-life is 284.9 days. The date of the 1992 fires is not readily available, but if we arbitrarily choose July 15, 1992, assuming the fire was in midsummer, less than 0.4% of the original cerium-144 would have remained. As of this writing, 10/30/2021, only about 2 × 10⁻¹⁴ of it remains, roughly two parts in a hundred trillion.
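The cerium-144 figures follow from the decay law, fraction remaining = 2^(-t/T½). Here is a minimal sketch, assuming the clock starts on the date of the explosion, April 26, 1986, and using the arbitrary July 15, 1992 fire date chosen above:

```python
from datetime import date

CE144_HALF_LIFE_DAYS = 284.9      # half-life quoted in the text
ACCIDENT = date(1986, 4, 26)      # assumed start of the decay clock


def fraction_remaining(elapsed_days: float, half_life_days: float) -> float:
    """Fraction of the original atoms left after elapsed_days: 2 ** (-t / T_half)."""
    return 2.0 ** (-elapsed_days / half_life_days)


if __name__ == "__main__":
    checkpoints = {
        "assumed 1992 fire date": date(1992, 7, 15),  # arbitrary midsummer guess
        "date of writing": date(2021, 10, 30),
    }
    for label, when in checkpoints.items():
        days = (when - ACCIDENT).days
        frac = fraction_remaining(days, CE144_HALF_LIFE_DAYS)
        print(f"{label}: {days} days elapsed, fraction left {frac:.2e}")
```

That comes to 2,272 days and a remaining fraction of about 3.98 × 10⁻³ for the 1992 date. For the date of writing, it comes to 12,971 days and about 1.97 × 10⁻¹⁴.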

The half-life of cesium-137 is given in the paper both as a rounded number, 30.1 years, and as the generally accepted 30.07 years one sees most often. A more recent and more precise figure, from the ENDF file at Brookhaven National Laboratory, gives the half-life as 9.4925E+08 seconds, which works out to 30.08 years; whether the more precise figure is also accurate is not for me to say.

Using the ENDF value for the half-life it seems that as of this writing 44.1% of the original cesium-137 remains.
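Here is a minimal sketch of the cesium-137 arithmetic. It converts the ENDF half-life from seconds to years and applies the same decay law to the interval from April 26, 1986 to October 30, 2021. Using the Julian year of 365.25 days for the conversion is an assumption. It also shows that the 30.07 and 30.1 year values give the same answer to three figures:

```python
from datetime import date

ENDF_HALF_LIFE_S = 9.4925e8        # seconds, ENDF value quoted in the text
JULIAN_YEAR_S = 365.25 * 86400     # assumption: Julian year for unit conversion


def half_life_years(seconds: float) -> float:
    """Convert a half-life in seconds to years."""
    return seconds / JULIAN_YEAR_S


def fraction_remaining(elapsed_s: float, half_life_s: float) -> float:
    """Fraction of the original atoms left: 2 ** (-t / T_half)."""
    return 2.0 ** (-elapsed_s / half_life_s)


elapsed_s = (date(2021, 10, 30) - date(1986, 4, 26)).days * 86400

if __name__ == "__main__":
    print(f"ENDF half-life = {half_life_years(ENDF_HALF_LIFE_S):.2f} years")
    for label, hl in (("ENDF", ENDF_HALF_LIFE_S),
                      ("30.07 y", 30.07 * JULIAN_YEAR_S),
                      ("30.1 y", 30.1 * JULIAN_YEAR_S)):
        print(f"{label}: {100 * fraction_remaining(elapsed_s, hl):.1f}% remaining")
```

All three half-life values round to 44.1% remaining, so at this precision the choice among them does not matter.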

The text suggests that most of this remaining cesium-137 is sequestered in soils; the adsorption of cesium onto minerals is well known and this is unsurprising. Nevertheless, some radioactivity is taken up by the forest and ends up in the flammable litter, and the question is how much is volatilized in the fires, the question the paper with the scary graphic in the intro takes up.

Apparently these fires scare the shit out of people, particularly journalists. Here, for instance, is an article on this subject from the popular press, from the Atlantic, a publication I generally like even though, as we shall see below, the journalists there are incompetent to discuss most issues in science: FOREST FIRES ARE SETTING CHERNOBYL’S RADIATION FREE. At least, somewhat surprisingly, the authors include a subtitle that refers to a reason that the world's forests are burning up. Here's the subtitle:

Let me leave my bad habit of bashing the woeful scientific ignorance of journalists and return to the scientific paper detailing how much radioactivity the fires released, using the only units that matter, units of concentration.

The graphics give a feel for the concentrations per cubic meter; the volume of a breath is about 0.002-0.003 cubic meters for an average human adult. I will explain the "μBq" unit on the ordinate below.

To wit:

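[Figure: plutonium and cesium-137 activity concentrations in air, in μBq/m^3; image and caption not reproduced.]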

A few years back, a correspondent on this website called up one of my old pro-nuclear posts to tell me all about a tunnel that collapsed at the Hanford Reservation, the site where plutonium for nuclear weapons was historically made. The point to be made, I guess, is that nuclear power is "too dangerous." To my mind, a correspondent using this particular example has his or her head so far up his or her ass that, if he or she is wearing glasses, the screws in the frames might damage his or her duodenum.

Although I have had the distinct onus of attracting a broad array of anti-nukes, who want to raise specious points about nuclear energy, this on a planet where approximately 18,000 people die per day from air pollution because we don't use nuclear power, this particular correspondent sticks in my mind.

I've come rather to like the guy or gal in question, because he or she often whines that "I didn't say xxx or yyy or zzz" and - this apparently ranks in his or her mind as a crime against humanity worse than indifference over approximately 18,000 deaths per day - that I am guilty of producing a "strawman."

I have little tolerance for ignorance that kills people. To me, anti-nuke ignorance is precisely equivalent to anti-vax ignorance, worse actually because on the entire planet there have been few, if any, days where 18,000 people died from Covid in a single day.

Air pollution is a plague, even if we carry on day to day pretending that more important issues face us.

Nevertheless, again, I rather like the guy or gal, and take him or her off my ignore list from time to time to check in and hear him or her whine about "strawmen." One claimed "strawman" was my sarcastic remark that an anti-nuke such as this person might be more concerned about a single radioactive atom decaying in his or her tiny little brain than, say, about climate change or air pollution deaths.

Neither of these disasters is, to my mind, necessary. The technology to eliminate them exists; it's neither easy nor cheap to utilize this technology, and it will involve the minds of people with spectacular intellects, education and training, but it exists. It is more effective than tearing the shit out of virgin wilderness with diesel trucks and bulldozers to render that wilderness into industrial parks for wind turbines that will be landfill in 20 to 25 years or less. The use of this technology will require a race of human beings who once existed but have nearly vanished, a race of human beings who care more for the future than they do for the parochial concerns of the contents of their bourgeois pockets and pocketbooks.

So why do I like the guy or gal with the big concern about the collapsed tunnel at the nuclear weapons site? It has to do with measuring the depth of stupidity. One can actually learn a lot by measuring such a depth.

I had great fun, and learned an awful lot about the geochemistry of anthropogenic radionuclides in writing this post, even though I would be the first to agree that no one should read it because it's desultory, boring, and extremely esoteric. (I write to learn.)

828 Underground Nuclear Tests, Plutonium Migration in Nevada, Dunning, Kruger, Strawmen, and Tunnels

In that piece I discussed the unit "μBq" while measuring the depth of stupidity associated with this person's "strawman" complaint, because it turns out that the unit very much involves a single atom. The becquerel, the unit of radioactivity named after the discoverer of nuclear decay, corresponds to the decay of a single radioactive atom per second. One atom. To make sense of a "microbecquerel," one must take its reciprocal, which gives the number of seconds, on average, that it takes to observe a single decay: a million seconds, or about 11.6 days.

In the graphic immediately above, designed to show the ratio between plutonium and cesium, we see that most cesium-137 data points were below 10,000 μBq/m^3. This means that at 10,000 μBq/m^3 one must wait, on average, 100 seconds to observe the decay of a single atom of radiocesium in a cubic meter of air, and at 100,000 μBq/m^3, ten seconds. An adult human breath is about 0.002 to 0.003 cubic meters.
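The reciprocal rule is easy to check in code. Here is a minimal sketch. It assumes a breath volume of 0.0025 cubic meters, the midpoint of the range given above. The function names are illustrative:

```python
def seconds_per_decay(activity_ubq: float) -> float:
    """Mean seconds between decays for an activity given in microbecquerels.

    1 uBq is 1e-6 decays per second, so the mean wait is 1e6 / activity.
    """
    return 1e6 / activity_ubq


def seconds_per_decay_in_breath(conc_ubq_per_m3: float,
                                breath_m3: float = 0.0025) -> float:
    """Mean seconds between decays in the air of a single breath.

    The default breath volume is an assumption: the midpoint of the
    0.002-0.003 cubic meter range given in the text.
    """
    return seconds_per_decay(conc_ubq_per_m3 * breath_m3)


if __name__ == "__main__":
    for conc in (10_000.0, 100_000.0):
        per_m3 = seconds_per_decay(conc)
        per_breath_h = seconds_per_decay_in_breath(conc) / 3600
        print(f"{conc:>9,.0f} uBq/m^3: one decay per {per_m3:g} s per m^3, "
              f"about one per {per_breath_h:.1f} h in a single breath")
```

Within the air of one breath, the wait stretches to roughly 11 hours per decay at 10,000 μBq/m^3, and about 1.1 hours at 100,000 μBq/m^3.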

I'll post a relevant excerpt from my diatribe about the 828 nuclear tests:

K-40 has, by the way, a very energetic decay, well over a million electron volts per atom.

One often hears the statement, usually from poorly educated anti-nukes, that "there is no safe level of radioactivity." This is contradicted by the fact that every and any human being would die without being radioactive; a normal adult human being needs to have about 4000 nuclear decays in their flesh per second in order to live.
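The "about 4000 decays per second" figure can be estimated from the body's potassium content using A = λN. Here is a minimal sketch. It assumes an adult carries about 140 g of potassium, which is a commonly cited estimate that varies with body size. It also uses a K-40 isotopic abundance of 0.0117% and a half-life of 1.248 billion years:

```python
import math

AVOGADRO = 6.02214076e23        # atoms per mole
K_MOLAR_MASS = 39.0983          # g/mol, natural potassium
K40_ABUNDANCE = 1.17e-4         # atom fraction of K-40 in natural potassium
K40_HALF_LIFE_Y = 1.248e9       # years
YEAR_S = 365.25 * 86400         # seconds per (Julian) year


def k40_activity_bq(potassium_grams: float) -> float:
    """Activity A = lambda * N of the K-40 in a mass of natural potassium."""
    atoms_k40 = potassium_grams / K_MOLAR_MASS * AVOGADRO * K40_ABUNDANCE
    decay_const = math.log(2) / (K40_HALF_LIFE_Y * YEAR_S)   # per second
    return decay_const * atoms_k40


if __name__ == "__main__":
    # Assumed potassium content of an adult body.
    print(f"{k40_activity_bq(140.0):,.0f} decays per second")
```

This gives roughly 4,400 decays per second, consistent with the order of magnitude stated above.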

Facts matter.

The statement that "there is no safe level of radioactivity" is based on an oft-applied assumption in radiobiology called the "Linear No Threshold" assumption, or "LNT." The LNT has never been definitively proved, nor has it been definitively disproved. If it were true, it would mean that some people - obviously not all people - are killed by an element of their bodies without which they could not live. The construction of a control group will therefore always be impossible, since the control group would be killed by eliminating potassium from their bodies.

The LNT model, which informs most safety information in connection with radiation to this day, is largely based on work conducted by a husband and wife research team working with mice, the Russells working at Oak Ridge National Laboratory, building on work . They had a co-worker, Richard Shelby, their Ph.D. student in fact, who on review of their data, discovered that they had underestimated the mutation rate in their control group, thus placing their data in serious question.

A Health Scientist at the University of Massachusetts, Edward Calabrese, has been on a quest - whether it is quixotic or not is not relevant - to have the LNT model discarded. He is not alone in this quest; others question it as well, but to me, it's angels dancing on the head of a pin. It may be true that some people occasionally have cancers induced by potassium-40 decay, but attempts at preventing this from ever happening would kill any experimental subjects involved in the matter.

Some of Calabrese's polemics make for interesting reading. Here are some examples:

The linear No-Threshold (LNT) dose response model: A comprehensive assessment of its historical and scientific foundations (Calabrese, Chemico-Biological Interactions 301 (2019) 6–25)

On the origins of the linear no-threshold (LNT) dogma by means of untruths, artful dodges and blind faith (Calabrese, Environmental Research 142 (2015) 432–442)

The threshold vs LNT showdown: Dose rate findings exposed flaws in the LNT model part 1. The Russell-Muller debate (Calabrese, Environmental Research, 154 (2017) 435–451)

Support for rejecting the LNT comes from the known mechanisms for DNA repair; when these fail, cancer is often the result. If not addressed by innate immunological responses - most of us generate cancer cells fairly regularly but unless these cells possess immunological checkpoints, they are destroyed by an immune response - a cancerous disease state will result.

Calabrese is not alone in his considerations. The search terms LNT and validity in Google scholar will generate over 35,000 hits, over 3,000 in the last 5 years.

It is difficult however, in my view to argue that a single atom of cesium-137 decaying every hundred seconds, or even every ten seconds is going to result in mass fatalities.

The Chernobyl forest fire is hoopla if the concern is radiation. The more serious concern with that fire is the same as with every fire, including those in Canada, fires clearly driven by climate change, which half a century of wind and solar energy worship has failed miserably to address. That concern is air pollutants, with their far more serious effect on the "ELF" described above.

The Chernobyl fire paper does contain a few more graphics and comments. Here they are:

[Three further figures from the paper, with their captions, not reproduced here.]

The later sections near the end of the paper include this language:

Chernobyl-labeled radionuclides aside, naturally occurring radionuclides with a high dose coefficient and that are prone to emission during a wildfire have to be considered. This is typically the case for, among others, ²¹⁰Po (T1/2 = 138 days) as a progeny of the relatively long-lived ²¹⁰Pb (T1/2 = 22 years), which accumulates in the biomass through foliar uptake. Polonium-210 has an effective dose coefficient of 3.3 × 10⁻⁶ Sv/Bq and 3.0 × 10⁻⁶ Sv/Bq for an adult of the public and for a worker, respectively, and given a type M solubility corresponding to chloride, hydroxide, volatilized Po, and all unspecified Po forms. Polonium is among the radioactive elements with a low fusion point (about 254 °C for elemental Po under 1 atm). The volatilization points of common polonium compounds are about 390 °C and thus much lower as compared to mean wildfire temperatures (>500 °C with maximum of 1000–1200 °C). (5) As a result, ²¹⁰Po is easily emitted during a fire...
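A dose coefficient converts inhaled activity into committed effective dose: dose = concentration × volume breathed × coefficient. Here is a minimal sketch using the Po-210 coefficients quoted above. The example inputs are hypothetical and not measurements from the paper: 100 μBq/m^3 for 30 days at an assumed breathing volume of 22 cubic meters per day:

```python
PO210_COEFF_PUBLIC_SV_PER_BQ = 3.3e-6   # adult member of the public, quoted above
PO210_COEFF_WORKER_SV_PER_BQ = 3.0e-6   # worker, quoted above


def inhaled_activity_bq(conc_ubq_per_m3: float,
                        breathing_m3_per_day: float,
                        days: float) -> float:
    """Total activity inhaled, in becquerels (1 uBq = 1e-6 Bq)."""
    return conc_ubq_per_m3 * 1e-6 * breathing_m3_per_day * days


def committed_dose_sv(activity_bq: float,
                      coeff_sv_per_bq: float = PO210_COEFF_PUBLIC_SV_PER_BQ) -> float:
    """Committed effective dose: inhaled activity times the dose coefficient."""
    return activity_bq * coeff_sv_per_bq


if __name__ == "__main__":
    # Hypothetical exposure: 100 uBq/m^3, 22 m^3/day (assumed), 30 days.
    intake = inhaled_activity_bq(100.0, 22.0, 30.0)
    print(f"intake {intake:.3f} Bq -> {committed_dose_sv(intake) * 1e6:.2f} microsieverts")
```

Under those assumptions, the intake is about 0.066 Bq, and the committed dose is about 0.22 microsieverts.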

As the world burns, not just at Chernobyl, where people are prone to pay attention, but along the coasts and interiors of all the temperate and tropical continents, fires that fly down the memory hole, a little critical thinking would be in order.

Nuclear energy is not risk free, nor should it be required to be, unless some technology of lower risk exists, and I have convinced myself that no such technology exists. In times of climate change, nuclear energy, much like potassium (its possible risks delineated here with a dose of sarcasm), is essential to the survival not only of our way of life, but of life itself.

Enjoy the rest of the weekend.

Arizona Nuclear Plant to Produce Hydrogen to Power Dangerous Natural Gas Plants.

The article is here, in Power Magazine: Power-to-Power Hydrogen Demonstration Involving Largest U.S. Nuclear Plant Gets Federal Funding

I want to be perfectly clear about something: the proposed system is a thermodynamic nightmare and wastes energy. However, the Palo Verde Nuclear Plant is cooled by wastewater, and to the extent that the wastewater-to-hydrogen-to-water scheme works, again wasting energy in a region where there is little energy to waste, it will recover absolutely clean water, with nothing in it except hydrogen, oxygen, and perhaps very minor amounts of air pollutants condensing with the steam.
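Why this is a "thermodynamic nightmare" becomes clearer when you multiply the efficiencies of stages in series. Here is a minimal sketch. Only the roughly 33% heat-to-electricity figure is the post's own estimate for the plant. The electrolysis efficiency (70%) and hydrogen turbine efficiency (60%) are illustrative assumptions, not numbers from the article:

```python
from math import prod


def chain_efficiency(*stage_efficiencies: float) -> float:
    """Overall efficiency of energy conversion stages operating in series."""
    return prod(stage_efficiencies)


HEAT_TO_ELECTRICITY = 0.33   # the post's estimate for the plant
ELECTROLYSIS = 0.70          # assumption: electricity -> hydrogen
HYDROGEN_TURBINE = 0.60      # assumption: hydrogen -> electricity

if __name__ == "__main__":
    round_trip = chain_efficiency(ELECTROLYSIS, HYDROGEN_TURBINE)
    from_heat = chain_efficiency(HEAT_TO_ELECTRICITY, ELECTROLYSIS, HYDROGEN_TURBINE)
    print(f"electricity -> hydrogen -> electricity: {round_trip:.0%}")
    print(f"reactor heat -> final electricity: {from_heat:.1%}")
```

With these assumed stage efficiencies, only about 42% of the electricity survives the hydrogen round trip. That is under 14% of the original reactor heat.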

An excerpt:

The funding formally kicks off the demonstration, which will involve multiple stakeholders in research, academia, industry, and state-level government. On a federal level, that includes Idaho National Laboratory (INL), the Idaho Falls-sited laboratory that is becoming a central hot spot for nuclear integration research and development, as well as the National Energy Technology Laboratory (NETL), and the National Renewable Energy Laboratory. The Electric Power Research Institute, along with Arizona State University, and the University of California, Irvine will also collaborate on the project. These entities have been vocally supportive of the DOE’s June 7–launched Energy Earthshots Initiative, which aims to reduce the cost of clean hydrogen by 80% to $1 per kilogram (kg) over the next 10 years.

The project’s private partners will involve OxEon, a company that specializes in solid oxide fuel cells, and Siemens Energy, a gas turbine manufacturer that is heavily invested in decarbonized gas power and wants to produce heavy-duty gas turbines that are capable of combusting 100% hydrogen in volume by 2030. Siemens Energy, notably, is also a key stakeholder in HYFLEXPOWER, a European Union-backed four-year project to demonstrate a fully integrated power-to-hydrogen-to-power project at industrial scale and in a real-world power plant application.

Also notably involved is the Los Angeles Department of Water and Power (LADWP), a public power entity that in May 2021 outlined plans to convert four Los Angeles–area gas-fired power plants to run on hydrogen under the HyDeal LA project in coordination with the Green Hydrogen Coalition. That coalition, which comprises power companies, pipeline manufacturers, and financial firms, is currently working to put the necessary infrastructure in place...

Electricity is a thermodynamically degraded form of energy.

The Palo Verde Nuclear Plant's three reactors are pressurized water reactors that came on line in 1986 and 1988, and thus were based on 1970s technology. Although they produce the cleanest energy in the American Southwest, they have low thermodynamic efficiency, probably around 33%. The failure of so called "renewable energy" to demonstrate a shred of reliability means that the American Southwest, notably California, is dependent on the combustion of dangerous natural gas, the waste of which is dumped directly into the planetary atmosphere, where it is killing the planet at an increasing rate.

Rather than make hydrogen, it would be far more desirable to ship excess electricity to that gas-dependent state, California. The carbon intensity of California's electricity, depending on when the wind is blowing and whether there is water in the reservoirs, seldom falls below 300 g CO2/kWh. Even at its best, California's grid thus produces about 1200% as much carbon dioxide per kWh as does the Palo Verde Nuclear Plant, which is roughly 25 g CO2/kWh as reported in most scientific publications. California's carbon performance will degrade further when the Diablo Canyon plant is shut by appeals to fear and ignorance. Therefore there really isn't any room to waste Palo Verde electricity on hydrogen.
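The 1200% figure is simply the ratio of the two carbon intensities quoted above. A minimal Python check, using only those two numbers (the constant and function names are my own, for illustration):

```python
# Carbon intensities quoted in the text, in g CO2 per kWh.
CALIFORNIA_BEST_G_PER_KWH = 300.0  # California "seldom" falls below this
NUCLEAR_G_PER_KWH = 25.0           # typical published figure for the nuclear plant


def intensity_ratio(grid: float, nuclear: float) -> float:
    """How many times more CO2 per kWh the grid emits than the nuclear plant."""
    return grid / nuclear


ratio = intensity_ratio(CALIFORNIA_BEST_G_PER_KWH, NUCLEAR_G_PER_KWH)
print(f"{ratio:.0f}x, i.e. {ratio * 100:.0f}% of the nuclear figure")
# prints "12x, i.e. 1200% of the nuclear figure"
```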

A more modern approach, utilizing the high temperatures realized in operable nuclear fuels, would be to build a heat network using nuclear heat which would include a thermochemical cycle in its path, with an overall plant efficiency, were one to include the energy required for desalination, approaching 80%.

Nuclear profits sustain Bulgaria in gas crisis

The measures were announced on 21 October by Prime Minister Stefan Yanev in a national address. He said the subsidy "will benefit over 630,000 non-residential end consumers" with the grant distributed automatically thanks to a contract between the government and the retail electricity suppliers, including the suppliers of last resort that have stepped in after other energy firms went bust.

The benefit will be backdated from 1 October and will last until 30 November, at an estimated cost of BGN450 million. Yanev said, "The funds will be provided from the state budget at the expense of the presentation of grants amounting to BGN450 million from Kozloduy nuclear power plant." He added, "In the next reporting year, the dividend due to be paid by [Kozloduy's owner] Bulgarian Energy Holding will be reduced by the indicated amount."

The upgraded subsidy improves on measures Yanev discussed on 19 October when speaking on TV1, which would have covered 250,000 businesses with a BGN30 per MWh payment. At the time, Yanev said subsidies would "support the economically weaker companies, which are also the largest employer in the country..."

...Facing the need to phase out coal - which provides 40% of electricity - while also maintaining energy security, Bulgarian policymakers would like to expand nuclear capacity either at Kozloduy or at Belene, a new site also on the Danube. However, in a recent interview with Trud newspaper the chairman of the Bulgarian Atomic Forum, Bogomil Manchev, said: "There is no longer an option for either one project or the other. The 'or' has disappeared."

Bulgaria is keen for the European Commission to decide positively that nuclear power can be included in its taxonomy of sustainable investments and is a member of the ten-nation 'Nuclear Alliance' of EU countries calling for this. Yanev raised the issue with the Vice President of the European Commission, Frans Timmermans, who visited Bulgaria earlier this month...

The reliance on dangerous natural gas to cover for the lack of reliability of so called "renewable energy" has reached its limit. The requirement for redundant systems to do what one system can do is not only extremely wasteful, but it is also extremely expensive. The dependence on dangerous natural gas is no more sustainable than are the solar and wind industries, since all three industries rely on unsustainable mining activities.

As always, the most economically disadvantaged people pay the price for the delusional affectations of the bourgeoisie.

A Mother of Crystallography, Helen Megaw (1907-2002).

My first interest in a class of crystals known as perovskites came when I was considering certain oxygen-transporting crystals in connection with a thermochemical water-splitting concept I was dreaming up. These crystals were doped oxide perovskites, and back in 2015 I actually compiled a spreadsheet-based list of around 150 of these known substances, searchable by compositional elements, along with the rates at which oxygen permeates through them.

There are much better thermochemical cycles than the one I thought up, but dreams die hard, and even bad ideas have value.

Speaking of bad ideas, there is a lot of talk these days about perovskite solar cells which involve lead chemistry.

I oppose these solar cells, but that's another matter.

I came across a solar cell perovskite paper today - one really can't avoid them since so many are written - and it got me thinking about the class of perovskites in general, and how the world came across them, and I learned that their structure was first elucidated by a great woman scientist, Helen Megaw, who worked in x-ray crystallography and who was one of the leading scientists in this field throughout the 20th century.

Here's a nice little paper published in her honor: Helen D. Megaw (1907–2002) and Her Contributions to Ferroelectrics (M. Glazer, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, vol. 68, no. 2, pp. 334-338, Feb. 2021)

Some excerpts:

Helen Dick Megaw was born on June 1, 1907, into a most distinguished and influential Northern Irish family. Her father, Robert Dick Megaw, was a famous Chancery Judge in the High Court of Justice of Northern Ireland and an Ulster politician. Also, her uncle, Major-General Sir John Wallace Dick Megaw, was a director of the Indian Medical Service, while one brother built the Mersey tunnel (in Liverpool, U.K.), the Dartford tunnel (London), the Victoria underground line (London), and Battersea (London) power station. Another brother, Sir John Megaw, was a Lord Justice in the Court of Appeal, and one of her sisters researched diet and health in the 1930s and marriage laws in Uganda in the 1950s. A most extraordinary family background!...

...One of her aunts was secretary to the Mistress of Girton College, Cambridge (one of the only two Cambridge Colleges at the time exclusively set up for women: the College like all others in Cambridge now accepts both men and women) and Helen’s ambition was to study there. She won an exhibition to the College in 1925 but for financial reasons decided to go to Queen’s University, Belfast. The next year, she won a scholarship, and this time, it proved possible for her to take up a place at Girton. Initially, she had intended to read Mathematics, but she had enjoyed Chemistry at school, and on her teacher’s advice, she opted for Natural Sciences so that she could study both science and mathematics. She thought that the regulations required her to study three subjects, and she planned to study chemistry, physics, and mathematics. However, her Director of Studies, Miss M. B. Thomas, explained that she was required to study three experimental subjects (mathematics being an optional extra), and she advised Megaw to choose mineralogy as her third experimental subject. Had Helen known that she could have selected geology instead of mineralogy, she would have opted for geology, and, in all probability, she would not have become a crystallographer! ...

...So, it was that she became a research student under the renowned, and some would say infamous, John Desmond Bernal, investigating the thermal expansion of crystals, and the atomic structure of ice and the mineral hydrargillite (a hydroxide of aluminum). One of Bernal’s students at the same time was the young Dorothy Crowfoot, later to become famous as the Nobel Prize winner Dorothy Hodgkin, and Helen and Dorothy became firm friends...

... Bernal was a stimulating influence on Helen and happily confirmed her interest in crystals. Her choice of crystallography was a wise one, because it was the one scientific discipline then, thanks principally to W. H. and W. L. Bragg, which had already established itself as a place in which both men and women could engage on an equal basis, and she never, or rarely, was aware of any form of discrimination. She started work on the structure of the mineral hydrargillite, a form of Al(OH)3. Fig. 2 shows a photograph of a model of its crystal structure. Although rather rough, having seen better times, this model was her first crystal model constructed with help from Dorothy Hodgkin in 1934. Megaw’s main Ph.D. work with Bernal was a study of the crystal structures of ice. Helen’s accurate and demanding investigation of ice and heavy ice showed that the hydrogen atoms were involved in bonding between two oxygens. She and Bernal surveyed the known structures of hydroxides and of water and concluded that there were two types of hydrogen bonds. In one kind, found in ice, the hydrogen oscillates between two positions, each being closer to one of the oxygen atoms than the other. In the other type, the hydrogen is bonded more strongly to one of the two oxygen atoms. In honor of her discoveries of the nature of ice, an island in Antarctica was named Megaw Island in 1962...

... In 1934, Helen spent a year with Prof. Hermann Mark in Vienna and then moved to work briefly under Prof. Francis Simon at the Clarendon Laboratory, Oxford. This was followed by two years of school teaching before taking up a position at Philips Lamps Ltd. in Mitcham in 1943. It was here that she worked out the crystal structure of a significant industrial material, barium titanate, which is used in capacitors, pressure-sensitive devices, and in a variety of other electrical and optical applications. This material, which crystallizes in the so-called perovskite structure, belongs to the class of materials known as ferroelectrics, discovered initially around 1935. Because of its strategic military importance, much of the work was secret, and Helen was only allowed to publish her work on the structure provided that she did not mention its useful properties! This structure is so famous and important that Helen’s name is permanently associated with it and with perovskite structures in general. In the ferroelectrics community, Helen’s contributions are notably recognized, and her book Ferroelectricity in Crystals published in 1957 was the first of its kind and soon became a classic text (Fig. 4)...

Figure 4:

The article says that she gave the idea to Bernal, based on her work on ice crystals, to explore the x-ray crystallography of proteins, a field that has enormous implications in the discovery of pharmaceutical compounds and the treatment of disease.

I'm kind of glad I stumbled on her. A very interesting scientist...

Well, the piano part is interesting anyway.

Got my vote for Phil in today.

I'm very boring, voted straight Democratic, again and again, and again, and again.

Airborne Microplastic Concentrations in Five Megacities of Northern and Southeast China

The paper to which I'll refer in this post without too much discussion of the implications, which should, in any case, be obvious, is this one: Airborne Microplastic Concentrations in Five Megacities of Northern and Southeast China (Xuan Zhu, Wei Huang, Mingzhu Fang, Zhonglu Liao, Yiqing Wang, Lisha Xu, Qianqian Mu, Chenwei Shi, Changjie Lu, Huanhuan Deng, Randy Dahlgren, and Xu Shang Environmental Science & Technology 2021 55 (19), 12871-12881)

Many people who care about the environment are aware of the problem of microplastics, particularly in bodies of water and in landfills. The effort to deal with plastic waste by recycling has proved to be largely a failure, and these dangerous petroleum and dangerous natural gas products have accumulated on a large scale.

The issue about which I have not thought, but perhaps should have thought, is the problem of airborne microplastics. The cited paper brought this problem to my attention, and I thought I'd briefly refer to it in this space.

From the paper's introduction:

The "million" in this text is probably an error, since this would imply 10^17 tons. On the other hand, 120,000,000 tons seems too low.

For reference, the amount of carbon dioxide dumped by humanity each year while we all wait, decade after decade, for the grand so called "renewable energy" nirvana that did not come, is not here, and won't come, is roughly 35 billion tons per year of dangerous fossil fuel waste, with another 10 billion tons per year deriving from land use changes.

We can take these figures with a grain of salt without detracting from the measurements taken by the authors of the paper.

In addition to ingestion exposure, inhalation may be another important pathway for MP exposure. Increasing evidence shows the widespread occurrence and transport of MPs in the atmosphere, with some studies positing that MP intake via inhalation may exceed ingestion via dietary consumption. (11) Quantifying the exposure intensity of airborne MPs is essential for evaluating human inhalation risk. (12) However, most studies examining airborne MPs are based on passive measurements of atmospheric deposition or accumulation in the surface dust layer. (13,14) MP concentrations measured by active pump sampling accurately reflects the air exposure intensity directly, (15−17) but the data concerning airborne MP concentrations derived from active pump collection are still rare. (12) The paucity of MP concentrations suspended in the atmosphere makes it difficult to accurately assess MP exposure risks to humans, especially since the limited data collected to date using different methodologies range by ∼4 orders of magnitude. (11,12,18) Moreover, the maximum reported exposure concentrations of airborne MPs may significantly underestimate the true exposure intensity. This is mainly due to the difficulty in detecting/enumerating MP particle sizes less than 30–50 μm using current methodologies, whereas the size distribution of airborne MPs may increase significantly at smaller sizes (10 million population) comprising urban agglomerations in northern and southeast China. We also explored potential relationships between airborne MP concentrations and routinely monitored air pollution indicators (e.g., PM2.5, PM10) to determine whether these routinely measured parameters could be used as a proxy for estimating MP concentrations. The relationship of airborne MPs and socioeconomic factors, such as population and GDP, were also investigated as potential covariates to explain spatial patterns in MP concentrations. 
This study informs potential human health risks associated with MP inhalation exposure and explores various factors contributing to differences in airborne MP concentrations and characteristics in densely populated urban centers.

Some graphics from the paper which ought to be self explanatory:

The PVC component is of interest to me, since PVC is a "heavier than water" polymer, the use of which sequesters chlorine, allowing for the industrial accumulation of bases such as hydroxides, which are useful for the capture of carbon dioxide. (The composition of PVC is roughly 57% chlorine by weight.) PVC is often not a single use polymer, and although its production is not currently huge, only a few tens of millions of tons per year, it does have some properties that make it environmentally less odious, and in some settings perhaps even benign or indeed positive.
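The chlorine content follows directly from the PVC repeat unit, -CH2-CHCl-, i.e. C2H3Cl. A small sketch, assuming standard atomic weights (the dictionary and function names are illustrative, not from any library), gives a chlorine mass fraction of about 56.7%:

```python
# Standard atomic weights in g/mol (assumed values, not from the paper).
ATOMIC_WEIGHT = {"C": 12.011, "H": 1.008, "Cl": 35.45}

# Repeat unit of PVC: -CH2-CHCl-, i.e. C2H3Cl.
REPEAT_UNIT = {"C": 2, "H": 3, "Cl": 1}


def mass_fraction(formula: dict[str, int], element: str) -> float:
    """Mass fraction of `element` in a formula given as {element: atom count}."""
    total = sum(ATOMIC_WEIGHT[el] * n for el, n in formula.items())
    return ATOMIC_WEIGHT[element] * formula[element] / total


frac = mass_fraction(REPEAT_UNIT, "Cl")
print(f"Chlorine mass fraction of PVC: {frac:.1%}")
```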

In addition to the relatively larger plastic fibers, which are easy to be observed and therefore get more attention, our results showed that smaller MPs were dominated by nonfiber fragments. The various size and shapes of MPs are expected to strongly influence MP interactions with body tissues/fluids and the ability of the body to eliminate MPs from the respiratory/digestive systems. The polymeric composition of MPs will also affect the fate (i.e., accumulation/degradation) of MPs within the various body tissues.

I hadn't thought much about this issue, but perhaps it should have been obvious.

This is a real problem. I believe that there is an engineering solution to the problem, but nobody, I think, wants to hear me beat my horse.

I wish you a pleasant workweek.

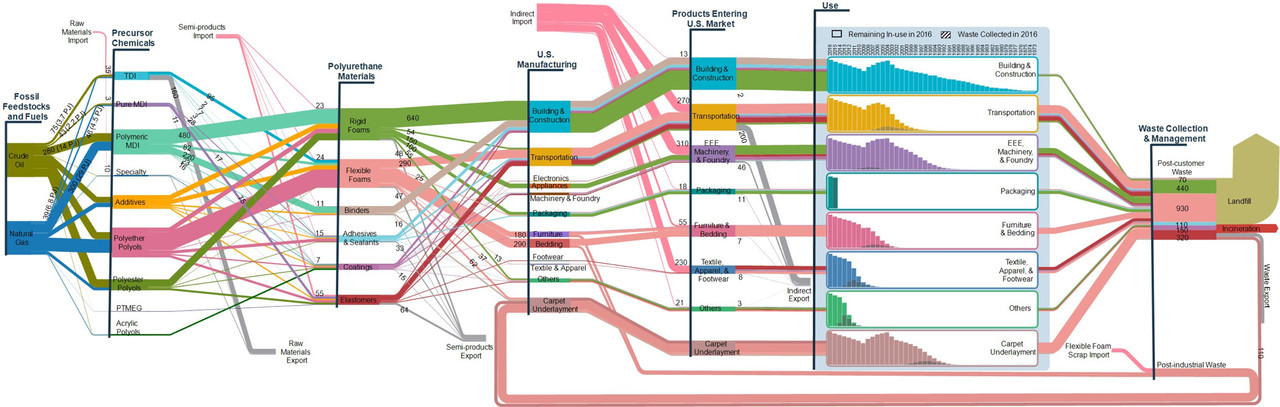

Ultralow Thermal Conductivity, Novel Zintl Antimonides, Thermoelectrics, Nuclear Fuel and Fukushima.

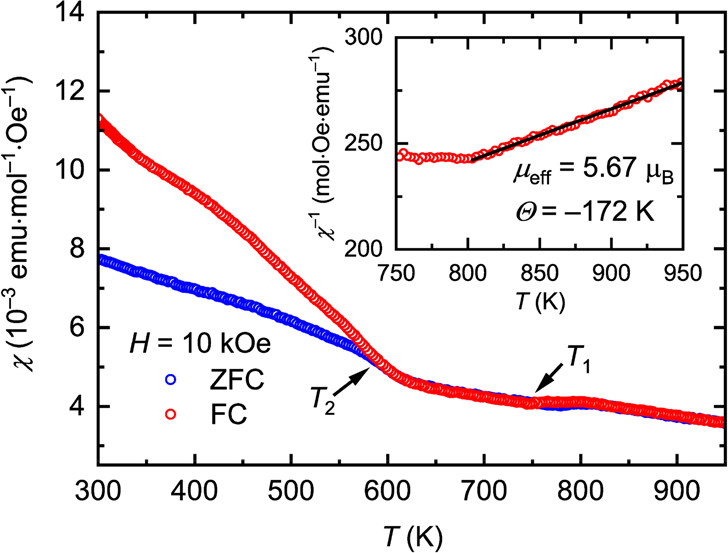

The paper I'll discuss in this post is this one: Ultralow Thermal Conductivity and High Thermopower in a New Family of Zintl Antimonides Ca10MSb9 (M = Ga, In, Mn, Zn) with Complex Structures and Heavy Disorder (Alexander Ovchinnikov, Sevan Chanakian, Alexandra Zevalkink, and Svilen Bobev, Chemistry of Materials 2021 33 (9), 3172-3186).

I first found myself thinking about "Zintl salts" some years ago, when considering interactions between the elements cesium and lead. These elements do not alloy in a strict sense; rather, they form an interesting salt, wherein cesium is oxidized to its common +1 state and lead, a metal, is reduced to a remarkable anion having a cluster structure:

Deltahedral Clusters in Neat Solids: Synthesis and Structure of the Zintl Phase Cs4Pb9 with Discrete Pb9^4- Clusters (Evgeny Todorov and Slavi C. Sevov, Inorganic Chemistry 1998 37 (15), 3889-3891)

A figure from the above:

Thermoelectric devices generate electricity directly from heat; no moving parts are involved. They are extremely reliable devices, as evidenced by, among other devices, the Voyager spacecraft, which are powered by thermoelectric "RTGs" and have been sending signals from deep space for 44 years, nearly half a century. The power source on the Voyager spacecraft derives its heat from the decay of plutonium-238. Similarly powered devices have been used on many space missions, most recently on the Mars rover Perseverance. To continue using these types of devices, the United States has resumed the production of plutonium-238, after its supplies ran out.
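How much of the original plutonium-238 heat remains after 44 years follows from the simple decay law. This sketch assumes the standard Pu-238 half-life of 87.7 years, which is not stated in the text, and tracks only the isotope's heat output; the electrical output of a real RTG falls faster than this, because the thermocouples themselves also degrade.

```python
import math

PU238_HALF_LIFE_Y = 87.7  # years (standard value, assumed here)


def remaining_fraction(t_years: float, half_life_years: float) -> float:
    """Fraction of the original nuclide, and hence of its decay heat, left after t."""
    return math.exp(-math.log(2) * t_years / half_life_years)


frac = remaining_fraction(44.0, PU238_HALF_LIFE_Y)
print(f"Pu-238 heat remaining after 44 years: {frac:.1%}")
```

Roughly 70% of the original decay heat is still being produced after 44 years.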

The root cause of the failure of the nuclear reactors at Fukushima to survive a tsunami better than the surrounding cities (which also did not survive the tsunami, although their failure and the connected deaths excited little interest compared to the failure of the reactors) was the fact that diesel generators won't operate under water. When the reactor is not producing electricity, since armatures and steam turbines won't turn under water, backup power is necessary, and on almost every nuclear reactor in the world, this backup power is supplied by diesel generators. The coolant pumps for the reactors, which do operate under water, thus had no source of power after the reactors shut down, with the result that the decay heat in the fuel caused temperatures to rise to a point at which the following reaction takes place: Zr + 2H2O → ZrO2 + 2H2. (The fuel rod cladding is made of a zirconium alloy called "Zircaloy-4.") The generated hydrogen mixed with air and was ignited by the heat, causing an explosion.
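To put the zirconium-steam reaction in perspective, its stoichiometry gives the mass of hydrogen released per kilogram of zirconium oxidized. This is a rough sketch that treats the cladding as pure zirconium (Zircaloy is mostly, but not entirely, zirconium) and assumes complete oxidation, so it is an upper bound; it yields roughly 44 g of H2 per kg of Zr.

```python
# Molar masses in g/mol (standard values, assumed here).
M_ZR = 91.224
M_H2 = 2.016


def hydrogen_per_kg_zr(kg_zr: float) -> float:
    """kg of H2 from fully oxidizing kg_zr of zirconium: Zr + 2 H2O -> ZrO2 + 2 H2."""
    mol_zr = kg_zr * 1000.0 / M_ZR
    mol_h2 = 2.0 * mol_zr  # two moles of H2 per mole of Zr
    return mol_h2 * M_H2 / 1000.0


print(f"{hydrogen_per_kg_zr(1.0) * 1000:.1f} g H2 per kg Zr")
```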

Like most thermal devices designed to produce electricity, a thermoelectric device operates across a temperature gradient, and its thermodynamic efficiency depends on the difference in temperature between the hot and the cool regions. The greater that difference, the greater the thermal efficiency. This is why, when one looks at a picture of an RTG on a spacecraft, the device always has cooling fins; these are designed, even in deep space, to radiate heat.

It follows that an RTG placed under water, because of water's high thermal conductivity as well as its high heat capacity, can be expected to function at higher efficiency than when cooled by air. This effect is even more pronounced with flowing water.

Ironically, one concern after the reactors were destroyed was the state of the used fuel in "cooling ponds," because cooling water was no longer being circulated. The fuel remained intact (as it was designed to do) in borated water, but it was a "concern," and certainly journalists, whose income is proportional to the amount of hysteria they can generate (for example, "her emails"), prominently hyped this "concern." The reason is that used nuclear fuel, even after it is removed from the reactor, is still generating significant heat in exactly the same way that an RTG in space does, from nuclear decay (as opposed to critical nuclear fission).

When devices fail, the mode of failure is instructive about how to make them less prone to repeat failure. One approach to preventing another event like Fukushima would be to do away with diesel engines for backup power and replace them with RTGs powered by decay heat, which is readily available at nuclear power plants. It's called, to use the idiomatic cliche, "killing two birds with one stone." (The electronic switching devices also failed at Fukushima, so making these immune to submersion would also be a good idea.)

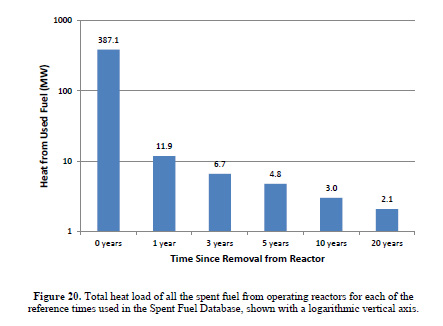

The heat load associated with used nuclear fuel - it changes rapidly with time - is covered in a marvelous Master's thesis (under her birth name) by Dr. Kristina Diane Yancey Spencer, now at INL, written when she was a graduate student in the nuclear engineering department at Texas A&M: Nationwide Used Fuel Inventory Analysis.

(The power levels here refer to the total amount of fuel removed in a given year from all US reactors, and thus this heat is not located in a single place.)

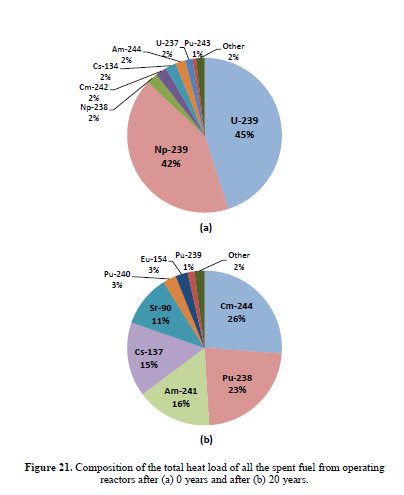

The next figure is more interesting, and I will discuss it below:

(She also published the same figures in a paper with her advisor:

Quantification of U.S. spent fuel inventories in nuclear waste management (Kristina Yancey, Pavel V. Tsvetkov, Annals of Nuclear Energy 72 (2014) 277–285)

I don't use the term "nuclear waste," but prefer the term "nuclear resources," since the plutonium in this so called "waste" is critical, in my mind, to saving the world, and sometimes, perhaps more often, I also use the term "used nuclear fuel," but OK, let me not quibble with Dr. Yancey Spencer, a scientist I admire. The point is that language matters, even when stating facts that matter.)

The heat load at time T=0 is not really recoverable after a reactor shuts down, since the largest portion of it comes from the decay of U-239, which has a half-life of 23.45 minutes. The activity of its decay daughter, Np-239, which has a half-life of 56.544 hours, reaches its maximum after 169.5 minutes (2.82 hours), at which point the Np-239 and U-239 activities are equal and the ratio of Np-239 atoms to U-239 atoms is 144.7. This decay heat, of course, is part of the heat generated during a reactor's operation, but it seems unlikely that it would be possible to capture it in a thermoelectric device after shutdown, and in any case, the heat output would rapidly decline. Even though I personally have spent a lot of time contemplating fast separations of fresh used nuclear fuel while it's still very hot, even I can't imagine recovering this energy. The heat load a couple of weeks out is far more interesting, but let's leave that discussion for another time.
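The 169.5 minute and 144.7 figures follow from the two-member Bateman equations for a parent (U-239) decaying into a daughter (Np-239). This sketch assumes pure U-239 at shutdown and ignores any Np-239 already present, which a real core would contain. At the time of maximum daughter activity the two activities are equal, so the atom ratio equals the ratio of the decay constants.

```python
import math

T_HALF_U239_MIN = 23.45            # minutes
T_HALF_NP239_MIN = 56.544 * 60.0   # 56.544 hours, in minutes

lam_u = math.log(2) / T_HALF_U239_MIN     # parent decay constant, 1/min
lam_np = math.log(2) / T_HALF_NP239_MIN   # daughter decay constant, 1/min

# Time of maximum daughter activity, starting from pure parent at t = 0.
t_max = math.log(lam_u / lam_np) / (lam_u - lam_np)


def atoms(t: float, n0: float = 1.0) -> tuple[float, float]:
    """Bateman solution: U-239 and Np-239 atoms at time t (minutes)."""
    n_u = n0 * math.exp(-lam_u * t)
    n_np = n0 * lam_u / (lam_np - lam_u) * (math.exp(-lam_u * t) - math.exp(-lam_np * t))
    return n_u, n_np


n_u, n_np = atoms(t_max)
print(f"t_max = {t_max:.1f} min ({t_max / 60:.2f} h)")
print(f"Np-239/U-239 atom ratio at t_max = {n_np / n_u:.1f}")
print(f"activity ratio at t_max = {lam_np * n_np / (lam_u * n_u):.3f}")
```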

What I'd like to focus on is figure 21b, the heat load 20 years out, particularly the five isotopes providing the majority of the heat load: Cm-244, Pu-238, Am-241, Cs-137 and Sr-90. Two of these have been incorporated into thermoelectric devices: Pu-238, for space missions and historically for pacemaker batteries implanted in chests, and Sr-90, which powered some Arctic lighthouses in the former Soviet Union. In a continuous closed fuel cycle for power production (and/or high temperature thermal processing), the Pu-238 would be carried along with the other plutonium isotopes and end up in the next generation fuel, while making that plutonium less usable, if usable at all, for weapons production.

In fast reactors - the kind I favor - Am-241, which has sometimes been suggested for use in thermoelectric space devices, can serve as an excellent nuclear fuel. It has a significantly lower density than plutonium, which increases the heat exchange area and raises the critical mass. (I discussed the critical mass of americium isotopes here: Critical Masses of the Three Accessible Americium Isotopes.) During operation, it generates two plutonium isotopes of great value for non-proliferation of nuclear weapons, Pu-238 (via Cm-242) and Pu-242. Although, again, it can be used in thermoelectric devices, and has been evaluated as such by the ESA for space missions designed to last even longer than the Voyager missions, its heat output is significantly lower than that of Pu-238: 0.115 W/g vs. 0.568 W/g.

I have convinced myself - with considerable enthusiasm - that the chemical and radiation value of cesium-137 for solving otherwise intractable environmental problems is very high, in particular for dealing with rare but potent climate change gases in the atmosphere and, albeit to a limited extent, for extracting carbon dioxide from the air. This is a conversation for another time, but it would be a waste of perfectly good Cs-137 to simply limit it to thermoelectric devices.

Although strontium-90 has historically been utilized in thermoelectric devices, it also suggests uses other than that role because of some remarkable nuclear, metallurgical, and chemical properties.

This leaves Cm-244 as a potential thermoelectric fuel to displace diesel backup generators at nuclear power plants. The half-life of Cm-244 is 18.11 years, and it decays to the useful non-proliferation plutonium isotope Pu-240. Its thermal output, which can be compared to those of Pu-238 and Am-241 described above, is higher, 2.83 W/g.
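The specific powers quoted for Pu-238, Am-241 and Cm-244 can be reproduced from each nuclide's half-life and alpha-decay energy. In this sketch the molar masses, the Pu-238 and Am-241 half-lives (87.7 and 432.6 years) and the Q values are standard nuclear-data figures I have supplied, not numbers from the text; spontaneous fission and daughter decays are ignored, and all decay energy is assumed to be deposited as heat.

```python
import math

AVOGADRO = 6.02214076e23      # 1/mol
MEV_TO_J = 1.602176634e-13    # J per MeV
YEAR_S = 365.25 * 24 * 3600   # seconds per Julian year

# nuclide: (half-life in years, molar mass in g/mol, alpha-decay Q value in MeV)
# Assumed standard values; only the Cm-244 half-life appears in the text.
NUCLIDES = {
    "Pu-238": (87.7, 238.0496, 5.593),
    "Am-241": (432.6, 241.0568, 5.638),
    "Cm-244": (18.11, 244.0627, 5.902),
}


def specific_power_w_per_g(half_life_y: float, molar_mass: float, q_mev: float) -> float:
    """Decay heat per gram, assuming every decay deposits its full Q value as heat."""
    decay_const = math.log(2) / (half_life_y * YEAR_S)   # 1/s
    atoms_per_gram = AVOGADRO / molar_mass
    activity = decay_const * atoms_per_gram              # decays per second per gram
    return activity * q_mev * MEV_TO_J                   # W/g


for name, params in NUCLIDES.items():
    print(f"{name}: {specific_power_w_per_g(*params):.3f} W/g")
```

The three results land at about 0.568, 0.115 and 2.83 W/g, in line with the figures above.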

Using Dr. Yancey Spencer's Master's thesis above, one can estimate how much Cm-244 is available from the heat load described, its thermal output, and the simple radioactive decay law. It is important to note at this point that Dr. Yancey Spencer's estimates produced during her graduate school years are just that, estimates, derived from in silico calculations using the ORIGEN nuclear codes, although she provides an excellent analysis of the common commercial nuclear fuel assemblies, reactor types, and enrichments. Nevertheless, these "seat of the pants" calculations definitely have use. Using her data, I calculate that in a given year, freshly removed used nuclear fuel contains about 1.6 tons of Cm-244, albeit in extremely dilute form, and, again using back calculations, that an inventory of used nuclear fuel going back twenty years will contain about 23.5 tons, accounting for annual decay. The heat output of this inventory is 66.4 megawatts thermal.
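The twenty-year inventory is a sum of annual discharges, each reduced by Cm-244 decay for the years it has been sitting. This sketch assumes equal annual discharges, metric tons, and one-year bins in which the newest discharge has not yet decayed; the function name is my own. With 1.6 t per year it gives about 22.8 t and roughly 64.5 MW thermal at 2.83 W/g.

```python
import math

CM244_HALF_LIFE_Y = 18.11
SPECIFIC_POWER_W_PER_G = 2.83  # Cm-244, from the text


def inventory_tons(annual_tons: float, years: int) -> float:
    """Cm-244 remaining from `years` equal annual discharges (ages 0 .. years-1)."""
    decay_per_year = math.exp(-math.log(2) / CM244_HALF_LIFE_Y)
    return sum(annual_tons * decay_per_year**age for age in range(years))


inv = inventory_tons(1.6, 20)
# tons -> grams (x 1e6), then W -> MW (/ 1e6)
heat_mw = inv * 1e6 * SPECIFIC_POWER_W_PER_G / 1e6
print(f"inventory ~ {inv:.1f} t, heat ~ {heat_mw:.1f} MW(th)")
```

The total is sensitive to rounding of the annual figure: an annual discharge of 1.65 t gives 23.5 t.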

However, the thermodynamic efficiency of thermoelectric devices can be rather low, even lower than the poor energy efficiency of solar cells, where people get excited about energy efficiencies greater than 20%.

There's a very nice presentation, sponsored by NASA's Jet Propulsion Laboratory, from a post-doc symposium held at Caltech that gives an overview of thermoelectric materials:

Overview of Radioisotope Thermoelectric Generators: Theory, Materials and New Technology.

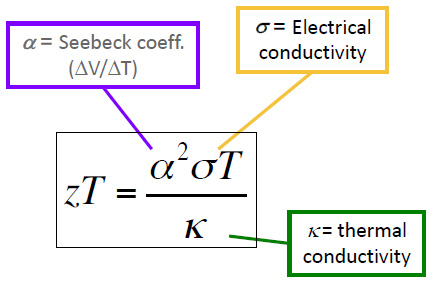

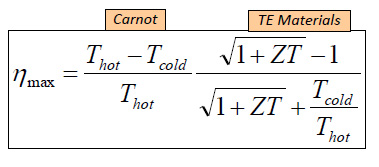

For convenience, I'll post a few graphics from that presentation here. The first is the equation for a parameter known as "ZT," which is actually a single variable, and is not a product of two variables:

It is known as the "figure of merit" for thermoelectric devices. The figure of merit of a thermoelectric device relates to its thermal efficiency according to the following equation, which includes both Carnot terms (as in a mechanical heat engine), related to the heat source and the heat sink (that is, ambient temperature), and a term related to the value of ZT:
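For readers who cannot view the graphics, the standard textbook forms of these two relations are given below; I am assuming the presentation uses the same conventions. Here S is the Seebeck coefficient, σ the electrical conductivity, κ the thermal conductivity, T the absolute temperature, and T_h and T_c the hot-side and cold-side temperatures. The first factor of the efficiency is the Carnot efficiency, and ZT in the second factor is taken at the mean temperature.

```latex
ZT = \frac{S^{2}\sigma}{\kappa}\,T,
\qquad
\eta_{\max} = \frac{T_h - T_c}{T_h}\cdot
\frac{\sqrt{1 + Z\bar{T}} - 1}{\sqrt{1 + Z\bar{T}} + T_c/T_h},
\qquad
\bar{T} = \frac{T_h + T_c}{2}
```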

Enhancing the value of ZT is the goal of much research. Returning to the equation for it, we see that its value climbs as the thermal conductivity decreases. That is why the ultralow thermal conductivity discussed in the paper referenced at the outset of this post matters, and the reason that "Zintl antimonides" are worthy of consideration.

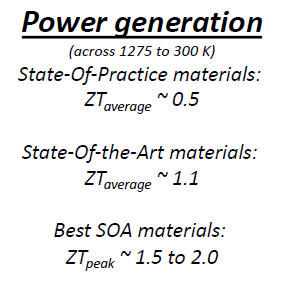

The NASA people provide some insight into where we are with advances, in terms of what is commercially available and what has been developed on a lab scale...

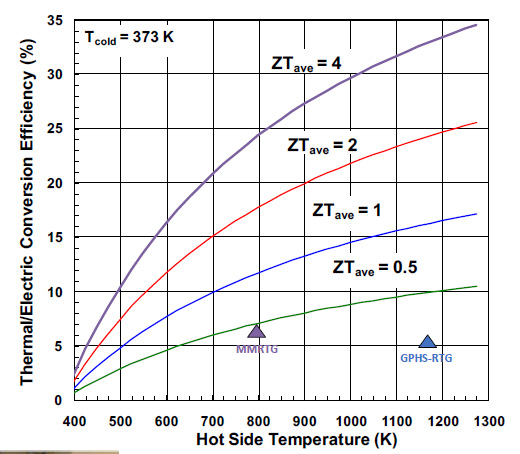

...and then produce this nice graphic showing how thermal efficiency would vary for different ZT values over temperature ranges:

Note that the "cold" temperature, the heat sink, is given in this graphic as 373 K on the Kelvin (thermodynamic) temperature scale. This translates to 100 degrees Celsius, the boiling point of water. I'm not sure why this value was chosen, but can speculate that the decay heat of plutonium-238 on spacecraft is required not only to generate electricity, but also to heat the components of the instruments via conductive materials, thus requiring a higher "cold" temperature. The "MMRTG" is the device that is currently powering the Curiosity and Perseverance rovers now investigating the planet Mars. Note that the MMRTG has rather low thermal efficiency, around 7%. There may be many reasons that NASA chose the thermoelectric material for the MMRTG, such as considerations of weight, longevity, resistance to thermal stresses, brittleness, etc., conditions which may not, and probably wouldn't, apply to Earth-bound devices.

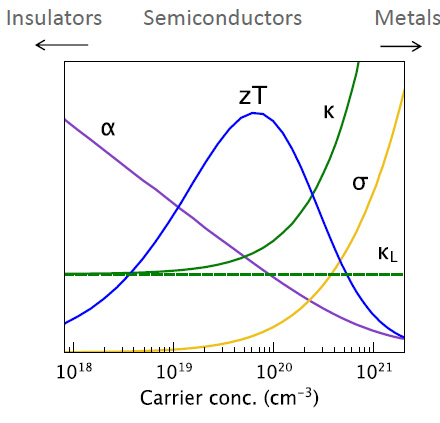

The types of materials that maximize ZT are semiconductors, and the "hole carriers" referred to in the following graphic are a semiconductor concept: "holes" are the positive charge carriers that dominate in "p type" materials, just as electrons dominate in "n type" materials:

It is worth noting that although the solar industry has proved, is proving, and will always prove useless in addressing climate change, the research conducted to develop solar cells is not useless. There are some rather exotic hole carriers that have been developed for that industry, for example, the interesting organic molecule spiro-OMeTAD. The synthetic precursors of this molecule are petroleum based, but in a nuclear powered world, where chemistry would be dominated by the use of syngas, anything now obtained from petroleum will be accessible from the reduction of carbon dioxide.

I'd like at this point to return to what happened at Fukushima and Dr. Yancey Spencer's graphics above. The fuel rods in the cooling ponds that were of most concern in the hysterical days after the natural disaster which destroyed buildings, homes, cars and human lives - not that we, as a whole, give a shit about the roughly 20,000 people who drowned or were crushed by collapsing buildings, but would rather prattle on that someone might die from radiation exposure someday - were those which were the hottest, specifically those which had most recently been removed from the reactors. As shown in the bar graph, the first reproduced here from her thesis, the one with the logarithmic y-axis, the heat load falls rather rapidly in the first year of cooling, by more than an order of magnitude.

I'd like to turn to two heat producing nuclides near and dear to my heart shown in her first pie chart, the one for year zero, Cm-242 and Cs-134.

The latter is not a major fission product because of the naturally occurring isotope of xenon, Xe-134, which occurs earlier in the beta decay chain among the fission products, all of which are neutron rich; because Xe-134 is stable, the mass-134 chain stops there and does not reach Cs-134. According to the nuclear stability rules, Xe-134 should be radioactive, since its isobar Ba-134 is stable and lies lower in energy, but if it is radioactive, the half-life is so long that its decay has never been detected. In a nuclear reactor, the Cs-134 that delivers considerable heat in freshly removed used nuclear fuel is formed by neutron capture in the only stable isotope of cesium, Cs-133. (I have convinced myself that in certain kinds of fluid-phased reactors, the isolation of nearly pure non-radioactive Cs-133 is possible.)

According to Dr. Yancey Spencer's ORIGEN based in silico calculations, hundreds of kilos of Cs-134 are generated in a year, and the sum of power production at specific T=0 time points is on the order of 8 MW. Half a year later, the power output is just under 7 MW.

Cm-242 has a similar power output at T = 0, around 8 MW, although much less of it is present, around 10 kg; after half a year, however, its power has fallen to under 4 MW.
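
The half-year figures follow from simple exponential decay. A minimal sketch, assuming rounded half-lives of about 2.07 years for Cs-134 and about 163 days for Cm-242, taking the 8 MW starting power quoted for each, and ignoring any in-growth from parent nuclides:

```python
# Rounded half-lives, in years.
HALF_LIFE_YEARS = {
    "Cs-134": 2.0652,
    "Cm-242": 162.8 / 365.25,  # 162.8 days
}


def power_after(p0_mw: float, half_life_years: float, t_years: float) -> float:
    """Decay power of one nuclide with no ongoing production: P0 * 2^(-t / T_half)."""
    return p0_mw * 2.0 ** (-t_years / half_life_years)


P0_MW = 8.0  # approximate T = 0 power for each nuclide, as quoted in the text
results = {name: power_after(P0_MW, hl, 0.5) for name, hl in HALF_LIFE_YEARS.items()}

for name, p in results.items():
    print(f"{name}: {p:.2f} MW after half a year")
```

This gives roughly 6.8 MW for Cs-134 and 3.7 MW for Cm-242, in line with the "just under 7 MW" and "under 4 MW" figures above.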

In addition, there are many other fission products with relatively short half-lives, on the order of months, that put out significant heat (Ru-106, Ce-144, etc.) and that rapidly decay into valuable non-radioactive materials.

Using the formula provided above for thermal efficiency, a "state of the art" thermoelectric material with a ZT of 1.1, a temperature of 800K for the heat output of the decaying nuclides, and an ambient temperature of 273K, the maximum thermal efficiency works out to roughly 16.5%. (Note that I’m being a little disingenuous here, since ZT varies with temperature; thermal conductivity and electrical conductivity are generally functions of temperature.)

The temperature at which zirconium reacts with steam to generate hydrogen, which is what took place in the Fukushima reactors, is on the order of 1500 °C or higher, and it follows that these temperatures were reached in the cores of the Fukushima reactors when the heat sink failed, despite the insertion of the control rods to shut down the chain reaction. These temperatures almost certainly resulted, as we've seen, from the decay of short half-lived nuclei like U-239 and, more importantly, Np-239 and Np-238, as well as fission products with half-lives from a few weeks or months to a few years.

With all this in mind, and given the concern that the fuel rods in the cooling ponds might similarly fail - they didn't - it is reasonable to assume that the cooling fuel may have had a considerable thermal output, quite possibly significantly more than 10 MW.

A thought experiment: Suppose that, instead of putting the used nuclear fuel in a cooling pond, where its energy was wasted, the fuel rods were placed in a molten salt bath surrounded by a thermoelectric material with, as above, a ZT value of 1.1 and a decay heat temperature of 800K. Fresh used nuclear fuel with a thermal output of 10 MW would then provide roughly 1.6 MW of electrical power. I am not familiar with the detailed design of the Fukushima BWRs, other than knowing that the diesel generators were located in the basements of the reactor buildings and thus were swamped. For other reactors, however, these generators seem to be on the order of 1500 kW to 3000 kW, that is, 1.5 MW to 3 MW. The power supply would be continuous and uninterrupted, since the device would have no moving parts.
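
The efficiency and electrical output in the paragraphs above can be worked through with the standard expression for the maximum efficiency of a thermoelectric generator, η = (1 - Tc/Th)(√(1+ZT) - 1)/(√(1+ZT) + Tc/Th), with ZT treated as a single averaged value. This sketch assumes that this is the formula referred to above and ignores the temperature dependence of ZT noted in the text; real devices fall below this ideal.

```python
import math


def teg_max_efficiency(zt: float, t_hot_k: float, t_cold_k: float) -> float:
    """Maximum thermoelectric conversion efficiency for a constant, averaged ZT.

    eta = (1 - Tc/Th) * (sqrt(1 + ZT) - 1) / (sqrt(1 + ZT) + Tc/Th)
    """
    carnot = 1.0 - t_cold_k / t_hot_k
    m = math.sqrt(1.0 + zt)
    return carnot * (m - 1.0) / (m + t_cold_k / t_hot_k)


eta = teg_max_efficiency(zt=1.1, t_hot_k=800.0, t_cold_k=273.0)
thermal_mw = 10.0  # decay heat assumed in the thought experiment

print(f"efficiency: {eta:.1%}")
print(f"electrical output from {thermal_mw} MW thermal: {thermal_mw * eta:.2f} MW")
```

With ZT = 1.1, Th = 800 K and Tc = 273 K the expression gives about 16.5%, or roughly 1.65 MW of electricity from 10 MW of decay heat. The Carnot factor alone caps the result at about 66% even for an ideal material.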

Indeed, in a molten salt bath of certain types - there are a huge number of possibilities beyond the famous, and to my mind dubiously desirable, FLIBE - the fuel rods could be anodically dissolved to recover their contents. Some of the radioactive materials would be more useful in settings other than merely providing heat. Under these conditions, either direct separations or separations using aliquots would allow particular radioactive elements to be isolated for other uses.

For instance, two of the radioactive isotopes of cesium, Cs-134, discussed above, and the better-known Cs-137, are associated with powerful gamma rays.

Combustion power plants using dangerous fossil fuels, biomass or trash as an energy source take in air and discharge it heavily dirtied, adding to the climate gases already there from earlier combustion events. I have convinced myself, and hopefully my son, that it is possible to build a nuclear-fueled power plant that takes in dirty air and discharges it cleaned, with the powerful warming gases CO2, methane, N2O, hydrofluorocarbons, residual CFCs, and indeed sulfur hexafluoride all removed, as well as aerosol particulates, aerosol microplastics, sulfates and sulfites. Powerful gamma radiation, such as that associated with Cs-137 and Cs-134, might well contribute to such a system, but that's for discussion elsewhere, except to say that there is an impetus for doing such separations. The high energy-to-mass density of nuclear fuels, coupled with Bateman equilibria that limit how much of a radioisotope can accumulate before it decays as fast as it forms, means that there will never be vast amounts of these isotopes; nevertheless, in continuous flow processes, this gamma radiation might make a significant impact.
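
The limit set by Bateman equilibrium can be seen in the simplest case: a single nuclide produced at a constant rate R and decaying with constant λ, for which N(t) = (R/λ)(1 - e^(-λt)). This sketch assumes constant production, no initial inventory, no parent chain and no removal by neutron capture; Cs-134 and its rounded half-life serve only as an example.

```python
import math


def inventory_fraction_of_saturation(half_life_years: float, t_years: float) -> float:
    """Fraction of the equilibrium inventory R / lambda reached after t years
    of constant production starting from zero: 1 - exp(-lambda * t).

    Under constant production rate R, N(t) = (R / lambda) * (1 - exp(-lambda * t)),
    so the inventory can never exceed R / lambda, where decay balances production.
    """
    lam = math.log(2) / half_life_years
    return 1.0 - math.exp(-lam * t_years)


CS134_HALF_LIFE_Y = 2.0652  # rounded half-life, used only as an example

for t in (1, 2, 5, 10, 20):
    frac = inventory_fraction_of_saturation(CS134_HALF_LIFE_Y, t)
    print(f"after {t:>2} years: {frac:.1%} of the equilibrium inventory")
```

Whatever the production rate, the inventory never exceeds R/λ; after about ten half-lives it is within a tenth of a percent of that ceiling.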

The bulk of used nuclear fuel is unreacted uranium. Once-through or multiply recycled uranium is somewhat more valuable, at least to my mind, than natural uranium, as it contains the U-236 isotope, which is not found in significant amounts in ores. This isotope can be thought of as an inert uranium isotope, since it is not appreciably fissionable except in the epithermal region, and even there only marginally so; it thus has intrinsic nonproliferation value. Reuse of recycled uranium leads to the accumulation of the valuable isotope neptunium-237. Removal of this uranium for use in breeder reactors will result in the concentration of heat-producing isotopes and elements like curium (which is a minor impurity in used nuclear fuels, albeit responsible for much of the generated heat). The point is this: over many decades of operations at a nuclear power plant, considerable amounts of heat-generating isotopes not useful in other settings might accumulate and could power an array of electricity-producing devices that lack moving parts.

When aircraft fail, or for that matter, when automotive systems prove unsafe, neither aircraft nor automobiles are abandoned as technologies. The flaws are engineered away, or procedures are put in place to prevent recurrence. The nuclear industry, while no more risk free than any other industry, is spectacularly safe when compared to the very dangerous fossil fuel industry, which kills people in vast numbers when it operates normally, never mind in failure modes.

It is possible, I suggest, to engineer away events like Fukushima. It is not, however, possible to engineer away the destruction of cities by seawater, but we can minimize that risk to some extent by addressing climate change.

And let's be clear on something. We are decades into cheering by anti-nukes and others for the theoretical value of so-called "renewable energy." It did not work. It is not working. It will not work. And by "working" I mean working to address climate change. The theory was not borne out by experiment, and, as this is the Science forum, it bears repeating: if a theory does not agree with experiment, it is not the experiment that is discarded; it is the theory.

The experimental results of the expensive "renewable energy will save us" theory are in: The use of dangerous fossil fuels is rising, not falling. The reliance on dangerous fossil fuels is not merely killing people continuously. It’s killing the entire planet.

Thus, beyond engineering away the comparatively minor risks of events like Fukushima, there is a strong impetus for the development of thermoelectric devices, and it is therefore important to study materials with extremely low thermal conductivity. Such materials matter for other reasons too, for example in devices that can tolerate exposure to high temperatures and so increase thermodynamic efficiency, reducing the cost of energy and thereby extending its benefits to those who now lack them.

From the introductory text of the paper cited at the outset of this post:

A special class of materials that has been actively explored as promising candidates for thermoelectric applications are Zintl phases—valence-precise intermetallic compounds with polar chemical bonding.(3−6) The charge balance in these materials is achieved by electron redistribution between different parts of the crystal structure so that the constituting atoms adopt a stable closed-shell electron configuration. Typically, such compounds demonstrate semiconducting properties with narrow electronic bandgaps and tunable electrical resistivity. At the same time, high structural complexity enables low phonon group velocities and efficient phonon scattering and hence low thermal conductivity.(3−11)

Most Zintl phases of relevance for thermoelectric research belong to the chemical families of tetrelides and pnictides, i.e., compounds with the elements of groups 14 and 15, respectively. One of the highest-performing materials within the pnictide family is Yb14MnSb11, with the maximum zT value approaching 1.2 at T ≥ 1000 K...

Yb, ytterbium, is not what we call an "earth abundant element"; it is a minor constituent of lanthanide ores. It is not a fission product. Antimony, Sb, is also not an "earth abundant element," but it is a fission product. In the fast fission of plutonium, the approach to plutonium utilization I favor, about 0.25% of fissions produce a mass number associated with antimony. Antimony in fresh used nuclear fuel will contain the radioactive isotope Sb-125, but the half-life of this isotope is relatively short, 2.76 years, whereupon it decays into a stable isotope of tellurium, a similarly valuable element, which is also an element of concern and which is useful in some thermoelectric settings. Thus aged used nuclear fuel, accumulated in the 1970s and 1980s, is a potential source of both of these elements without requiring mining.
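
The point about aged fuel rests on simple decay arithmetic. A minimal sketch, using the 2.76-year half-life quoted above and hypothetical cooling times for fuel discharged roughly between 1975 and 1985, measured to 2021; it tracks only Sb-125, not the stable antimony isotopes also present.

```python
def fraction_remaining(t_years: float, half_life_years: float) -> float:
    """Fraction of a radionuclide left after t years: 2^(-t / T_half)."""
    return 2.0 ** (-t_years / half_life_years)


SB125_HALF_LIFE_Y = 2.76  # value quoted in the text

# Hypothetical cooling times for fuel discharged roughly 1975-1985, measured to 2021.
for cooling_years in (36, 41, 46):
    left = fraction_remaining(cooling_years, SB125_HALF_LIFE_Y)
    print(
        f"{cooling_years} years: Sb-125 left = {left:.1e}, "
        f"decayed to Te-125 = {1 - left:.5f}"
    )
```

After more than thirteen half-lives, well under a tenth of a percent of the original Sb-125 survives.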

The paper's introduction continues later:

Neither gallium (Ga) nor indium (In) is an "earth abundant element." Indium is a fission product, but only one of its two natural isotopes is present, the radioactive one, In-115, owing to the extremely long half-life of the radioactive isotope cadmium-113, which effectively blocks the formation of In-113. Indium is one of the rare elements whose radioactive isotope dominates its natural isotopic composition. The indium in your touch screens, including that in your cell phone, is radioactive, but the half-life is so long that it doesn't matter. Thus it is possible to isolate indium from used nuclear fuel. Its concentration there is very low, about 0.1%, but such concentrations are found in the ores used to provide this element for touch screens and for unsustainable solar cells of the CIGS type.

However, Mn and Zn are relatively abundant, although there is concern about the supply of minable zinc ores. Zinc may ultimately have to be obtained from very dilute streams, requiring significant energy inputs.

The low (and here desirable) thermal conductivity is a function of relative structural disorder, which impedes heat transport through "phonon scattering"; phonons are the quantized lattice vibrations that carry heat and sound in solids.
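
The link the quoted passage draws between low phonon group velocities, efficient phonon scattering, and low thermal conductivity can be illustrated with the simple kinetic-theory estimate κ_L ≈ (1/3) C v ℓ, where C is the volumetric heat capacity, v the phonon group velocity and ℓ the phonon mean free path. This is a textbook approximation swapped in for illustration, not the paper's analysis, and all the numbers below are hypothetical.

```python
def lattice_thermal_conductivity(c_vol: float, v_group: float, mean_free_path: float) -> float:
    """Kinetic-theory estimate kappa_L = (1/3) * C * v * l, in W/(m K).

    c_vol: volumetric heat capacity, J/(m^3 K)
    v_group: average phonon group velocity, m/s
    mean_free_path: average phonon mean free path, m
    """
    return c_vol * v_group * mean_free_path / 3.0


# Hypothetical, order-of-magnitude inputs chosen only for illustration.
C_VOL = 1.5e6      # J/(m^3 K)
V_SIMPLE = 3000.0  # m/s
L_SIMPLE = 5e-9    # m

kappa_simple = lattice_thermal_conductivity(C_VOL, V_SIMPLE, L_SIMPLE)
# A structurally complex crystal: slower phonons and stronger scattering.
kappa_complex = lattice_thermal_conductivity(C_VOL, V_SIMPLE / 2, L_SIMPLE / 2)

print(f"simple:  {kappa_simple:.2f} W/(m K)")
print(f"complex: {kappa_complex:.2f} W/(m K)")
print(f"ratio:   {kappa_complex / kappa_simple:.2f}")
```

Halving both the group velocity and the mean free path cuts the estimate to a quarter, which is the qualitative mechanism the quoted passage attributes to structural complexity.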

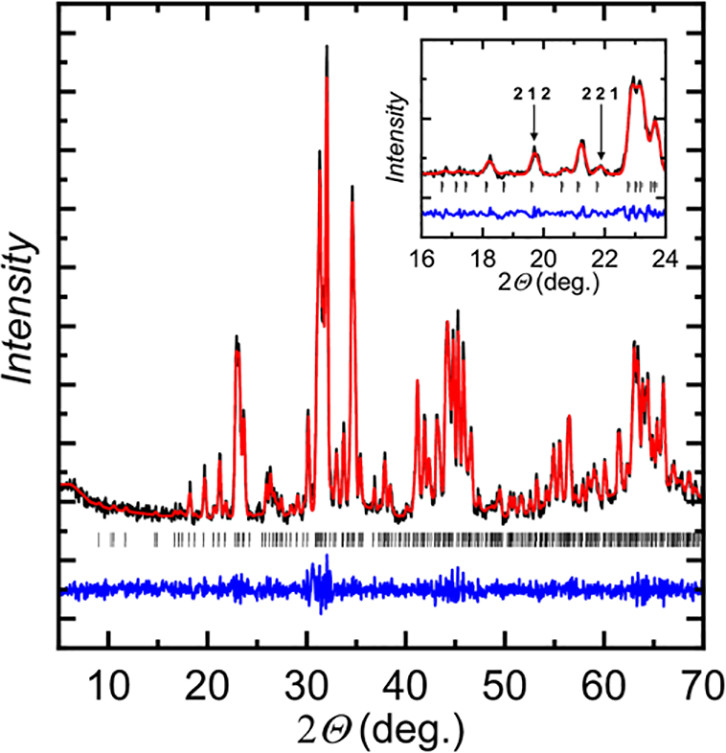

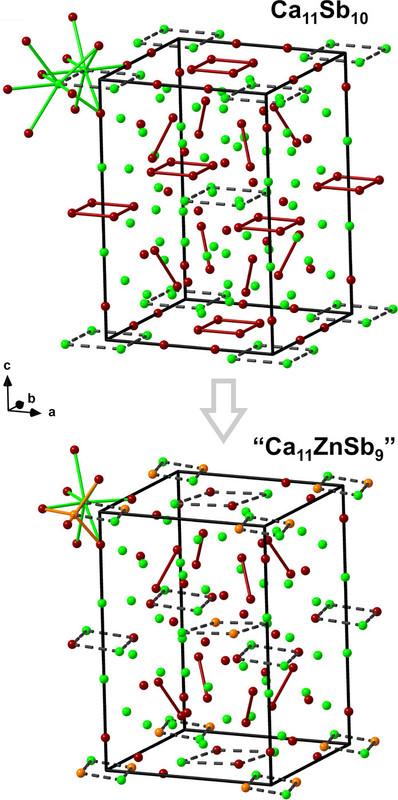

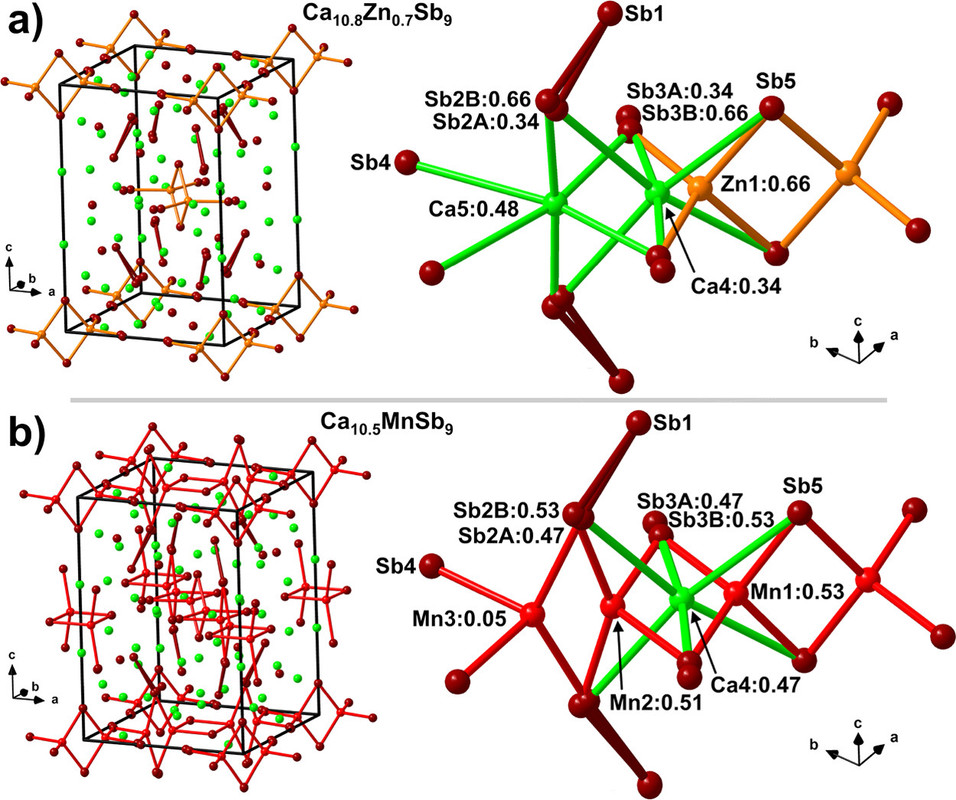

Some pictures from the text:

The caption:

The caption:

The caption:

The caption:

The caption:

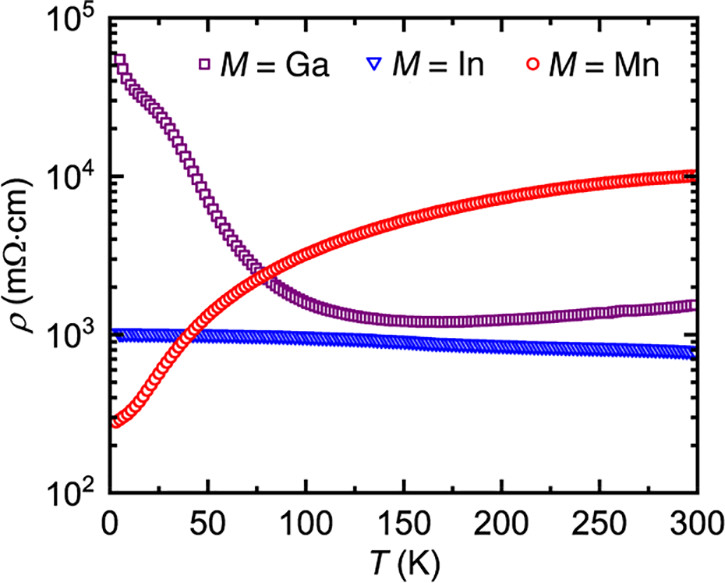

In this case, the earth abundant elements Mn and Zn perform better than the less available Ga and In, but this does not necessarily carry through to thermoelectric performance.


The actual performance of these materials as thermoelectric materials is nothing about which to write home, but, this said, the purpose of this investigation is not to develop a definitive material but rather to suggest a path to optimization. The development of nanostructured materials, perhaps including "hole carriers," may add value.

As for the performance of the investigated materials as thermoelectric materials, again, nothing about which to write home, there's this graphic:


This is indeed, worthy research. Almost every issue of the journal Chemistry of Materials includes a few papers discussing thermoelectric devices, although I have been struggling to keep up with this publication in recent weeks.

This same issue, issue 9 of this year, includes another paper discussing the theory of thermoelectric devices, interestingly including those dependent largely, if not entirely, on earth abundant elements:

Key Role of d0 and d10 Cations for the Design of Semiconducting Colusites: Large Thermoelectric ZT in Cu26Ti2Sb6S32 Compounds (Takashi Hagiwara, Koichiro Suekuni, Pierric Lemoine, Andrew R. Supka, Raju Chetty, Emmanuel Guilmeau, Bernard Raveau, Marco Fornari, Michihiro Ohta, Rabih Al Rahal Al Orabi, Hikaru Saito, Katsuaki Hashikuni, and Michitaka Ohtaki Chemistry of Materials 2021 33 (9), 3449-3456)

This paper describes materials with a ZT as high as 0.9, albeit in copper- and titanium-based materials doped with germanium, which cannot be considered an earth abundant element.

Again, there's a lot of research in this area. My personal interest in selecting the paper I discussed out of many possible such papers was more connected with my interest in thermal insulation materials for high temperature applications, but I fully appreciate the need to understand these materials in thermoelectric settings, which I discussed at greater length.

This post is, admittedly, esoteric, particularly for a political website, but I write these things to teach, if not anyone else, myself.

I trust you are having a pleasant weekend.

Stuff: Sankey diagrams of Material Flows for Polyurethane in the United States.

I don't have a lot of time to discuss this paper, but the graphics in it say something about mass transfer and the handwaving belief we're "just going to recycle" our stuff. The paper is here: Material Flows of Polyurethane in the United States

(Chao Liang, Ulises R. Gracida-Alvarez, Ethan T. Gallant, Paul A. Gillis, Yuri A. Marques, Graham P. Abramo, Troy R. Hawkins, and Jennifer B. Dunn Environmental Science & Technology 2021 55 (20), 14215-14224).

The graphics in question, material flows for polyurethane.


We are accumulating "stuff" at a massive rate, much of it derived from dangerous fossil fuels.